Microsoft Content Moderator helps boost your spam detection and content moderation efforts. Gain efficiency, save time, and protect your brand. Secure your online presence now!

Say Goodbye to Manual Spam Detection and Content Moderation – Hello Microsoft Content Moderator

Ever feel like you’re playing whack-a-mole with spam and inappropriate content?

The internet’s a wild place.

Every minute, there are millions of interactions. Comments, posts, user-generated content – it’s a goldmine for connection, but also a battlefield for brand safety.

Dealing with toxic content, spam, and policy violations? It’s a massive drain.

It eats up resources, time, and sanity.

Many businesses are stuck in this loop. They’re manually reviewing, reacting, and often falling behind.

The cost of getting it wrong? It’s not just a bad look. It’s reputation damage, legal issues, and a user base that vanishes.

That’s where AI steps in.

Especially in Security and Moderation.

This isn’t about replacing humans. It’s about empowering them.

It’s about making the impossible possible.

Enter Microsoft Content Moderator.

This isn’t just another tool. It’s a strategic partner.

It’s designed to tackle the biggest headaches in online content: spam, hate speech, explicit images, and more.

So, if you’re tired of fighting content battles with one hand tied behind your back, keep reading.

Because everything’s about to change.

Table of Contents

- What is Microsoft Content Moderator?

- Key Features of Microsoft Content Moderator for Spam Detection and Content Moderation

- Benefits of Using Microsoft Content Moderator for Security and Moderation

- Pricing & Plans

- Hands-On Experience / Use Cases

- Who Should Use Microsoft Content Moderator?

- How to Make Money Using Microsoft Content Moderator

- Limitations and Considerations

- Final Thoughts

- Frequently Asked Questions

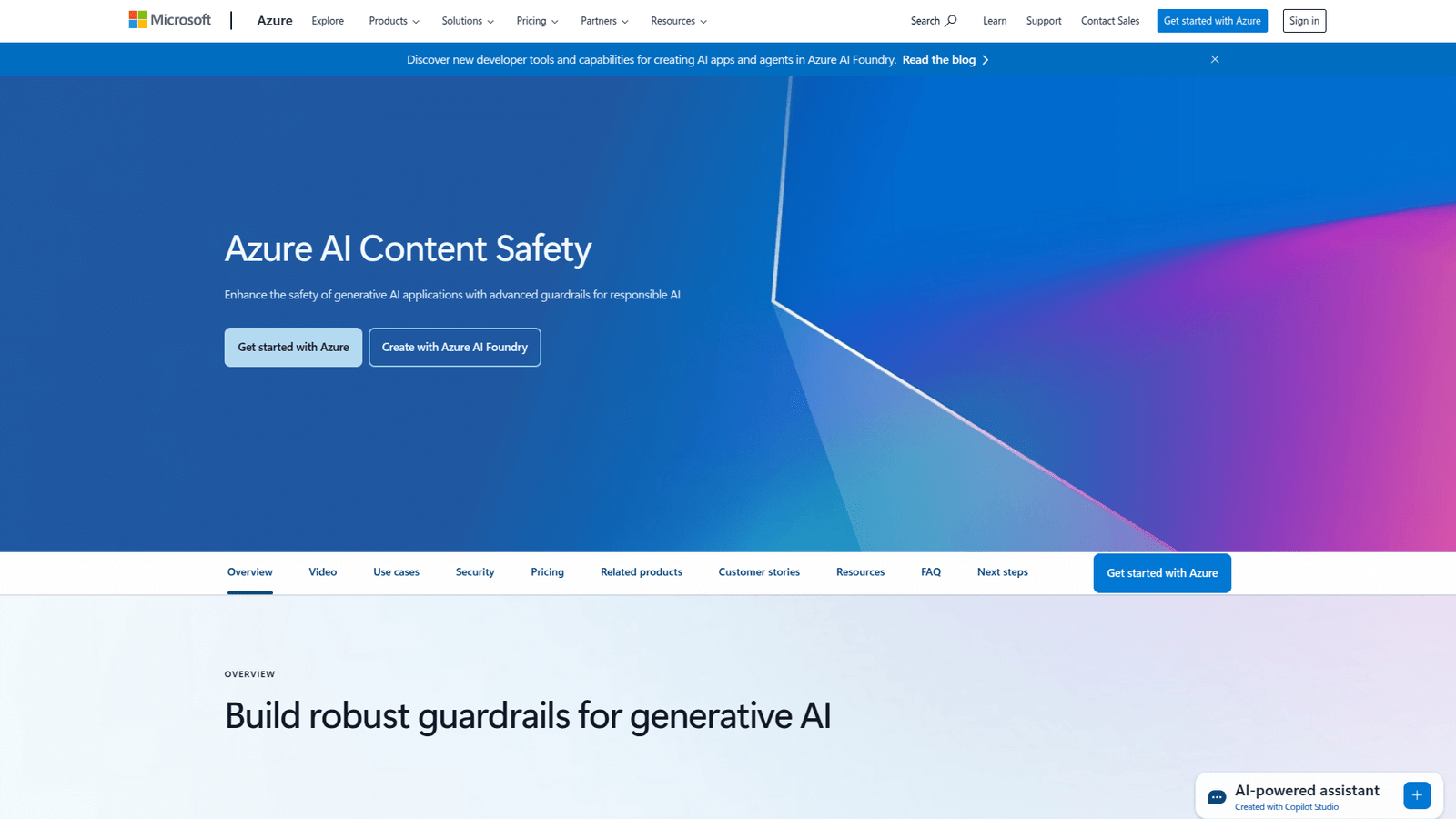

What is Microsoft Content Moderator?

Okay, let’s get straight to it.

What exactly is Microsoft Content Moderator?

Think of it as your digital bouncer. It stands at the door of your online platforms, checking every piece of content before it gets in.

It’s an AI service. Part of Microsoft Azure’s Cognitive Services.

Its core job? To help you find and filter out undesirable content. Fast.

This isn’t just about deleting spam. It’s about identifying a whole range of problematic stuff.

That includes text, images, and videos.

It’s built for businesses, developers, and organizations of all sizes.

Anyone who handles user-generated content.

If you’ve got forums, comment sections, social feeds, gaming platforms, or e-commerce sites, this tool is for you.

It works by using machine learning models. These models are trained on massive datasets.

They can spot patterns and characteristics of bad content.

It helps keep your brand safe.

It helps protect your users.

It helps maintain a positive online environment.

Instead of having a massive team manually reviewing every single post, you get an automated first line of defense.

This frees up your human moderators.

They can then focus on the nuanced cases. The ones AI might struggle with.

It’s about efficiency. It’s about scale.

It’s about making a tough job a lot easier.

The goal isn’t to be perfect right out of the box.

It’s to give you a powerful head start.

A solid foundation for your content policies.

It takes away the grunt work. The repetitive, soul-crushing tasks.

And it puts the power back in your hands.

You define the rules. You set the thresholds.

Microsoft Content Moderator enforces them.

It’s about control. It’s about peace of mind.

No more waking up to PR disasters caused by unmoderated content.

This tool helps you sleep better.

That’s what it is. A smart, automated guardian for your digital spaces.

Key Features of Microsoft Content Moderator for Spam Detection and Content Moderation

Alright, let’s break down what this thing actually does.

It’s got a suite of tools. Each designed to handle a specific type of content moderation problem.

- Text Moderation: Spotting the Nasty Bits in Words

This is fundamental. It scans text for a bunch of issues.

Think profanity. Hate speech. Sexually explicit language.

It uses a customizable profanity list. You can add your own terms.

It detects potential personally identifiable information (PII) too. Like email addresses or phone numbers.

Huge for compliance.

It also flags potential spam. Long, repetitive messages. URLs in unexpected places.

This helps cut down on the noise.

It gives you a confidence score for each flag.

So you know how likely it is to be problematic.

You can then set your own thresholds. Auto-block anything at 90% or above. Send anything from 50% up to 90% to human review.

It makes spam detection and content moderation so much faster.

No more reading every single comment.
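Here's what that threshold logic might look like in practice. This is a minimal sketch, not the service's own API: the `route` function and the two constants are hypothetical names, and it assumes your integration has already turned the service's response into a single score between 0 and 1.

```python
# Thresholds from the example above; tune them to your own policy.
BLOCK_AT = 0.90   # auto-block at or above this score
REVIEW_AT = 0.50  # send to human review at or above this score


def route(score: float) -> str:
    """Map a confidence score in [0, 1] to a moderation action."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score out of range: {score}")
    if score >= BLOCK_AT:
        return "block"
    if score >= REVIEW_AT:
        return "review"
    return "allow"


for s in (0.95, 0.70, 0.20):
    print(s, route(s))
```

Keeping the thresholds as named constants means your policy lives in one place. Tighten or loosen it without touching the rest of your code.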

- Image Moderation: Visual Scrutiny for Your Platforms

This is where it gets really powerful.

Images are a huge vector for bad content. Explicit imagery. Graphic violence. Child exploitation.

Microsoft Content Moderator scans images automatically.

It identifies adult and racy content.

It can detect text within images. That’s OCR (Optical Character Recognition).

So even if someone tries to embed banned words in an image, it catches it.

It can also detect faces. And match images against your own custom lists of known images.

This feature is critical for user-generated content platforms.

Think about profile pictures, shared photos, product listings.

It offers categories and confidence scores for each type of detected content.

This provides an objective assessment.

It’s a huge win for brand safety.

And for keeping your community clean.
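To make the OCR point concrete: once text has been pulled out of an image, you can check it against your own banned-word list like any other string. A rough sketch, assuming you already have the extracted text in hand. The function name and the sample terms are made up for illustration.

```python
import re


def banned_terms_in(ocr_text: str, banned: set[str]) -> list[str]:
    """Return banned terms found as whole words in OCR output, in order of first appearance.

    `banned` is expected to contain lowercase terms.
    """
    words = re.findall(r"[a-z0-9']+", ocr_text.lower())
    found: list[str] = []
    for word in words:
        if word in banned and word not in found:
            found.append(word)
    return found


hits = banned_terms_in("FREE Crypto!! Click HERE for casino bonus", {"crypto", "casino"})
print(hits)
```

Whole-word matching keeps innocent words that merely contain a banned term from being flagged. It also means creative spellings slip through, so treat this as a first pass.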

- Video Moderation: Keeping Moving Pictures Clean

Video is even harder to moderate manually.

It’s time-consuming. It’s labor-intensive.

Microsoft Content Moderator tackles this head-on.

It can process video frames. Extracting keyframes for image analysis.

It can also transcribe audio. Then apply text moderation to the spoken words.

This means it catches visual issues. And audible issues.

Even if someone tries to whisper something inappropriate in a video, it can pick it up, as long as the transcription catches the words.

It can mark out time codes where problematic content appears.

This makes human review much more efficient.

They don’t have to watch the whole video. Just jump to the flagged segments.

This is a game-changer for platforms that rely heavily on video content.

Think live streaming, user-uploaded videos, educational platforms.

It significantly reduces the risk of harmful video content appearing on your site.

Protecting your users and your reputation.
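Time codes are only useful if reviewers get them in a digestible form. One way to do that is to merge nearby flags into a handful of segments. The sketch below assumes you have already collected flagged ranges as `(start, end)` pairs in seconds. That shape, and the `merge_segments` helper, are illustrative choices rather than anything the service hands you directly.

```python
def merge_segments(
    flags: list[tuple[float, float]], gap: float = 2.0
) -> list[tuple[float, float]]:
    """Merge flagged (start, end) ranges that overlap or sit within `gap` seconds of each other."""
    merged: list[tuple[float, float]] = []
    for start, end in sorted(flags):
        if merged and start - merged[-1][1] <= gap:
            # Close enough to the previous segment: extend it.
            prev_start, prev_end = merged[-1]
            merged[-1] = (prev_start, max(prev_end, end))
        else:
            merged.append((start, end))
    return merged


flags = [(30.0, 32.0), (10.0, 12.0), (12.5, 15.0), (33.0, 35.0)]
print(merge_segments(flags))  # [(10.0, 15.0), (30.0, 35.0)]
```

Four raw flags become two segments. Your moderators jump to two spots instead of four.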

- Review Tool: The Human Touch with AI Efficiency

This isn’t just about automated flagging.

It comes with a built-in review tool.

This dashboard allows your human moderators to step in.

They can view all the flagged content.

They can make final decisions. Approve, reject, or further categorize.

It presents the flagged content clearly. Along with the AI’s confidence scores.

This means no more sifting through spreadsheets or disparate systems.

Everything is centralized. Streamlined.

It also allows for feedback.

Your human moderators can correct the AI.

This helps the AI learn and improve over time.

Making it even more accurate for your specific needs.

It’s the perfect blend of machine speed and human nuance.

A powerful combination for effective moderation.

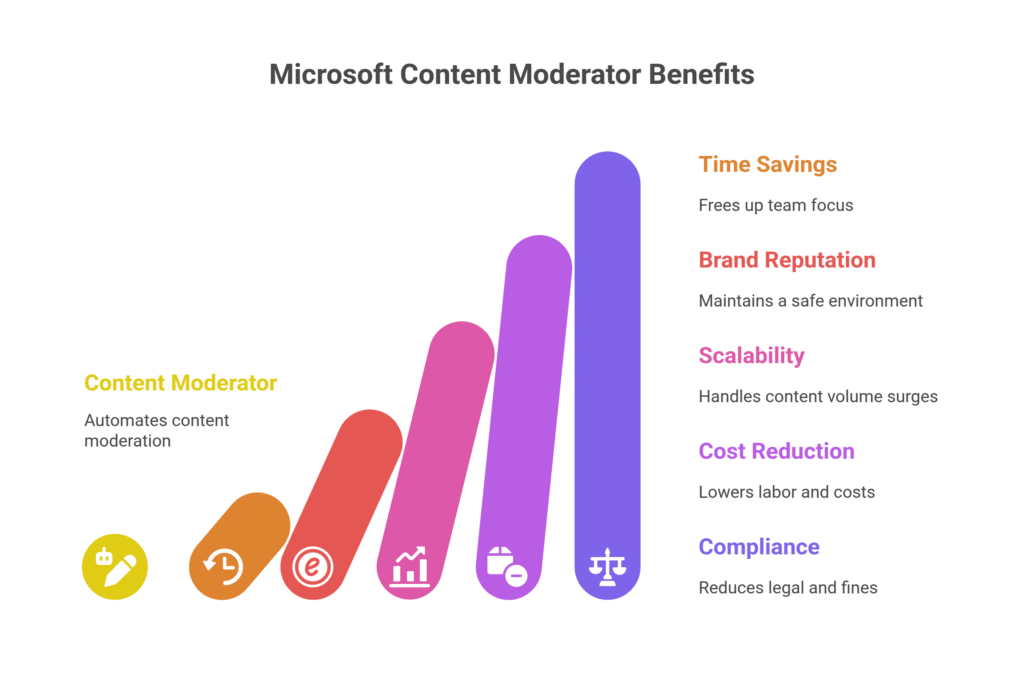

Benefits of Using Microsoft Content Moderator for Security and Moderation

Okay, so you know what it does. But why should you care?

What’s the actual payoff?

First, Massive Time Savings.

Imagine your team sifting through thousands, even millions, of user comments, images, or videos every day.

It’s not just slow. It’s impossible.

Microsoft Content Moderator automates the bulk of this work.

It can process content in seconds. Content that would take humans hours.

This frees up your team. They can focus on higher-value tasks.

Like engaging with users. Or developing new features.

It’s an immediate boost to productivity.

Next, Enhanced Brand Reputation and Trust.

Toxic content drives users away. It damages your brand.

A platform riddled with spam, hate speech, or explicit material loses credibility. Fast.

This tool helps you maintain a clean, safe online environment.

Users feel safer. They’re more likely to engage. They’re more likely to stick around.

This builds trust. And trust is gold in the digital world.

It shows you care about your community.

Another huge one: Scalability and Consistency.

As your platform grows, so does the content.

Adding more human moderators is expensive and slow.

AI scales instantly. It can handle a sudden surge in content volume without breaking a sweat.

It also applies moderation rules consistently.

Humans, bless their hearts, can be subjective.

AI follows the rules you set, every single time.

This ensures fair and predictable moderation across your platform.

Then there’s Reduced Operational Costs.

Less manual review means fewer staff hours.

Fewer staff hours mean lower labor costs.

It’s simple math.

While there’s a cost to the service, it’s often a fraction of what you’d spend on a large moderation team.

Especially for large-scale operations.

It’s an investment that pays for itself.

Finally, Improved Compliance and Risk Mitigation.

Many industries have strict regulations about user-generated content.

Think GDPR, COPPA, or industry-specific guidelines.

Failing to comply can lead to massive fines and legal headaches.

Microsoft Content Moderator helps you stay compliant.

It acts as a strong defense against problematic content.

It reduces your exposure to legal risks.

It helps protect you from reputational damage.

It’s about proactive protection. Not reactive firefighting.

These aren’t small benefits. They’re foundational.

They directly impact your bottom line. Your reputation. Your peace of mind.

It’s a strategic move for any business serious about its online presence.

Pricing & Plans

Okay, let’s talk money. Because that’s always the real question, right?

Microsoft Content Moderator is part of Azure Cognitive Services.

This means it operates on a pay-as-you-go model.

There isn’t a single, flat monthly fee like some SaaS tools.

You pay for what you use. Specifically, for the transactions you make.

What’s a transaction? It’s each API call.

So, if you send a text for moderation, that’s one text transaction.

If you send an image, that’s one image transaction.

The pricing is tiered. The more you use it, the cheaper each transaction becomes.

For example, for text moderation, you might pay a certain rate per thousand transactions for the first million transactions in a month.

Then a lower rate for the next few million, and so on.

This applies to image and video analysis as well.

Video processing can be more complex. It might be priced per minute of video analyzed, or per frame.
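To see how tiered pricing plays out, here is a small worked calculation. The rates and tier sizes are made-up placeholders, not Azure's actual prices. The sketch also assumes graduated tiers, where each tier's rate applies only to the transactions that fall inside it. Check the official pricing page before budgeting.

```python
# (tier size in transactions, price per 1,000 transactions).
# Hypothetical numbers for illustration only. None means "everything beyond".
TIERS = [
    (1_000_000, 1.00),
    (4_000_000, 0.80),
    (None, 0.50),
]


def monthly_cost(transactions: int) -> float:
    """Estimate a monthly bill under graduated tiered pricing."""
    remaining = max(0, transactions)
    cost = 0.0
    for size, per_thousand in TIERS:
        if remaining <= 0:
            break
        used = remaining if size is None else min(remaining, size)
        cost += used / 1000 * per_thousand
        remaining -= used
    return cost


for volume in (500_000, 2_000_000, 6_000_000):
    print(f"{volume:>9,} transactions -> {monthly_cost(volume):,.2f}")
```

With these placeholder rates, the average cost per transaction falls as volume grows, which is the whole point of tiering.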

They also offer a free tier.

Yes, you read that right. Free.

The free tier gives you a limited number of transactions per month.

This is awesome for testing it out. For small projects.

You can experiment. See how it fits into your workflow.

Before you commit any serious cash.

It’s like getting a free sample. But a really powerful one.

For larger operations, or if you exceed the free tier limits, you simply roll over to the standard pay-as-you-go rates.

There are no hidden fees. No long-term contracts.

You only pay for the actual usage.

How does this compare to alternatives?

Many competitors offer similar AI moderation services.

Some have subscription plans. Others have usage-based pricing.

Microsoft’s pricing is competitive. And the tiered structure can be very cost-effective for high-volume users.

Plus, being part of Azure, it integrates seamlessly if you’re already in the Microsoft ecosystem.

This can save you integration costs and headaches.

The precise pricing details are on the Azure website. They update them periodically.

So, it’s worth checking their official page for the latest rates.

But the takeaway is this: it’s flexible. It’s scalable.

And you can start testing it for free.

That’s a no-brainer for anyone curious about its capabilities.

Hands-On Experience / Use Cases

Alright, enough theory. How does this play out in the real world?

Let’s say you run a growing online gaming community.

Players are interacting constantly. Chatting, creating profiles, uploading game clips.

Before Microsoft Content Moderator, you had a small team trying to keep up.

They were drowning in reports of toxic chat. Inappropriate usernames. Nasty content in shared videos.

Response times were slow. Users got frustrated. Some even left.

Then, you integrated Microsoft Content Moderator.

Scenario 1: Chat Moderation in Real-Time

You set up the text moderation API.

Every chat message now passes through it before it’s even displayed.

A player types, “This game is [expletive] trash, and you’re all losers.”

The Content Moderator flags “expletive” and “losers” based on your configured profanity list and its offensive-language classification.

It gets a high confidence score.

You have rules in place: auto-redact severe profanity. Auto-block messages above a certain hate speech threshold.

The message is instantly either redacted to “This game is **** trash, and you’re all losers,” or blocked entirely.

The human moderation team only sees messages that are highly nuanced or borderline.

They get a digestible queue in the Review Tool.

Result: Cleaner chat. Happier players. Less work for your team.
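The redaction step in this scenario could be sketched like this. It assumes your integration has reduced the moderation response to a list of flagged terms with their character positions. The `start` and `term` field names are hypothetical, so map them from whatever the real response gives you.

```python
def redact(text: str, flagged: list[dict]) -> str:
    """Replace each flagged term with asterisks of the same length."""
    chars = list(text)
    for item in flagged:
        start = item["start"]
        length = len(item["term"])
        chars[start:start + length] = "*" * length
    return "".join(chars)


message = "This game is darn trash"
flagged = [{"start": message.index("darn"), "term": "darn"}]
print(redact(message, flagged))  # This game is **** trash
```

Replacing characters in place keeps the message length unchanged, so positions of other flagged terms stay valid while you work through the list.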

Scenario 2: User Profile Picture Moderation

A new user uploads a profile picture.

Instead of a human needing to approve it, the image moderation API kicks in.

It scans for adult content, racy content, and even checks for embedded text.

If the picture contains explicit imagery, it’s flagged immediately.

Your system automatically rejects it. The user gets a notification to upload a compliant image.

If it matches an image on one of your custom lists, it can be flagged for review based on your policy.

This saves countless hours.

It prevents embarrassing, brand-damaging images from ever appearing on your site.
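Here's one way the approve / review / reject flow for profile pictures might be wired up. Everything in it is an assumption for illustration: the `ImageSignals` container, the field names, and the default thresholds. The idea is simply to combine several signals into one decision.

```python
from dataclasses import dataclass


@dataclass
class ImageSignals:
    """Hypothetical container for values your integration extracted from the API response."""
    adult_score: float       # 0.0 to 1.0
    racy_score: float        # 0.0 to 1.0
    banned_text_found: bool  # e.g. banned words detected in embedded text


def decide(signals: ImageSignals, reject_at: float = 0.9, review_at: float = 0.5) -> str:
    """Return 'reject', 'review', or 'approve' for an uploaded profile picture."""
    if signals.adult_score >= reject_at:
        return "reject"   # clear violation: bounce it and ask for a new image
    if (signals.adult_score >= review_at
            or signals.racy_score >= review_at
            or signals.banned_text_found):
        return "review"   # borderline: queue for a human moderator
    return "approve"


print(decide(ImageSignals(adult_score=0.97, racy_score=0.99, banned_text_found=False)))
print(decide(ImageSignals(adult_score=0.10, racy_score=0.65, banned_text_found=False)))
print(decide(ImageSignals(adult_score=0.02, racy_score=0.05, banned_text_found=False)))
```

Only the clearest violations are rejected automatically. Anything ambiguous goes to a person, which matches the human-in-the-loop approach described throughout.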

Scenario 3: Moderating User-Uploaded Video Clips

Players upload their epic gameplay moments.

Sometimes, these clips contain inappropriate language in the audio, or graphic violence.

The video moderation API is triggered.

It transcribes the audio, detecting profanity and offensive speech.

It also extracts keyframes, running image analysis on them.

If a clip contains flagged content, the specific time codes are noted.

Your human moderators get a report. They jump directly to the flagged segments.

They don’t need to watch the entire 10-minute video. Just the 5-second problematic part.

This drastically cuts down review time.

It ensures you’re not hosting harmful content for long.

The usability is straightforward for developers.

It’s an API. So, you integrate it into your existing systems.

The documentation is extensive. Sample code is available.

For the moderation team, the Review Tool dashboard is intuitive.

It’s a visual way to manage flagged content.

The results? Noticeable.

Cleaner platforms. Fewer complaints. More efficient moderation teams.

It’s not just theoretical. It’s practical. It’s powerful.

Who Should Use Microsoft Content Moderator?

Who actually needs this thing?

It’s not for everyone. But for certain types of businesses, it’s a game-changer.

First up: Social Media Platforms and Community Forums.

If you run a platform where users are constantly posting, commenting, and interacting, you’re dealing with a firehose of content.

Microsoft Content Moderator is essential for maintaining a healthy environment.

It keeps the spam out. It curbs the hate speech. It blocks the inappropriate images.

Think Reddit, smaller niche forums, or even internal company social networks.

Next: Gaming Companies.

Online gaming is notorious for toxic chat and user-generated content issues.

From in-game chat to user-created levels or skins.

Moderating that volume manually is a nightmare.

This tool helps maintain a positive experience for players.

And keeps your brand clean.

Then: E-commerce Businesses.

User reviews. Product questions and answers. User-uploaded images for products.

These are all potential vectors for spam, inappropriate content, or even fake reviews.

Content Moderator can scan reviews for profanity.

It can check product images for compliance.

This protects your brand integrity. And builds buyer trust.

Also: Educational Platforms and E-learning Sites.

Student forums. Discussion boards. User-submitted assignments.

It’s critical to maintain a safe and respectful learning environment.

This tool helps prevent bullying, cheating, and the spread of inappropriate materials.

Finally: Any Business with User-Generated Content (UGC).

This is broad.

Could be a dating app. A local classifieds site. A content creation platform.

If users can upload anything, you need a robust moderation strategy.

Microsoft Content Moderator provides that strategy.

It’s for businesses that want to scale without compromising on content quality or safety.

It’s for companies that understand the cost of bad content.

And want to proactively protect their users and their brand.

If you’re drowning in content moderation tasks.

If you’re worried about brand safety or legal compliance.

If you’re trying to scale your platform but manual moderation is a bottleneck.

Then Microsoft Content Moderator is likely a tool you need to seriously consider.

How to Make Money Using Microsoft Content Moderator

Alright, let’s get down to brass tacks: how do you actually turn this AI tool into cash?

It’s not a direct money-making tool.

It’s an efficiency multiplier. A problem solver.

And solving problems, especially big ones, always leads to money.

- Offer Content Moderation as a Service (CMaaS):

This is a direct play.

Many smaller businesses, startups, or niche communities can’t afford a full-time moderation team.

Or they don’t have the technical expertise to set up AI moderation.

You can. You can build a business offering content moderation.

Use Microsoft Content Moderator as your backend engine.

Your clients send their user-generated content to your API endpoint.

Your system processes it with Content Moderator.

You provide them with a clean, moderated feed.

You can charge a monthly retainer. Or per transaction.

Imagine offering a “clean comments” service for blogs, or “safe image uploads” for small e-commerce sites.

You become their content guardian.

- Boost Internal Efficiency to Free Up Resources:

This is where most businesses benefit.

If you’re already running a platform with content moderation needs, this tool slashes your operational costs.

Let’s say you have 5 full-time moderators.

After integrating Content Moderator, they can handle 5X the volume. Or, you only need 1 or 2 moderators for edge cases.

The money saved on salaries, benefits, and training? That’s pure profit.

Or, you reallocate those resources.

Instead of moderating, your team can now focus on user growth. Product development. Marketing.

These activities directly contribute to revenue.

So, it’s not just cost-saving, it’s resource optimization that drives growth.

- Protect Brand Reputation & Prevent Costly Fines:

This is indirect, but massive.

A single major content violation can destroy a brand.

Think about the public backlash from unmoderated hate speech. Or explicit content appearing on your platform.

The public relations nightmare. The lost trust. The potential boycotts.

The fines for non-compliance with regulations (like GDPR) are no joke. Under GDPR, they can reach €20 million or 4% of global annual turnover, whichever is higher.

Microsoft Content Moderator acts as a strong preventative measure.

By preventing these disasters, you’re saving potentially huge amounts of money.

And you’re preserving your most valuable asset: your brand.

This translates to continued customer loyalty. Higher user retention. And ultimately, stable or growing revenue.

Consider Sarah, who runs a popular indie game studio.

Her game’s in-game chat was a cesspool. Players complained daily. Some quit.

She implemented Microsoft Content Moderator for her chat system.

Within weeks, the toxicity dropped by 80%.

Player retention improved significantly. New players joined and stayed.

Her team could now focus on game updates instead of constantly moderating.

This led to more game sales. Happier players. And a reputation for a safe, fun gaming environment.

She wasn’t directly “selling” moderation. She was selling a better game experience. Powered by moderation.

That’s how you make money with this tool. By solving real problems.

By making your core business better, safer, and more efficient.

Limitations and Considerations

Look, no tool is magic.

Microsoft Content Moderator is powerful. But it’s not perfect.

You need to be aware of its edges.

First, Accuracy and Nuance.

AI is good. It’s not human.

Sarcasm, irony, context-specific slang, subtle hate speech – these are still hard for AI.

A perfectly innocent phrase in one context could be highly offensive in another.

The AI will give you a confidence score. But a high score doesn’t always mean it’s 100% correct.

False positives (flagging good content) and false negatives (missing bad content) can happen.

This means you still need human oversight.

The Review Tool is critical for this.

Your team validates the AI’s decisions. And helps it learn over time.

Next, Customization and Training.

Out of the box, it’s generic.

To get the best results, you need to customize it.

This means adding your own custom term lists.

Training it on your specific content.

If you run a gaming platform, “GG” means “good game.” For a general AI, it might mean nothing.

You’ll need to feed it examples. Give it feedback.

This takes effort. It’s not a plug-and-play solution where you just flip a switch and it’s perfect.

It’s an ongoing process of refinement.

Then there’s the Learning Curve for Integration.

This is an API-based service.

It means you need developers. Or someone with technical skills.

You’re not just downloading software and clicking “install.”

You’re integrating it into your existing platform.

This requires coding. Understanding APIs. Setting up authentication.

For small businesses without a tech team, this could be a barrier.

Consider the initial setup time and resources required.
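To give a feel for the integration work, here is a rough sketch of preparing and sending a text-screening request using only the Python standard library. Treat the details as assumptions to verify against the official REST reference: the URL path, the query parameters, and the `Ocp-Apim-Subscription-Key` header are written from memory of that documentation, and the endpoint and key shown are placeholders.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_screen_request(endpoint: str, key: str, text: str) -> tuple[str, dict[str, str], bytes]:
    """Assemble URL, headers, and body for a text-screening call (nothing is sent here)."""
    # Assumed query parameters: request classification and PII detection for English text.
    query = urlencode({"classify": "True", "PII": "True", "language": "eng"})
    # Assumed path; confirm it in the official API reference.
    url = f"{endpoint.rstrip('/')}/contentmoderator/moderate/v1.0/ProcessText/Screen?{query}"
    headers = {
        "Content-Type": "text/plain",
        "Ocp-Apim-Subscription-Key": key,  # key from your Azure resource
    }
    return url, headers, text.encode("utf-8")


def send(url: str, headers: dict[str, str], body: bytes) -> dict:
    """POST the prepared request and return the parsed JSON response."""
    req = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


# Placeholder endpoint and key; replace with the values from your own resource.
url, headers, body = build_screen_request(
    "https://example-region.api.cognitive.microsoft.com", "YOUR_KEY", "hello world"
)
print(url)
```

Splitting "build" from "send" makes the request easy to unit test without touching the network. Keep the key in an environment variable or secret store, never in source control.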

Also, Cost for High Volume.

While the pay-as-you-go model is flexible, extremely high volumes can add up.

If you’re processing billions of transactions, those fractions of a cent per transaction start to become real money.

You need to monitor your usage. And budget accordingly.

It’s cheaper than human review at scale, but it’s not free.

Finally, Evolving Content and Evasion Tactics.

Bad actors are always adapting.

They find new ways to bypass moderation. Creative spellings of profanity. New slang. Obscure symbols.

The AI models are updated by Microsoft. But there will always be a lag.

You’ll need to stay vigilant.

And update your custom lists regularly.

It’s an ongoing arms race.
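One common countermeasure is to normalize text before checking it against your custom list. The sketch below is a simple illustration of that idea, not something the service does for you as far as this article establishes. The substitution table and helper names are assumptions, and the blocklist entry is a harmless stand-in.

```python
import re

# Map common look-alike characters back to letters (illustrative, far from complete).
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)


def normalize(word: str) -> str:
    """Undo simple obfuscation: look-alike characters, separators, stretched letters."""
    word = word.lower().translate(SUBSTITUTIONS)
    word = re.sub(r"[^a-z]", "", word)         # drop separators such as "s.p.a.m"
    return re.sub(r"(.)\1{2,}", r"\1", word)   # collapse runs of 3+ identical letters to one


def matches_blocklist(message: str, blocklist: set[str]) -> list[str]:
    """Return the original tokens whose normalized form is on the blocklist."""
    return [token for token in message.split() if normalize(token) in blocklist]


print(matches_blocklist("buy ch34p sp4m now, s.p.a.m everywhere", {"spam"}))
```

Aggressive normalization catches more evasions but also raises false positives, since unrelated words can collapse into a blocked term. Pair it with human review rather than auto-blocking on it.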

So, while Microsoft Content Moderator is a massive leap forward, it’s not a magic bullet.

It requires planning, integration effort, and ongoing human involvement.

But for the problems it solves, these considerations are often small prices to pay.

Final Thoughts

So, what’s the real deal with Microsoft Content Moderator?

It’s not just another piece of tech.

It’s a strategic shift for anyone serious about Security and Moderation.

We’re in an age where online platforms are under constant threat from spam, toxicity, and harmful content.

Manual moderation simply doesn’t scale. It’s too slow, too expensive, and frankly, too soul-crushing for human teams.

Microsoft Content Moderator steps in as your automated first line of defense.

It handles the grunt work. The repetitive, high-volume analysis of text, images, and videos.

It identifies the obvious violations. It flags the suspicious content.

This frees up your human moderators to focus on the truly complex, nuanced cases.

The situations where human judgment is irreplaceable.

The benefits are clear: you save massive amounts of time.

You drastically cut operational costs.

You protect your brand’s reputation.

You foster a safer, more positive online environment for your users.

And you stay compliant with an ever-growing list of regulations.

Yes, it requires integration. Yes, it needs some tuning.

But the payoff is huge.

It’s an investment in your platform’s longevity. Its growth. Its safety.

It’s not just about filtering out the bad stuff.

It’s about building a better, more trustworthy online experience.

For everyone.

If you’re running any platform with user-generated content, you simply cannot afford to ignore this.

It’s how the pros are doing it.

It’s how you get ahead.

So, stop fighting content battles with a butter knife.

Grab a better tool.

Give Microsoft Content Moderator a serious look.

It might just be the most impactful decision you make for your platform this year.

Visit the official Microsoft Content Moderator website

Frequently Asked Questions

1. What is Microsoft Content Moderator used for?

Microsoft Content Moderator is an AI service used for detecting and filtering out potentially offensive, inappropriate, or spam content from text, images, and videos. It helps businesses maintain a safe and clean online environment for their users.

2. Is Microsoft Content Moderator free?

Microsoft Content Moderator offers a free tier with a limited number of transactions per month, allowing users to test its capabilities. Beyond the free tier, it operates on a pay-as-you-go model, where you only pay for the volume of content you process.

3. How does Microsoft Content Moderator compare to other AI tools?

Microsoft Content Moderator is a robust tool within the Azure ecosystem, offering strong capabilities for text, image, and video moderation. Its key differentiator often lies in its seamless integration with other Microsoft services and its pay-as-you-go scalability, making it competitive with other leading AI moderation solutions.

4. Can beginners use Microsoft Content Moderator?

While the Review Tool interface is user-friendly for human moderators, integrating Microsoft Content Moderator into an application typically requires some technical knowledge, as it’s an API-based service. Developers will find it straightforward to implement with proper documentation.

5. Does the content created by Microsoft Content Moderator meet quality and optimization standards?

Microsoft Content Moderator doesn’t “create” content. Instead, it moderates existing content by identifying and flagging problematic material based on predefined rules and machine learning models. Its output helps ensure that user-generated content on your platform meets your quality and safety standards, improving the overall user experience and protecting your brand.

6. Can I make money with Microsoft Content Moderator?

Yes, indirectly. By using Microsoft Content Moderator, you can save significant operational costs related to manual content review, which boosts your profit margins. You can also offer content moderation as a service to other businesses, leveraging the tool to provide a specialized service and generate revenue. Furthermore, by maintaining a clean platform, you protect your brand reputation and foster user trust, which contributes to long-term business growth and profitability.