Google Perspective API revolutionizes Spam Detection and Content Moderation. Save time, improve accuracy, and protect your platform. Get started today!

Why Google Perspective API Is a Smart Choice for Spam Detection and Content Moderation

Alright, let’s talk about something real.

If you’re running an online platform, managing a community, or just dealing with user-generated content, you know the headache.

Spam.

Hate speech.

Toxic comments.

It’s a constant battle.

And it takes up serious time and resources.

Time you could be spending growing, building, making money.

Instead, you’re sifting through junk, playing whack-a-mole with bad actors.

Manual moderation?

It’s slow.

It’s draining.

And honestly, it’s not even that effective at scale.

You miss stuff.

Stuff gets through.

Your platform suffers.

Your users suffer.

Enter AI.

Specifically, AI tools built for this exact problem.

Tools that can process text, understand context, and flag harmful content faster and more consistently than any human ever could alone.

One name keeps coming up in Security and Moderation conversations.

Google Perspective API.

This isn’t just another piece of tech.

It’s a potential game-changer.

Especially if your goal is to clean up your digital space, protect your users, and free up your team.

I dug into it.

I looked at how it works.

What it promises.

And what it actually delivers.

If you’re wrestling with Spam Detection and Content Moderation, stick around.

This might be the solution you’ve been looking for.

Table of Contents

- What is Google Perspective API?

- Key Features of Google Perspective API for Spam Detection and Content Moderation

- Benefits of Using Google Perspective API for Security and Moderation

- Pricing & Plans

- Hands-On Experience / Use Cases

- Who Should Use Google Perspective API?

- How to Make Money Using Google Perspective API

- Limitations and Considerations

- Final Thoughts

- Frequently Asked Questions

What is Google Perspective API?

Okay, straight talk.

What is Google Perspective API?

Think of it as a super-powered text analysis tool.

Built by Google, no less.

Its main gig?

It uses machine learning to score the potential impact a comment or text might have on a conversation.

Specifically, how likely it is to be perceived as harmful or toxic.

It doesn’t just flag bad words.

That’s too basic.

It tries to understand the *intent* and the *severity*.

Is this comment just disagreeing, or is it actively attacking someone?

Is this spam, or a genuine question?

It works by looking at various “attributes” of the text.

Things like Toxicity, Severe Toxicity, Identity Attack, Insult, Threat, Sexually Explicit, and more.

You send it a piece of text – a comment, a post, whatever.

It sends back scores for these different attributes.

A high Toxicity score means the model thinks it’s likely to be perceived as toxic.

A low score means it’s probably fine.
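To make that send-text, get-scores loop concrete, here is a minimal Python sketch. It builds the JSON body for the `comments:analyze` method and pulls the summary scores out of a response. The field names (`comment`, `requestedAttributes`, `attributeScores`, `summaryScore`) follow the format shown in Google’s developer documentation, so double-check them against the current docs. The helper names are hypothetical, and the sample response values are invented placeholders, not real API output.

```python
import json

# Example attribute names; availability varies by attribute and language.
DEFAULT_ATTRIBUTES = ("TOXICITY", "INSULT", "THREAT")


def build_analyze_body(text, attributes=DEFAULT_ATTRIBUTES, languages=("en",)):
    """Build the JSON body for a comments:analyze request (hypothetical helper)."""
    return {
        "comment": {"text": text},
        "languages": list(languages),
        # Each requested attribute maps to an (empty, optional) config object.
        "requestedAttributes": {name: {} for name in attributes},
    }


def extract_scores(response):
    """Map each returned attribute to its summary score (between 0 and 1)."""
    return {
        name: data["summaryScore"]["value"]
        for name, data in response.get("attributeScores", {}).items()
    }


if __name__ == "__main__":
    body = build_analyze_body("You make a fair point, but I disagree.")
    print(json.dumps(body, indent=2))

    # Placeholder response in the documented shape; the numbers are invented
    # for illustration, not real API output.
    sample_response = {
        "attributeScores": {
            "TOXICITY": {"summaryScore": {"value": 0.05, "type": "PROBABILITY"}},
            "INSULT": {"summaryScore": {"value": 0.02, "type": "PROBABILITY"}},
        },
        "languages": ["en"],
    }
    print(extract_scores(sample_response))  # {'TOXICITY': 0.05, 'INSULT': 0.02}
```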

Who is this built for?

Anyone who deals with user-generated content at scale.

Online communities, social media platforms, news websites, forums, game chats, e-commerce sites with reviews.

If people can type stuff on your platform, you probably need this.

It’s a tool for developers and moderation teams.

You integrate it into your existing systems.

It’s not a standalone app you log into and click buttons.

It’s an API.

You send it data programmatically, it sends data back.

This allows you to automate parts of your moderation workflow.

Filter comments before they go live.

Prioritise comments for human review.

Automatically remove or hide the worst stuff.

It’s designed to handle the sheer volume of content that pours in.

Because let’s be real, manual moderation simply can’t keep up anymore.

Especially not if you’re growing.

So, in short: Google Perspective API is an AI tool that scores text for potential toxicity and other harmful attributes, helping platforms automate and improve their content moderation efforts.

It’s about making your online space cleaner and safer.

Without needing an army of moderators.

Key Features of Google Perspective API for Spam Detection and Content Moderation

- Attribute Scoring:

This is the core of it.

Perspective API doesn’t just say “this is bad”.

It gives you scores for different types of badness.

Toxicity, Severe Toxicity, Identity Attack, Insult, Threat, and Profanity, plus experimental attributes such as Sexually Explicit, Flirtation, and Spam.

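Each attribute gets a score between 0 and 1.

The score is a probability: a “Toxicity” score of 0.9 means that roughly 9 out of 10 readers would be likely to perceive the text as toxic.

A score of 0.1 means most readers probably wouldn’t. A higher score means *more likely* to be perceived as toxic, not necessarily *more* toxic.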

This granular data is crucial for Spam Detection and Content Moderation.

You can set thresholds.

Maybe anything over 0.8 Toxicity gets removed instantly.

Anything between 0.6 and 0.8 gets flagged for human review.

Anything below 0.6 goes through.

This allows for nuanced filtering, not just a blunt “good or bad” assessment.

It helps distinguish between genuinely harmful content and passionate but acceptable discussion.
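The three bands above translate directly into a small routing function. This is a minimal sketch using the example cutoffs from the text (remove above 0.8, review from 0.6 to 0.8, allow below 0.6); the names `Action` and `route` are hypothetical, and the thresholds are starting points to tune for your own community.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"
    REVIEW = "review"
    ALLOW = "allow"


def route(toxicity, remove_above=0.8, review_from=0.6):
    """Map a Toxicity score (0 to 1) to an action using the example bands."""
    if toxicity > remove_above:      # over 0.8: remove instantly
        return Action.REMOVE
    if toxicity >= review_from:      # 0.6 to 0.8: human review
        return Action.REVIEW
    return Action.ALLOW              # below 0.6: goes through


for score in (0.92, 0.7, 0.1):
    print(score, route(score).value)
# 0.92 remove
# 0.7 review
# 0.1 allow
```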

- Spam Attribute:

Okay, specific to the topic here: Spam.

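Perspective API has offered a “Spam” attribute, which scores irrelevant and unsolicited commercial content.

It has been listed as experimental and English-only, so check its current status in the documentation before you build on it.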

Think irrelevant links, repetitive phrases, promotional messages, bots posting nonsense.

Dealing with spam is different from dealing with hate speech.

Spam clutters your platform.

It makes it harder for real users to find valuable content or engage.

The Spam attribute gives you a direct way to score this type of unwanted content.

You can use this score to filter out obvious spam automatically.

Or flag it for review by your team.

It’s a powerful layer in your defence against low-quality or malicious content.

- Scalability and Speed:

This is where AI really shines compared to humans.

Perspective API runs on Google’s infrastructure and can score text quickly.

The practical ceiling is your project’s quota: ten comments a day fit easily within the default, while ten million a day means requesting a higher quota first.

It provides near real-time scoring.

You can integrate it so that comments are analysed the moment they are posted.

This means you can catch harmful content *before* it’s seen by many users.

Or remove spam instantly, preventing it from cluttering feeds.

Speed is crucial in moderation.

A toxic comment left up for hours can cause significant damage.

Spam lingering around makes your site look unprofessional.

Perspective API helps you react fast.

It massively reduces the time between a harmful post appearing and it being dealt with.

This efficiency is a major reason platforms turn to AI for Security and Moderation.
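Because throughput is capped by a per-project requests-per-second quota (the documented default has been 1 query per second; check your own project), a real-time integration usually paces its calls. Below is a minimal single-threaded sketch of a client-side rate limiter. The class and its parameters are hypothetical, and a production system would more likely put requests on a queue with retries.

```python
import time


class RateLimiter:
    """Space calls at least 1/qps seconds apart (single-threaded sketch)."""

    def __init__(self, qps, clock=time.monotonic, sleep=time.sleep):
        self.interval = 1.0 / qps
        self.clock = clock
        self.sleep = sleep
        self.next_slot = None  # earliest time the next call may start

    def wait(self):
        """Block until the next call is allowed, then reserve the slot after it."""
        now = self.clock()
        if self.next_slot is not None and now < self.next_slot:
            self.sleep(self.next_slot - now)
            now = self.next_slot
        self.next_slot = now + self.interval


# Usage: create one limiter and call limiter.wait() before each API request.
limiter = RateLimiter(qps=1)
```

The clock and sleep functions are injectable so the pacing can be tested without actually waiting.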

Benefits of Using Google Perspective API for Security and Moderation

Why bother with integrating this?

What’s the payoff?

First off, Massive Time Savings.

Manual moderation is a time sink.

Your team spends hours reading comments, trying to figure out if they cross the line.

Perspective API automates the first pass.

It flags the obvious stuff, both toxic content and spam.

Your human moderators can then focus on the edge cases.

The comments that are tricky to judge.

This frees up your team for higher-value tasks.

Tasks that actually move the needle, like community building or content creation.

Next, Improved Accuracy and Consistency.

Humans make mistakes.

They get tired.

Their judgment can be inconsistent.

What one moderator flags, another might not.

Perspective API provides a consistent, data-driven assessment based on its training data.

It applies the same rules every time.

This leads to more consistent moderation decisions across your platform.

While not perfect (AI can make mistakes too, more on that later), it provides a strong baseline.

You also get Better User Experience.

A platform overrun with spam, insults, and threats is unpleasant.

Users leave.

They don’t feel safe or welcome.

By effectively tackling Spam Detection and Content Moderation, you create a cleaner, more positive environment.

This encourages healthy discussion and keeps users engaged.

A clean community attracts more users and retains existing ones.

It’s directly tied to the health and growth of your platform.

Then there’s Reduced Moderator Burnout.

Reviewing harmful content all day is emotionally taxing.

It’s tough work.

Automating the most egregious content reduces the load on your human moderators.

They see less disturbing content, less often.

This can significantly improve their well-being and job satisfaction.

Happy moderators are effective moderators.

Finally, Scalability.

As your platform grows, so does the volume of content.

Scaling a human moderation team is expensive and complex.

Scaling with Perspective API is much simpler.

It can handle far more requests (once your quota is raised to match) without a proportional increase in cost or effort on your end.

It grows with you.

These aren’t small wins.

They are fundamental improvements to how you manage your online community and protect your brand.

Pricing & Plans

Okay, the money question.

Is this going to cost a fortune?

Google Perspective API is built by Jigsaw (a unit within Google) and Google’s Counter Abuse Technology team, with a focus on fighting toxicity.

You access it through a Google Cloud project: you enable the API there and create an API key.

Here’s the good news: Perspective API has been offered free of charge.

There’s no per-request bill and no subscription.

What you do get is a quota.

A request is typically defined as sending a piece of text to the API for analysis, asking for scores on one or more attributes.

Your project can make a limited number of requests per second. The documented default has been 1 query per second, and you can ask Google for more if your volume needs it.

Terms and quotas can change, so always check the official Perspective API documentation before you plan around them.

But the default quota is usually enough to get a feel for how it works and see if it’s right for you.

The real constraint at scale is throughput, not price.

If you have millions of comments or posts per day, one request per second won’t cover it, so plan for a quota increase early.

Attribute availability varies, too.

The core attributes like Toxicity are production-ready and supported in a range of languages.

Others, like “Spam”, have been listed as experimental and English-only, so check their current status before you depend on them.

If you have a viral moment and traffic explodes, you won’t get a bigger bill, but requests beyond your quota will be rejected, so your integration needs to handle that gracefully (queue, retry, or fall back to human review).

Compared to alternatives?

Other AI moderation APIs exist, from companies like OpenAI (which offers a moderation endpoint, though its models are more general purpose) and Amazon (Rekognition handles images; text analysis is a separate service), as well as specialized moderation platforms.

Pricing structures vary.

Some have fixed monthly fees based on volume tiers.

Some are pay-per-use.

Perspective’s free access is hard to beat on price, and it runs on Google’s infrastructure.

It’s designed for scale and reliability.

The key is to estimate your volume.

How many pieces of text do you need to analyse per day, week, or month?

Translate that into requests per second, including your busiest peaks, and compare it with your quota.

Since one request can ask for several attributes at once, adding attributes doesn’t have to multiply your request count.

Start with the default quota.

Test it out.

See the volume of calls you actually make.

Then request more quota as you scale up.

The real cost is engineering time: building the integration, tuning thresholds, and keeping humans in the loop. Compare that to the cost of hiring more human moderators or the cost of a toxic, spam-filled platform (lost users, damaged reputation).

For many platforms, the ROI on AI moderation tools like this is significant.

It saves you money in the long run by increasing efficiency and protecting your community.
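Because Perspective API usage is limited by a requests-per-second quota, it helps to turn a daily volume estimate into a rate. Here’s a rough sketch. It assumes one request per comment, and the burst multiplier is a placeholder you would replace with numbers from your own traffic.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def average_qps(requests_per_day):
    """Average requests per second if traffic were spread evenly over a day."""
    return requests_per_day / SECONDS_PER_DAY


def peak_qps(requests_per_day, burst_factor):
    """Rough peak: the average rate times an assumed burst multiplier."""
    return average_qps(requests_per_day) * burst_factor


# Assumes one request per comment, even when it asks for several attributes.
daily_comments = 1_000_000
print(round(average_qps(daily_comments), 2))  # 11.57
print(round(peak_qps(daily_comments, 3), 2))  # 34.72
```

Even the average here is well above a 1-query-per-second quota, which is why quota planning matters more than price.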

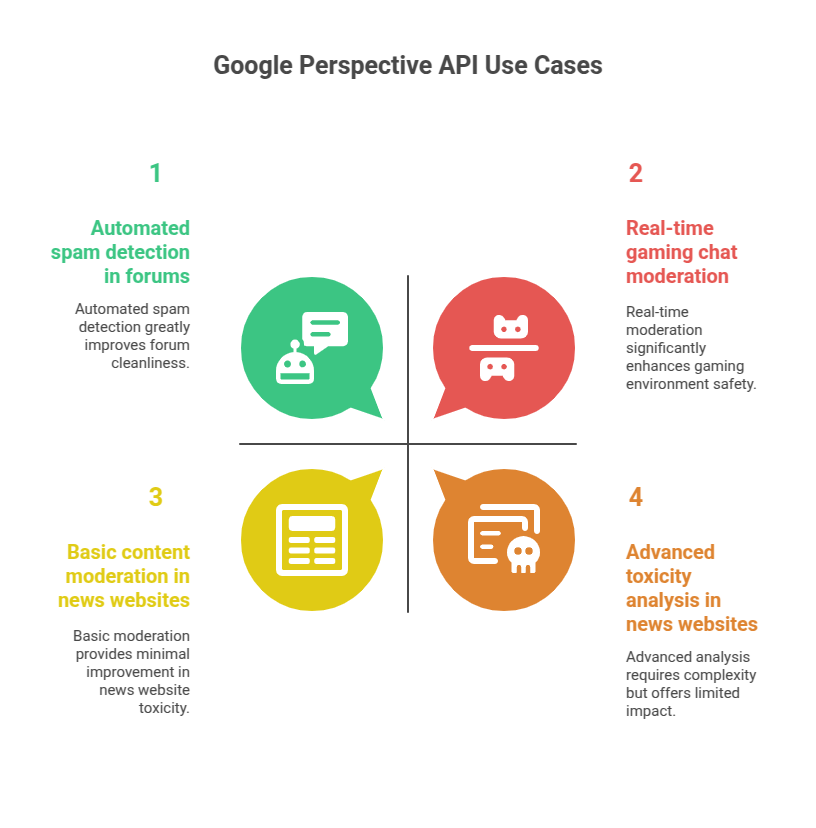

Hands-On Experience / Use Cases

Alright, how does this actually play out in the real world?

Let’s look at how platforms actually use Google Perspective API for Spam Detection and Content Moderation.

Imagine you run an online forum dedicated to a niche hobby.

User engagement is good, but spam bots are starting to appear.

They post links to shady websites, irrelevant promotions, pure junk.

Manually deleting these takes up valuable admin time.

You integrate Perspective API and request the “Spam” attribute for every new post.

Now, every new post or comment is sent to the API.

If the “Spam” score comes back above, say, 0.9, the system automatically marks it as spam.

These posts can be hidden immediately or sent to a separate queue for a quick human check if needed.

Result?

Most spam is gone before users even see it.

The forum stays clean.

Admins spend minutes on spam per day, not hours.

Another case: A news website with a lively comments section.

Discussions can get heated.

Sometimes, they cross the line into personal attacks, insults, or threats.

This drives away civil commenters and makes the section a toxic mess.

Integrating Perspective API allows them to score comments for Toxicity, Insult, Threat, etc.

Comments with very high scores (e.g., Toxicity > 0.95, Threat > 0.8) are automatically removed.

Comments with moderate scores (e.g., Toxicity between 0.6 and 0.95) are flagged for human moderators.

They can then quickly review this prioritised list.

The API filters out the worst of the worst instantly.

It also highlights the questionable stuff that needs human nuance.

The moderation team becomes much more efficient.

They aren’t wading through thousands of comments; they’re focusing on the few hundred flagged ones.

User experience improves dramatically as the most offensive content is removed almost instantly.
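The news-site rules can be sketched as a small routing function plus a review queue sorted by Toxicity, so moderators see the most likely problems first. The cutoffs are the example values from the text. In this sketch, any Toxicity score from 0.6 up to the 0.95 removal cutoff goes to review. The function names, the comment dictionary layout, and the sample scores are hypothetical placeholders.

```python
def route_comment(scores):
    """Return 'remove', 'review', or 'allow' for a dict of attribute scores."""
    toxicity = scores.get("TOXICITY", 0.0)
    threat = scores.get("THREAT", 0.0)
    if toxicity > 0.95 or threat > 0.8:  # worst of the worst: remove
        return "remove"
    if toxicity >= 0.6:                   # questionable: needs a human
        return "review"
    return "allow"


def review_queue(comments):
    """Comments routed to review, highest Toxicity first."""
    flagged = [c for c in comments if route_comment(c["scores"]) == "review"]
    return sorted(flagged, key=lambda c: c["scores"].get("TOXICITY", 0.0), reverse=True)


# Made-up scores for illustration.
comments = [
    {"id": 1, "scores": {"TOXICITY": 0.65}},
    {"id": 2, "scores": {"TOXICITY": 0.91, "THREAT": 0.2}},
    {"id": 3, "scores": {"TOXICITY": 0.2, "THREAT": 0.85}},
]
print([c["id"] for c in review_queue(comments)])  # [2, 1]
```

Comment 3 never reaches the queue: its Threat score alone triggers removal.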

Think about a gaming platform chat.

Millions of messages per day.

Rapid-fire text.

Manual moderation is impossible at this scale.

Perspective API can score messages in real-time.

High scores for Severe Toxicity, Identity Attack, or Sexually Explicit can trigger immediate mutes or bans.

This protects players, especially younger ones, from harassment and harmful language.

It makes the gaming environment safer and more enjoyable.

From a developer standpoint, the API is well documented.

Google provides clear documentation with sample code. You can call the API with plain HTTP requests or with Google’s general-purpose API client libraries (for languages such as Python and Node.js).

Integration requires coding work to send text to the API and handle the responses.

It’s not a no-code solution.

But for teams with development resources, integrating it into existing platforms (like forum software, chat servers, comment systems) is standard API work.
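For teams starting that integration, here is a minimal standard-library Python sketch that calls the `comments:analyze` endpoint over plain HTTPS. The endpoint URL and the `doNotStore` field follow Google’s published REST documentation, but verify them against the current docs. The helper names are hypothetical. Building the request is kept separate from sending it, so the request can be checked offline.

```python
import json
import urllib.parse
import urllib.request

# REST endpoint for the comments:analyze method (v1alpha1).
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def make_analyze_request(text, api_key, attributes=("TOXICITY",), do_not_store=True):
    """Build, but do not send, a POST request asking for the given attributes."""
    body = {
        "comment": {"text": text},
        "requestedAttributes": {name: {} for name in attributes},
        # Ask the API not to store the submitted text.
        "doNotStore": do_not_store,
    }
    query = urllib.parse.urlencode({"key": api_key})
    return urllib.request.Request(
        f"{ANALYZE_URL}?{query}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def analyze(text, api_key, attributes=("TOXICITY",), timeout=10):
    """Send the request and return the parsed JSON response (needs network).

    urlopen raises urllib.error.HTTPError for error responses, such as a
    request rejected for exceeding your quota.
    """
    request = make_analyze_request(text, api_key, attributes)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a real key, `analyze("Great write-up, thanks!", "YOUR_API_KEY")` returns the raw JSON response, including its `attributeScores` object. `YOUR_API_KEY` is a placeholder.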

The results seen by platforms that implement it properly are consistent: a significant reduction in visible harmful content and spam, improved moderator efficiency, and a better experience for users.

It works by becoming a fundamental layer of the platform’s infrastructure.

Who Should Use Google Perspective API?

So, is this tool for everyone?

Probably not every single person on the planet.

But if you fit certain profiles, it’s a strong contender.

Online Community Managers: If you run a forum, a large Slack group, a Discord server, or any platform where users interact heavily, this is for you. Managing discussions, preventing toxicity, and keeping spam out is your daily grind. Perspective API makes that job way less painful.

Platform Developers: Building a new social network, a content platform, a game with chat features, or anything that accepts user input? Integrating moderation early is crucial. Perspective API offers a powerful, scalable solution right out of the gate.

News Outlets and Publishers: Comments sections can be a minefield. Filtering out abusive comments while allowing legitimate debate is tough. Perspective API helps news sites manage comment quality at scale, protecting their brand and their audience.

E-commerce Sites with Reviews/Q&A: Spam reviews, fake testimonials, inappropriate questions. These dilute trust and make your site look bad. Use the API to flag irrelevant or harmful user-generated content on product pages.

Gaming Companies: In-game chat, forums, community hubs. Gaming communities are known for intense communication, sometimes crossing lines. Protecting players from harassment is a top priority. Google Perspective API provides tools to do this automatically.

Social Media Platforms (of any size): Even small, niche social platforms need moderation. If you’re building one, you’ll eventually face the challenge of user-generated content. Starting with an AI assist like Perspective API is smart.

Agencies Providing Moderation Services: If you’re an agency offering content moderation to clients, integrating AI tools like Google Perspective API can increase your team’s efficiency and allow you to handle more clients or larger volumes without proportionally increasing staff.

Basically, anyone whose business relies on user-generated content and who struggles with the volume and nature of that content.

If manual moderation is your bottleneck, or if your platform’s quality is suffering because of spam or toxicity, Google Perspective API is definitely worth looking into.

It’s not for someone who just has a simple blog with occasional comments.

It’s for those facing a significant scale problem in Security and Moderation.

You need developers to integrate it.

You need to understand the data it provides and how to set rules based on it.

But if you fit the profile, it can be a game-changer.

How to Make Money Using Google Perspective API

Okay, you’ve got this powerful tool.

How do you turn that into cash?

It’s a backend API, not a direct money-making app like a content generator whose output you can sell.

But you can leverage its capabilities to build revenue streams or increase profitability.

- Offer Moderation-as-a-Service:

You can build a service on top of Perspective API.

Clients (like small forums, blogs, or platforms without dedicated dev teams) need moderation but can’t integrate complex APIs themselves.

You create a service where they feed you their content (via an integration you build, or by simply submitting it), you run it through Google Perspective API, and you return moderated content or flags.

You charge a fee for this service, covering your hosting and development costs and adding your margin.

This works well for platforms that need help but aren’t large enough for enterprise solutions.

- Build and Sell Moderation Plugins/Integrations:

Many popular platforms (WordPress, Discourse, custom-built sites) could benefit from better moderation.

You can develop plugins or modules that integrate Google Perspective API into these platforms.

Sell the plugin code or offer it as a subscription service.

For example, a WordPress plugin that automatically checks comments using Perspective API before they are published.

Or a Discord bot that uses the API to moderate chat messages.

There’s a market for tools that add powerful AI capabilities to existing systems easily.

- Improve Your Own Platform’s Value Proposition:

If you use Google Perspective API to make your own online platform cleaner and safer, that’s a direct boost to your business.

A platform known for being free of spam and toxicity attracts and retains more users.

More users mean more engagement, which can translate to higher ad revenue, more subscriptions, or simply a more valuable community.

Think of it as investing in platform quality, which pays off financially.

A clean environment makes your platform more desirable than competitors who struggle with moderation.

- Offer Consulting on AI Moderation:

Businesses are increasingly looking into AI for moderation but don’t know where to start.

If you become an expert in using and integrating tools like Google Perspective API, you can offer consulting services.

Help companies assess their moderation needs, choose the right AI tools, plan the integration, and set up the rules and thresholds.

Your expertise in this specific niche of AI application is valuable.

Here’s a simple example:

Someone like Jane, who runs a community forum for small business owners, was drowning in spam and off-topic self-promotion.

She couldn’t hire a full-time moderator.

She found a service that used Google Perspective API in the backend.

The service cost her $50 a month.

Before, she spent 2 hours *every day* cleaning up junk.

That’s 60 hours a month.

At her typical hourly rate for consulting, that’s easily $3000 worth of her time.

After implementing the service powered by Google Perspective API, she spent maybe 1 hour *a week* checking the flagged content.

That’s 4 hours a month.

She saved 56 hours of her time.

Time she could use for billable client work or growing her core business.

The $50 service fee resulted in potentially thousands of dollars in saved time and increased productivity.

That’s not direct money *from* the API, but money earned *because* of the efficiency and quality it enabled.

So, it’s not just about using the API; it’s about how you build services, products, or operational efficiencies around it.

Limitations and Considerations

Nothing is perfect.

And while Google Perspective API is powerful, it has limitations.

You need to be aware of these before you commit.

Accuracy is Not 100%: AI models make mistakes.

They can have false positives (flagging harmless content as toxic) and false negatives (missing harmful content).

Sarcasm, cultural nuances, slang, and rapidly evolving language can confuse the models.

A comment intended as a joke might get flagged.

A subtly abusive comment might slip through.

This is why human oversight is still needed, especially for actions like banning users.

AI assists; it doesn’t replace human judgment entirely, at least not yet.

Bias in Training Data: AI models learn from data.

If the data used to train Google Perspective API has biases (e.g., reflects societal biases against certain groups), the API might inadvertently flag content related to those groups more often.

Jigsaw has published research on measuring and reducing this kind of unintended bias, but it remains a known challenge in AI.

Be mindful of potential bias and monitor how the API performs across different topics and communities.
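A simple way to start monitoring is to compare flag rates across groups of content you label yourself, such as topics, communities, or languages. A different rate doesn’t prove bias, since the content itself may differ. It does tell you where to pull samples for human review. Here’s a minimal sketch. The function name, the record format, and the sample scores are hypothetical.

```python
from collections import defaultdict


def flag_rates(records, threshold=0.6):
    """Share of items per group scoring at or above `threshold`.

    `records` is an iterable of (group, score) pairs; the group labels come
    from your own tagging, not from the API.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, score in records:
        totals[group] += 1
        if score >= threshold:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


# Made-up scores for illustration.
sample = [("gaming", 0.7), ("gaming", 0.2), ("cooking", 0.1), ("cooking", 0.3)]
print(flag_rates(sample))  # {'gaming': 0.5, 'cooking': 0.0}
```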

Language Limitations: Support varies by language and by attribute.

The core attributes like Toxicity are available in a range of languages. Experimental attributes (including Spam) have been English-only, and performance can differ between languages.

If your platform is primarily in a language other than English, check the current language support carefully.

Integration Requires Technical Expertise: This isn’t a tool your average user can install and run.

It’s an API designed for developers.

You need programming skills to integrate it into your platform, send requests, receive and process the responses, and build the logic for moderation actions based on the scores.

There’s a learning curve if your team isn’t familiar with API integrations and machine learning concepts.

Defining Thresholds: Setting the right score thresholds for action (e.g., at what score do you hide a comment?) requires experimentation and careful consideration of your community’s norms and rules.

Setting thresholds too high lets too much bad content through.

Setting them too low results in over-moderation and false positives, frustrating users.

This tuning process takes time and monitoring.
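One way to tune thresholds is to have moderators label a sample of real content, then count how each candidate threshold would have performed on it. Here’s a minimal sketch. The function names and the labeled sample are hypothetical.

```python
def confusion_at(threshold, labeled):
    """Count outcomes if everything scoring >= threshold were flagged.

    `labeled` is a list of (score, is_harmful) pairs, where is_harmful comes
    from your own moderators' judgement on a sample of real content.
    """
    tp = fp = fn = tn = 0
    for score, is_harmful in labeled:
        flagged = score >= threshold
        if flagged and is_harmful:
            tp += 1          # correctly flagged
        elif flagged:
            fp += 1          # false positive: harmless but flagged
        elif is_harmful:
            fn += 1          # false negative: harmful but missed
        else:
            tn += 1          # correctly let through
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}


def sweep(labeled, thresholds=(0.5, 0.6, 0.7, 0.8, 0.9)):
    """Evaluate several candidate thresholds on the same labeled sample."""
    return {t: confusion_at(t, labeled) for t in thresholds}


labeled = [(0.95, True), (0.75, True), (0.65, False), (0.30, False), (0.55, True)]
for threshold, counts in sweep(labeled, (0.6, 0.8)).items():
    print(threshold, counts)
# 0.6 {'tp': 2, 'fp': 1, 'fn': 1, 'tn': 1}
# 0.8 {'tp': 1, 'fp': 0, 'fn': 2, 'tn': 2}
```

Raising the threshold from 0.6 to 0.8 removes the false positive but adds a false negative. That is the trade-off described above.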

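Quotas at Scale: Perspective API has been free to use, but it is rate-limited by a per-project requests-per-second quota.

At very high volumes you’ll need to request a higher quota and build queuing or retry logic for traffic bursts.

Factor this into your planning, and make sure the value you get (cleaner platform, saved human hours) outweighs the engineering effort.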

Understanding these limitations is key.

Google Perspective API is a powerful tool, but it’s one piece of a larger moderation strategy.

It works best when combined with human review, clear community guidelines, and user reporting tools.

It automates the obvious, highlights the questionable, and lets your human team focus on the complex decisions.

Manage your expectations – it’s not a magic bullet that solves all moderation problems instantly.

Final Thoughts

So, where does that leave us with Google Perspective API?

If you’re running an online platform or managing user-generated content and you’re fighting a losing battle against spam and toxicity, you need to look at AI.

And Google Perspective API is one of the most robust, well-supported options available specifically for this job.

It’s not a simple tool.

It requires integration.

It requires thought about how you’ll use the scores it provides.

But the potential benefits?

Huge.

Saving countless hours of manual moderation.

Creating a safer, cleaner, more inviting space for your users.

Protecting your brand reputation.

Scaling your moderation efforts without needing a massive team.

The dedicated “Spam” attribute is a direct attack on one of the most annoying problems online.

The granular toxicity scores help you handle the nuanced problem of harmful speech.

Is it perfect? No.

AI still has its blind spots.

You’ll still need humans in the loop.

But it takes the grunt work.

It handles the sheer volume.

It frees your team to focus on the trickier stuff and on building your community.

For platforms facing significant Security and Moderation challenges, particularly related to Spam Detection and Content Moderation, investing in integrating Google Perspective API is a smart move.

It’s about working smarter, not just harder.

It’s about using technology to solve problems that technology helped create (like the scale of online content).

My recommendation?

If you fit the user profile – you have significant user-generated content and moderation is a pain point – explore it.

Start with the free tier.

Get your development team to do a proof of concept.

Send some of your real content through the API.

See the scores.

Understand how it interprets your specific type of content.

Figure out the thresholds that make sense for your community guidelines.

Then, build it into your workflow incrementally.

Start by flagging content for review.

Then maybe auto-hiding the most egregious stuff.

Monitor its performance.

Adjust your rules.

It’s an ongoing process, but the potential upside in terms of platform health and operational efficiency is immense.
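That incremental rollout can be expressed as a mode switch. Moving from review-only to auto-hiding then becomes a configuration change rather than a rewrite. Here’s a minimal sketch. The mode names and the 0.9/0.6 thresholds are hypothetical placeholders.

```python
from enum import Enum


class Mode(Enum):
    FLAG_ONLY = "flag_only"  # stage 1: nothing is hidden automatically
    AUTO_HIDE = "auto_hide"  # stage 2: hide the most egregious content


def decide(score, mode, hide_at=0.9, flag_at=0.6):
    """Return 'hide', 'flag', or 'publish' for one comment's score."""
    if mode is Mode.AUTO_HIDE and score >= hide_at:
        return "hide"
    if score >= flag_at:
        return "flag"  # queued for a human moderator
    return "publish"


print(decide(0.95, Mode.FLAG_ONLY))  # flag
print(decide(0.95, Mode.AUTO_HIDE))  # hide
```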

Don’t keep fighting spam and toxicity with outdated manual processes.

Leverage AI.

Leverage Google Perspective API.


Frequently Asked Questions

1. What is Google Perspective API used for?

Google Perspective API is used to analyse text, such as comments or posts, and score it based on perceived impact on a conversation, specifically focusing on toxicity and other harmful attributes like insults, threats, and spam. It helps platforms automate content moderation.

2. Is Google Perspective API free?

Google Perspective API has been offered free of charge. You need a Google Cloud project and an API key, and usage is limited by a requests-per-second quota that you can ask to have raised. Terms can change, so check the official documentation for the current details.

3. How does Google Perspective API compare to other AI tools?

Google Perspective API is specifically tuned for detecting toxicity and harmful attributes in text, unlike more general-purpose language models. It’s designed for high-volume, real-time content moderation, and it offers attributes like ‘Spam’ (listed as experimental) that are highly relevant for Security and Moderation tasks.

4. Can beginners use Google Perspective API?

The Google Perspective API is designed for developers and requires programming knowledge to integrate into existing platforms or build applications around it. It’s not a simple drag-and-drop tool for beginners without technical skills.

5. Does the content created by Google Perspective API meet quality and optimization standards?

Google Perspective API doesn’t create content; it analyses existing text. Its purpose is to help improve the *quality* of user-generated content on a platform by identifying and flagging or removing low-quality, spammy, or harmful contributions. It’s a tool for moderation, not content generation.

6. Can I make money with Google Perspective API?

While Google Perspective API itself doesn’t directly earn money, you can leverage its capabilities to make money. This includes offering moderation-as-a-service built on the API, developing and selling plugins that integrate it into platforms, improving your own platform’s value through better moderation, or offering consulting services on AI moderation implementation.