AWS Rekognition Unsafe Content Detection is key for Visual Content Filtering. It boosts efficiency and protects your brand. Get started today and moderate smarter!

Say Goodbye to Manual Visual Content Filtering – Hello AWS Rekognition Unsafe Content Detection

Let’s talk reality.

You’re in the game of online platforms, marketplaces, social media.

Content is flying in from everywhere.

Photos, videos, you name it.

And guess what?

Not all of it is safe for work.

Or safe for your users.

You’ve got a massive problem on your hands: how do you find and stop the bad stuff without spending a fortune or hiring a small army?

Manual moderation?

It’s slow.

It’s expensive.

And frankly, it’s soul-crushing work.

Burnout is real.

Errors happen.

Bad content slips through.

Your brand takes a hit.

Your users lose trust.

It’s a nightmare scenario many face daily.

AI is changing the game.

It’s not just for generating text or images anymore.

AI is stepping into the trenches of Security and Moderation.

Specifically, when it comes to visual content.

Finding that needle in the haystack of millions of images and videos.

That’s where AWS Rekognition Unsafe Content Detection comes in.

It’s not a magic bullet.

But it’s closer than anything else I’ve seen.

This tool is built to tackle the exact problem you’re facing.

Filtering visual content at scale.

Automatically.

Fast.

It identifies inappropriate, offensive, or unwanted content.

Think nudity, violence, hate symbols, and more.

And it does it with machine learning.

This isn’t just another AI gimmick.

It’s a serious tool for serious businesses.

Businesses that need to keep their platforms clean.

Their users safe.

Their reputation solid.

So, how does it work?

What can it really do?

And is it the right fit for your moderation needs?

Let’s break it down.

Table of Contents

- What is AWS Rekognition Unsafe Content Detection?

- Key Features of AWS Rekognition Unsafe Content Detection for Visual Content Filtering

- Benefits of Using AWS Rekognition Unsafe Content Detection for Security and Moderation

- Pricing & Plans

- Hands-On Experience / Use Cases

- Who Should Use AWS Rekognition Unsafe Content Detection?

- How to Make Money Using AWS Rekognition Unsafe Content Detection

- Limitations and Considerations

- Final Thoughts

- Frequently Asked Questions

What is AWS Rekognition Unsafe Content Detection?

Okay, first up, what exactly is this thing?

AWS Rekognition (officially Amazon Rekognition) is an Amazon Web Services tool.

It’s an AI service that analyses images and videos.

It can do a lot of stuff.

Identify objects, people, text, activities.

Recognise faces.

But one of its most powerful capabilities is detecting unsafe content.

That’s the part we’re focusing on.

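“Unsafe Content Detection” is Rekognition’s content moderation model. You call it through the DetectModerationLabels API for images and the StartContentModeration and GetContentModeration APIs for stored videos.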

It’s trained on massive datasets.

Its job?

To spot the kinds of content that commonly break community guidelines.

Think about it.

Nudity, sexually suggestive content, graphic violence, hate symbols, drug use, gambling.

It’s built for platforms that host user-generated content.

Social networks.

Online marketplaces.

Gaming platforms.

Dating apps.

Anywhere people can upload images or videos.

Its target audience is pretty clear: anyone responsible for moderating visual content at scale.

Developers building moderation pipelines.

Moderation teams trying to handle huge volumes.

Businesses that need to protect their brand reputation.

Legal and compliance teams worried about regulations.

It returns a confidence score for each category of unsafe content it detects.

It doesn’t just say “this is bad.”

It says “this looks like nudity with 95% confidence.”

Or “this might be violence with 70% confidence.”

This allows for nuanced handling.

You can set thresholds.

Automatically remove content above a certain score.

Send content that scores below that line, but above a lower floor, to human review.

It’s a crucial layer in a robust moderation strategy.

It automates the heavy lifting.

Frees up human moderators for the complex edge cases.

It’s not a human replacement.

It’s a human superpower.

Key Features of AWS Rekognition Unsafe Content Detection for Visual Content Filtering

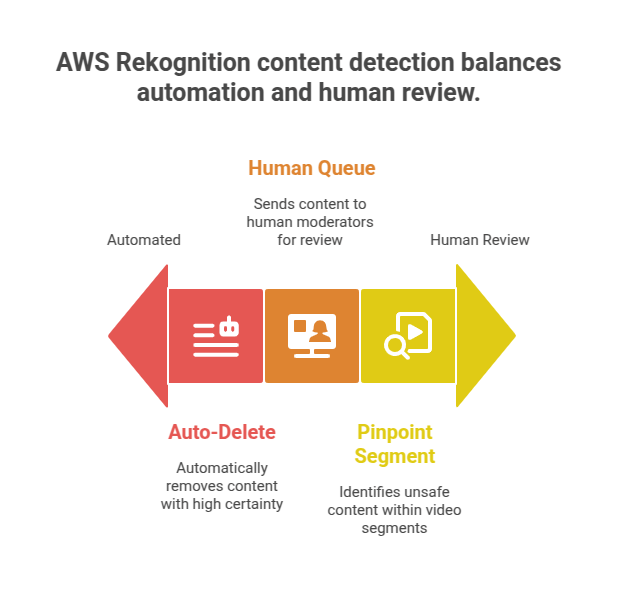

- Detailed Category Classification: This isn’t a blunt instrument. It classifies detected unsafe content into specific categories. We’re talking explicit nudity, suggestive content, graphic violence, physical violence, hate symbols, etc. The labels are hierarchical: each specific label (say, graphic violence) sits under a broader top-level category (violence), and the response tells you which parent each label belongs to. For Visual Content Filtering, this granularity is gold. You can apply different rules to different types of content. High confidence graphic violence? Auto-delete. Low confidence suggestive image? Send to a human queue. It gives you control. You’re not just flagging “bad stuff”; you’re identifying exactly what kind of bad stuff it is. This helps you refine your policies and spot trends in content abuse on your platform.

- Confidence Scores: Every detection comes with a confidence score. A percentage. How sure is the AI that this content matches a specific unsafe category? Labels below a minimum confidence (50% unless you set the MinConfidence parameter) aren’t returned at all. This is crucial for building a workflow. High confidence (say, 95%+) can trigger automatic actions: removal, user warning. Lower confidence (maybe 60-95%) can send the image or video segment to a human moderator for review. This saves your human team from sifting through millions of obvious violations. They focus on the trickier cases the AI isn’t 100% sure about. It makes your moderation resources go way further. You filter based on risk tolerance.

- Video Analysis: It’s not just images. AWS Rekognition can analyse stored videos asynchronously (H.264-encoded MPEG-4 or MOV files in Amazon S3). For live streams, a common pattern is to sample frames and send them to the image API. This is huge for platforms with video content. It identifies unsafe content within specific segments of the video. You get timestamps. This means you don’t have to remove an entire hour-long video because of 10 seconds of policy violation. You can pinpoint the exact moment. This makes content moderation less disruptive for legitimate creators. It also allows for more efficient human review: reviewers jump right to the flagged segment. Because jobs run asynchronously, it scales with your video volume.
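Stored-video results come back as individual detections, each with a `Timestamp` in milliseconds; depending on the API version, a detection may also carry start and end times, in which case this step may be unnecessary. As a minimal sketch, assuming you only have per-detection timestamps, the hypothetical helper `merge_segments` groups detections of the same label that fall within `max_gap_ms` of each other into one segment, and the hypothetical `mmss` formats a timestamp so a reviewer can jump straight to it.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def merge_segments(
    detections: Iterable[Tuple[int, str]], max_gap_ms: int = 1000
) -> Dict[str, List[Tuple[int, int]]]:
    """Group (timestamp_ms, label) detections into (start_ms, end_ms) spans per label."""
    by_label: Dict[str, List[int]] = defaultdict(list)
    for timestamp_ms, label in detections:
        by_label[label].append(timestamp_ms)

    segments: Dict[str, List[Tuple[int, int]]] = {}
    for label, stamps in by_label.items():
        stamps.sort()
        spans = [[stamps[0], stamps[0]]]
        for ts in stamps[1:]:
            if ts - spans[-1][1] <= max_gap_ms:
                spans[-1][1] = ts  # close to the previous hit: extend the current span
            else:
                spans.append([ts, ts])  # gap too large: start a new span
        segments[label] = [(start, end) for start, end in spans]
    return segments


def mmss(ms: int) -> str:
    """Format milliseconds as m:ss for a reviewer jumping to a timestamp."""
    minutes, seconds = divmod(ms // 1000, 60)
    return f"{minutes}:{seconds:02d}"
```

The gap tolerance is a judgment call: too small and one continuous scene splits into many segments, too large and separate incidents merge into one.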

Benefits of Using AWS Rekognition Unsafe Content Detection for Security and Moderation

Alright, so you know what it is and some of its features.

But what’s the real payoff?

Why should you actually use it for your Security and Moderation efforts?

First off, speed.

AI is ridiculously fast.

It can scan thousands, even millions, of images and hours of video in the time it takes a human to review a few dozen.

This means you can detect and act on harmful content almost instantly.

Before it goes viral.

Before it causes real damage to users or your brand.

That’s a massive win.

Second, scale.

Your platform is growing?

User-generated content volume is exploding?

Hiring more moderators is expensive and slow.

AWS Rekognition scales with you.

You pay for what you use.

Need to process ten thousand images today?

A million tomorrow?

The infrastructure is there, ready, though it’s worth checking your account’s API request quotas before a big jump in volume.

No hiring bottlenecks.

No training periods.

Third, consistency.

Human judgment can vary.

What one moderator flags, another might miss.

Or interpret differently based on fatigue or bias.

AI, while not perfect, applies the same rules, the same model, to every piece of content.

This leads to more consistent application of your content policies.

Users know what to expect.

Moderation decisions are more predictable.

Fourth, cost reduction.

Automating the bulk of the review process significantly reduces the need for large human moderation teams.

Human moderators are valuable, but they are an expensive resource.

By letting AI handle the clear-cut cases, you can reduce operational costs.

Your human team can focus on the complex, nuanced, or borderline cases where AI needs help.

Fifth, improved moderator well-being.

Reviewing harmful content is mentally taxing.

Exposure to graphic material can lead to stress, burnout, and lasting psychological harm.

By filtering out the most explicit and harmful content automatically, you reduce the volume of disturbing material your human moderators are exposed to.

This improves their working conditions and can lead to less turnover.

Finally, enhanced user trust and safety.

A clean platform is a safe platform.

When users feel safe, they engage more.

They trust your brand.

Removing harmful content quickly protects your users, especially vulnerable ones, from exposure to disturbing material.

This builds a positive community and strengthens your platform’s reputation.

These aren’t small benefits.

They are fundamental to running a successful and responsible online platform today.

Pricing & Plans

Alright, the burning question: how much does this cost?

AWS services, including Rekognition, typically follow a pay-as-you-go model.

There’s no big upfront license fee.

You pay based on your usage.

Specifically for Unsafe Content Detection, pricing is based on the number of images analysed or the duration of video analysed.

For images, you’re billed per image analysed, at rates that drop in tiers as your monthly volume grows.

For videos, it’s priced per minute of video processed.

There’s a free tier.

This is crucial.

You can try it out, test it with your own content, and see how it performs before committing any significant cash.

The free tier usually includes a certain number of image analyses and video minutes per month for an introductory period after you sign up.

This is more than enough to build a proof of concept or use for a small-scale application.

Beyond the free tier, the cost per image or video minute is quite low.

It becomes very cost-effective at scale compared to manual review.

AWS also offers volume discounts.

The more you process, the cheaper the per-unit cost becomes.

This makes it attractive for platforms with massive content volume.
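To estimate a monthly bill under tiered per-unit pricing, a small calculator helps. This is a sketch, not AWS’s billing logic: the tier sizes and prices below are placeholders (not current AWS rates), the hypothetical `tiered_cost` function simply takes the free allowance off the top, and you would plug in the figures from the official pricing page for your region.

```python
from decimal import Decimal
from typing import List, Optional, Tuple

# A tier is (units covered by this tier, price per unit); None means "all remaining units".
Tier = Tuple[Optional[int], Decimal]

# PLACEHOLDER rates for illustration only; use the AWS pricing page for real numbers.
EXAMPLE_IMAGE_TIERS: List[Tier] = [
    (1_000_000, Decimal("0.001")),
    (4_000_000, Decimal("0.0008")),
    (None, Decimal("0.0006")),
]


def tiered_cost(units: int, tiers: List[Tier], free_units: int = 0) -> Decimal:
    """Charge units against successive price tiers after removing a free allowance."""
    billable = max(units - free_units, 0)
    total = Decimal("0")
    for tier_size, price in tiers:
        if billable <= 0:
            break
        # Fill this tier first; anything left spills into the next, cheaper tier.
        in_tier = billable if tier_size is None else min(billable, tier_size)
        total += in_tier * price
        billable -= in_tier
    return total
```

The same function covers video minutes with a single per-minute tier, for example `tiered_cost(minutes, [(None, Decimal("0.10"))])`, where the rate is again a placeholder.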

How does this compare to alternatives?

Other cloud providers have similar AI moderation services, such as SafeSearch detection in Google Cloud Vision and Azure AI Content Safety (the successor to Azure Content Moderator).

Their pricing models are broadly similar – pay-per-use, often with a free tier.

Proprietary or in-house solutions?

Building your own AI moderation system from scratch is incredibly expensive and complex.

It requires deep expertise in machine learning, massive datasets for training, and significant computational resources.

Outsourcing to a dedicated moderation service?

This can be faster to get started but might lack the customisation and direct integration you get with a cloud service like Rekognition.

And the cost per item reviewed is often higher than using an automated AI service.

AWS Rekognition positions itself as a highly scalable, cost-effective, and developer-friendly option.

You can integrate it directly into your existing workflows using APIs.

You only pay for what you use, which is great for variable workloads.

For most businesses dealing with user-generated content, the pricing model makes sense.

Start small with the free tier.

Scale up as your needs grow, and the per-unit cost decreases.

It’s designed to be accessible and grow with your business.

Hands-On Experience / Use Cases

Alright, let’s get practical.

How does this actually play out in the real world?

Imagine you run a rapidly growing online marketplace.

Users are uploading thousands of product images every day.

Your small moderation team is drowning.

They’re missing things.

You’re seeing listings with inappropriate images pop up, which is bad for business and trust.

Before AWS Rekognition, you had humans manually eyeball every single image.

Slow.

Inefficient.

Expensive.

With Rekognition, you integrate its API into your upload pipeline.

Every time a user uploads an image, it’s sent to Rekognition for analysis.

The API call is simple.

You get a response back in seconds.

This response includes a list of detected unsafe content categories and confidence scores.
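Here is a minimal sketch of that upload-time call in Python with boto3, assuming the uploaded image is already stored in S3. `detect_moderation_labels` is the real boto3 method for the image API; the wrapper `moderate_s3_image`, the `make_client` helper and its default region are illustrative choices. The client is passed in so the wrapper can be tested with a fake object, and boto3 is only imported when you actually build a real client.

```python
from typing import Any, Dict, List


def moderate_s3_image(
    client: Any, bucket: str, key: str, min_confidence: float = 50.0
) -> List[Dict[str, Any]]:
    """Send one S3-stored image to DetectModerationLabels and return its labels."""
    response = client.detect_moderation_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )
    # Each label has Name, Confidence (0-100) and ParentName ("" for top-level).
    return response["ModerationLabels"]


def make_client(region_name: str = "us-east-1") -> Any:
    """Build a real Rekognition client; needs boto3 and configured AWS credentials."""
    import boto3  # imported here so the wrapper above can be used without boto3

    return boto3.client("rekognition", region_name=region_name)
```

In production you would call `moderate_s3_image(make_client(), bucket, key)` from your upload handler. If the image arrives as raw bytes instead of an S3 object, the API also accepts `Image={"Bytes": ...}`.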

You set rules:

If nudity score > 90%, reject the image automatically and notify the user.

If violence score > 85%, same action.

If suggestive score is between 60% and 85%, flag the image for human review.
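Those rules translate into a small rule table. This is a sketch under assumptions: the label names (`Explicit Nudity`, `Violence`, `Suggestive`) match one version of Rekognition’s moderation taxonomy and may differ in the version you call, the boundary convention (a rule fires when `low < score <= high`) is a choice, and `Rule`, `category_scores`, and `decide` are hypothetical names. Each label’s confidence is also credited to its parent category, so a specific child label can trigger a rule written for its broader category, and the most severe triggered action wins.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass(frozen=True)
class Rule:
    category: str
    low: float   # the rule fires when low < score <= high
    high: float
    action: str  # "reject" or "review"


# The three rules from the text. Label names are assumptions: they depend on
# which Rekognition moderation model version your account calls.
RULES: List[Rule] = [
    Rule("Explicit Nudity", 90.0, 100.0, "reject"),
    Rule("Violence", 85.0, 100.0, "reject"),
    Rule("Suggestive", 60.0, 85.0, "review"),
]

SEVERITY = {"approve": 0, "review": 1, "reject": 2}


def category_scores(labels: List[Dict[str, Any]]) -> Dict[str, float]:
    """Max confidence per label name, also credited to each label's ParentName."""
    scores: Dict[str, float] = {}
    for label in labels:
        for name in (label["Name"], label.get("ParentName", "")):
            if name:
                scores[name] = max(scores.get(name, 0.0), label["Confidence"])
    return scores


def decide(labels: List[Dict[str, Any]], rules: List[Rule] = RULES) -> str:
    """Return the most severe action any rule triggers, or "approve" if none do."""
    scores = category_scores(labels)
    decision = "approve"
    for rule in rules:
        score = scores.get(rule.category)
        if score is not None and rule.low < score <= rule.high:
            if SEVERITY[rule.action] > SEVERITY[decision]:
                decision = rule.action
    return decision
```

As written, the rules leave a gap: a Suggestive score above 85% matches nothing and is approved, so in practice you would probably add a rule for it.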

This transforms the workflow.

The AI handles the vast majority of obvious policy violations.

The human moderators now only see the borderline cases.

Their queue shrinks dramatically.

They can make faster, more focused decisions on the tricky stuff.

They are no longer spending hours looking at clear violations.

Another use case: a video-sharing platform.

Users upload hours of content daily.

Finding policy violations inside long videos manually is nearly impossible.

Rekognition’s video analysis capability is a game-changer here.

You submit the video to Rekognition.

It processes the video asynchronously and, if you configure it, notifies you through Amazon SNS when the job finishes.

It returns a list of detected unsafe content segments with timestamps.

“Explicit Nudity detected from 0:45 to 0:52 with 98% confidence.”

“Graphic violence detected from 2:10 to 2:15 with 90% confidence.”
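A minimal sketch of the submit-and-collect step with boto3, assuming the video is already in S3. `start_content_moderation` and `get_content_moderation` are the real boto3 methods for the stored-video API, and results are paginated with `NextToken`; the wrapper `moderate_stored_video` and its parameters are illustrative. It polls for simplicity, whereas a production pipeline would more likely wait for the SNS completion notification. The client and the sleep function are injected so the loop can be tested without AWS.

```python
import time
from typing import Any, Callable, Dict, List, Optional


def moderate_stored_video(
    client: Any,
    bucket: str,
    key: str,
    min_confidence: float = 50.0,
    poll_seconds: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[Dict[str, Any]]:
    """Start a content-moderation job on an S3 video and return every detection."""
    job_id = client.start_content_moderation(
        Video={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )["JobId"]

    detections: List[Dict[str, Any]] = []
    next_token: Optional[str] = None
    while True:
        request: Dict[str, Any] = {"JobId": job_id, "SortBy": "TIMESTAMP"}
        if next_token:
            request["NextToken"] = next_token
        page = client.get_content_moderation(**request)
        status = page["JobStatus"]
        if status == "IN_PROGRESS":
            sleep(poll_seconds)  # still running: wait, then ask again
            continue
        if status == "FAILED":
            raise RuntimeError(page.get("StatusMessage", "content moderation job failed"))
        # SUCCEEDED: collect this page and follow NextToken until no pages remain.
        detections.extend(page.get("ModerationLabels", []))
        next_token = page.get("NextToken")
        if not next_token:
            return detections
```

Each returned detection holds a `Timestamp` in milliseconds and a nested `ModerationLabel` with `Name`, `Confidence`, and `ParentName`, which is what you would use to send a reviewer to the right moment.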

This allows you to take precise action.

With your own video-processing step, you can automatically blur or cut just that segment of the video.

Or send the human moderator directly to that timestamp for review.

It saves countless hours of manual scrubbing through video.

The results?

Much faster detection of harmful content.

Significantly reduced workload for human moderators.

Improved consistency in moderation decisions.

A cleaner, safer platform for users.

These aren’t hypothetical.

Companies use AWS Rekognition for exactly these kinds of scenarios every day.

It’s a tool built for the reality of moderating content at scale in the modern web.

Who Should Use AWS Rekognition Unsafe Content Detection?

So, is this tool for everyone?

Probably not.

If you run a simple blog where you upload all the images yourself, and they’re all pictures of cats, you probably don’t need AI moderation.

But if your platform involves user-generated visual content…

Then you need to pay attention.

Here’s who AWS Rekognition Unsafe Content Detection is really for:

Social Media Platforms: Obvious one. High volume of images and videos. Need to enforce community guidelines rigorously. Protecting users is paramount.

Online Marketplaces: Millions of product images uploaded by third-party sellers. Need to prevent illegal or inappropriate items from being listed. Brand reputation is directly tied to the safety of listings.

Gaming Platforms: User profiles, in-game content creation, live streaming. Visual communication is key. Need to moderate user-uploaded avatars, screenshots, or stream content for violations like hate symbols or explicit material.

Dating Apps: Profile pictures and shared images. Need to quickly identify nudity or other inappropriate content to ensure a safe environment for users. User safety is core to the service.

Hosting Providers/CDNs: Companies storing or serving large volumes of user files. Need to scan for illegal or harmful content proactively to avoid legal issues and maintain service integrity.

Community Forums with Image/Video Uploads: Any forum or community site where users can share visual media. Need automated checks to keep discussions clean and on-topic, preventing the spread of harmful content.

EdTech Platforms: Platforms where students might upload projects or share media. Need to ensure content is appropriate for educational environments and protects minors.

Any App or Service Accepting User-Generated Visual Content: If your business relies on users providing images or videos, you have a moderation challenge. From fitness apps with progress photos to review sites with visual feedback, unsafe content can appear anywhere.

Essentially, if you have a scalable problem with Visual Content Filtering and need an automated, cost-effective way to tackle it, Rekognition is a strong candidate.

It’s particularly well-suited for developers and technical teams who can integrate its APIs into their existing infrastructure.

You don’t need to be an AI expert to use it, but you do need technical capability to implement it.

It’s less of a plug-and-play tool for small creators and more of a foundational service for platforms and large-scale applications.

How to Make Money Using AWS Rekognition Unsafe Content Detection

Okay, forget just using it internally.

Can you actually make money *with* AWS Rekognition Unsafe Content Detection?

Absolutely.

If you’re savvy and understand the pain points of businesses dealing with content moderation, this tool opens up opportunities.

Here’s how you could potentially turn AWS Rekognition into a revenue stream:

- Offer Content Moderation as a Service: This is the most direct route. Many small to medium-sized platforms, or even larger ones without dedicated in-house AI teams, struggle with moderation. You can build a service on top of AWS Rekognition. Offer a platform where businesses upload their images/videos, and your service uses Rekognition to scan for unsafe content based on their policy rules. You provide them with reports, flagged content, or automated removal services. You charge them per image, per video minute, or a subscription fee based on volume. You act as the expert integrator and service provider.

- Build and Sell Moderation Plugins or Integrations: Many platforms use existing content management systems (CMS), forum software, or e-commerce platforms. These platforms might not have robust built-in AI moderation. You can build plugins or extensions that connect these platforms directly to AWS Rekognition. For example, a WordPress plugin that scans images uploaded to blog posts or a Shopify app that checks product images. You sell these plugins or charge a subscription for access.

- Provide Consulting and Implementation Services: AWS Rekognition is powerful, but integrating it requires technical expertise. Businesses might need help setting up the service, defining moderation policies, building the automated workflows, and integrating it into their existing applications. You can offer your services as an expert. Help them design their moderation architecture, write the necessary code, and deploy the solution on AWS. You charge for your time and expertise. This is especially valuable for companies new to cloud services or AI.

Think about the value you provide.

You’re saving businesses massive headaches and costs associated with manual moderation.

You’re protecting their brand and users.

You’re enabling them to scale without scaling their moderation team proportionally.

What you charge is a fraction of the cost they’d incur otherwise.

Let’s say you build a service for small e-commerce sites.

They upload 10,000 product images a month.

Rekognition costs you a fraction of a cent per image.

You charge the e-commerce site a flat fee or per-image fee that covers your Rekognition costs, your platform costs, and your profit margin.

Maybe you charge $0.05 per image scan.

That’s $500 a month per client for scanning 10,000 images.

If Rekognition costs you, say, $0.001 per image, your cost is $10.

That leaves a healthy margin for your service.
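The arithmetic above as a tiny sketch. The $0.05 price and $0.001 cost are the article’s illustrative figures, not quoted AWS rates, and `monthly_margin` is a hypothetical helper; `Decimal` avoids the rounding surprises of floating-point money math. The margin it returns is before your platform, storage, and support costs.

```python
from decimal import Decimal
from typing import Dict


def monthly_margin(
    images: int, price_per_image: Decimal, cost_per_image: Decimal
) -> Dict[str, Decimal]:
    """Revenue, Rekognition cost, and gross margin for one client in one month."""
    revenue = images * price_per_image
    api_cost = images * cost_per_image
    return {"revenue": revenue, "api_cost": api_cost, "margin": revenue - api_cost}


# The worked example from the text: 10,000 images, charged at $0.05, costing $0.001.
example = monthly_margin(10_000, Decimal("0.05"), Decimal("0.001"))
```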

Scale that to dozens or hundreds of clients.

The key is to package the raw power of Rekognition into an easy-to-consume service or product for businesses that need moderation but don’t want to build the infrastructure themselves.

You’re selling the solution, not just the tool.

Limitations and Considerations

Okay, let’s keep it real.

No tool is perfect.

AWS Rekognition Unsafe Content Detection is powerful, but it has limitations you need to be aware of.

First, Accuracy Isn’t Perfect. It’s AI. It makes mistakes. It might misclassify something. It might miss something. It gives confidence scores for a reason. Content that is heavily artistic, abstract, or cleverly disguised might not be caught. Edge cases are challenging for any AI. This is why human review is still essential, especially for content flagged with lower confidence scores.

Second, Defining Policies is Up to You. Rekognition identifies categories of unsafe content. But deciding what confidence score is “unsafe” for *your* platform is your responsibility. A platform for artists might have a different threshold for nudity than a platform for families. You need clear internal policies to map Rekognition’s output to your specific rules.

Third, Context Matters, and AI Struggles with Context. An image of a historical statue might contain nudity but isn’t violating policy. An image of a medical procedure might contain graphic content but is legitimate. Rekognition flags the visual elements. It doesn’t understand the context or intent behind the image or video. This is another crucial area where human review is necessary to avoid false positives.

Fourth, Integration Requires Technical Effort. This isn’t a consumer app you download and click “Scan.” It’s a cloud service with APIs. You need developers to integrate it into your platform’s backend, set up the data flow, handle the responses, and build the associated workflows (like sending items for human review). There’s an upfront development cost and ongoing maintenance.

Fifth, Cost Can Scale Rapidly with Volume. While the per-unit cost is low, a sudden surge in user uploads can make your costs jump significantly. Monitor your usage, budget accordingly, and set up safeguards such as AWS Budgets alerts or a cap on API calls to prevent unexpected spending.
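One application-level safeguard is a hard cap on how many calls you make per month. The sketch below is hypothetical (`MonthlyCallBudget` is not an AWS feature) and keeps its counter in memory, so it only works within a single process; a real deployment would store the count somewhere shared, such as a database, and pair it with AWS Budgets alerts.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional, Tuple


@dataclass
class MonthlyCallBudget:
    """Hypothetical in-memory cap on Rekognition API calls per calendar month."""

    max_calls: int
    _month: Optional[Tuple[int, int]] = field(default=None, init=False)
    _used: int = field(default=0, init=False)

    def try_spend(self, today: date) -> bool:
        """Count one call and return True if under budget; False means defer it."""
        month = (today.year, today.month)
        if month != self._month:
            # A new calendar month has started: reset the counter.
            self._month, self._used = month, 0
        if self._used >= self.max_calls:
            return False
        self._used += 1
        return True
```

When `try_spend` returns False, you might queue the upload for later scanning or hold it for human review rather than publish it unchecked.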

Sixth, Compliance and Regional Differences. What’s considered “unsafe” can vary significantly by country and region. AWS Rekognition provides categories, but you need to ensure your moderation policies align with local laws and cultural norms where your users are located. The AI itself doesn’t automatically adapt to these nuances.

Finally, Potential for Bias. AI models are trained on data. If the training data has biases, the model might exhibit those biases in its classifications. While AWS works to mitigate this, it’s a general concern with AI and something to be aware of when relying on automated systems. Regular auditing of flagged content can help identify potential bias issues.
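A simple starting point for that audit is a reproducible random sample from each flagged category, so rarer categories get looked at rather than only the most common ones. This is a hypothetical sketch (`audit_sample` is an illustrative name); sampling alone doesn’t measure bias, which usually also means comparing error rates across different groups of content or users.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def audit_sample(
    decisions: Sequence[Tuple[str, str]], per_category: int, seed: int = 0
) -> Dict[str, List[str]]:
    """Pick up to `per_category` item IDs from each flagged category for human audit."""
    by_category: Dict[str, List[str]] = defaultdict(list)
    for item_id, category in decisions:
        by_category[category].append(item_id)
    rng = random.Random(seed)  # seeded so an audit batch can be reproduced later
    return {
        category: rng.sample(ids, min(per_category, len(ids)))
        for category, ids in sorted(by_category.items())
    }
```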

Using AWS Rekognition Unsafe Content Detection is a strategic decision.

It’s a powerful tool to automate and scale moderation.

But it works best as part of a comprehensive moderation strategy that still includes human oversight for nuance, context, and tricky edge cases.

Don’t expect it to be a magic “fix all” button.

Expect it to be a force multiplier for your moderation efforts.

Final Thoughts

Look, dealing with unsafe visual content online is hard.

It’s a non-negotiable part of running a responsible platform.

Ignoring it is a recipe for disaster – for your users, your brand, and potentially legally.

Manual moderation simply doesn’t scale in today’s content environment.

You need leverage.

AWS Rekognition Unsafe Content Detection provides that leverage.

It’s not the only tool out there, but it’s one of the most robust and widely used, backed by the power of AWS infrastructure.

It excels at the heavy lifting.

Rapidly scanning massive volumes of images and videos.

Identifying common types of harmful content with high confidence.

Freeing up your human moderators to focus on the difficult decisions where human judgment is irreplaceable.

It helps you react faster to emerging content trends.

Maintain consistency in your policies.

And ultimately, build a safer environment for your users.

Is it worth looking into?

If your platform accepts user-generated visual content, absolutely.

Start with the free tier.

Test it with your own data.

See how well it identifies content against your specific policies.

Figure out the workflow for integrating it and handling the flagged content.

Think about how you’ll combine AI automation with human review.

This tool can dramatically improve your Security and Moderation posture.

It can save you time, money, and stress.

It’s a smart investment for any business serious about content moderation.

Don’t get left behind managing content manually.

The future of content moderation is a smart blend of AI and human expertise.

AWS Rekognition is a key piece of that puzzle.


Frequently Asked Questions

1. What is AWS Rekognition Unsafe Content Detection used for?

It’s primarily used for automatically identifying inappropriate, offensive, or harmful visual content (images and videos) at scale.

This includes categories like nudity, violence, hate symbols, and more.

It helps online platforms, marketplaces, and apps moderate user-generated content efficiently.

2. Is AWS Rekognition Unsafe Content Detection free?

AWS Rekognition operates on a pay-as-you-go model.

However, they offer a free tier that allows you to process a certain number of images and video minutes per month for a trial period.

Beyond the free tier, you pay based on your volume of usage.

3. How does AWS Rekognition compare to other AI tools?

It’s one of several leading cloud-based AI services for content moderation.

Competitors include Google Cloud Vision (SafeSearch detection) and Azure AI Content Safety, which replaces Azure Content Moderator.

AWS Rekognition is known for its detailed classification, scalability, and deep integration within the AWS ecosystem.

The best choice often depends on your existing cloud infrastructure and specific needs.

4. Can beginners use AWS Rekognition Unsafe Content Detection?

Using AWS Rekognition requires some technical knowledge.

It’s accessed via APIs, meaning you need programming skills to integrate it into your application or workflow.

It isn’t a no-code or low-code tool, so non-technical beginners will usually need a developer to set it up.

5. Does the content created by AWS Rekognition Unsafe Content Detection meet quality and optimization standards?

AWS Rekognition doesn’t create content; it analyses existing visual content.

Its purpose is to help you identify content that *doesn’t* meet your quality or safety standards by flagging violations of your content policies.

Its output is structured data (labels and confidence scores) that feeds your moderation decisions.

6. Can I make money with AWS Rekognition Unsafe Content Detection?

Yes, you can.

You can build services, plugins, or offer consulting based on its capabilities.

Many businesses need help with content moderation and are willing to pay for solutions that leverage powerful tools like Rekognition.

You essentially package the AI’s power into a service for clients.