Google Cloud Vision Safe Search Is Built for Security and Moderation – Here’s Why

Alright. Let’s talk about the internet.

Specifically, the images and videos flowing through it every second.

It’s a tidal wave.

And if you’re running a platform, a forum, or any online space where users can upload stuff…

That tidal wave can bring in some nasty junk.

We’re talking harmful content. Explicit stuff. Hate speech. Violence.

Stuff that can ruin your community. Damage your brand. Get you in hot water legally.

Historically, dealing with this meant hiring armies of human moderators.

Tough job. Emotionally draining. Not exactly cheap or fast.

Then came AI.

Not just some tech jargon. Real tools that can look at images and videos. Understand what’s in them. And flag the bad stuff. Fast.

This is where Google Cloud Vision Safe Search walks in.

It’s one of those AI tools built specifically for this problem.

Visual content filtering. Doing it right.

For anyone serious about Security and Moderation, this isn’t just a nice-to-have.

It’s essential.

Let’s break down exactly what it is and why it matters.

Table of Contents

- What is Google Cloud Vision Safe Search?

- Key Features of Google Cloud Vision Safe Search for Visual Content Filtering

- Benefits of Using Google Cloud Vision Safe Search for Security and Moderation

- Pricing & Plans

- Hands-On Experience / Use Cases

- Who Should Use Google Cloud Vision Safe Search?

- How to Make Money Using Google Cloud Vision Safe Search

- Limitations and Considerations

- Final Thoughts

- Frequently Asked Questions

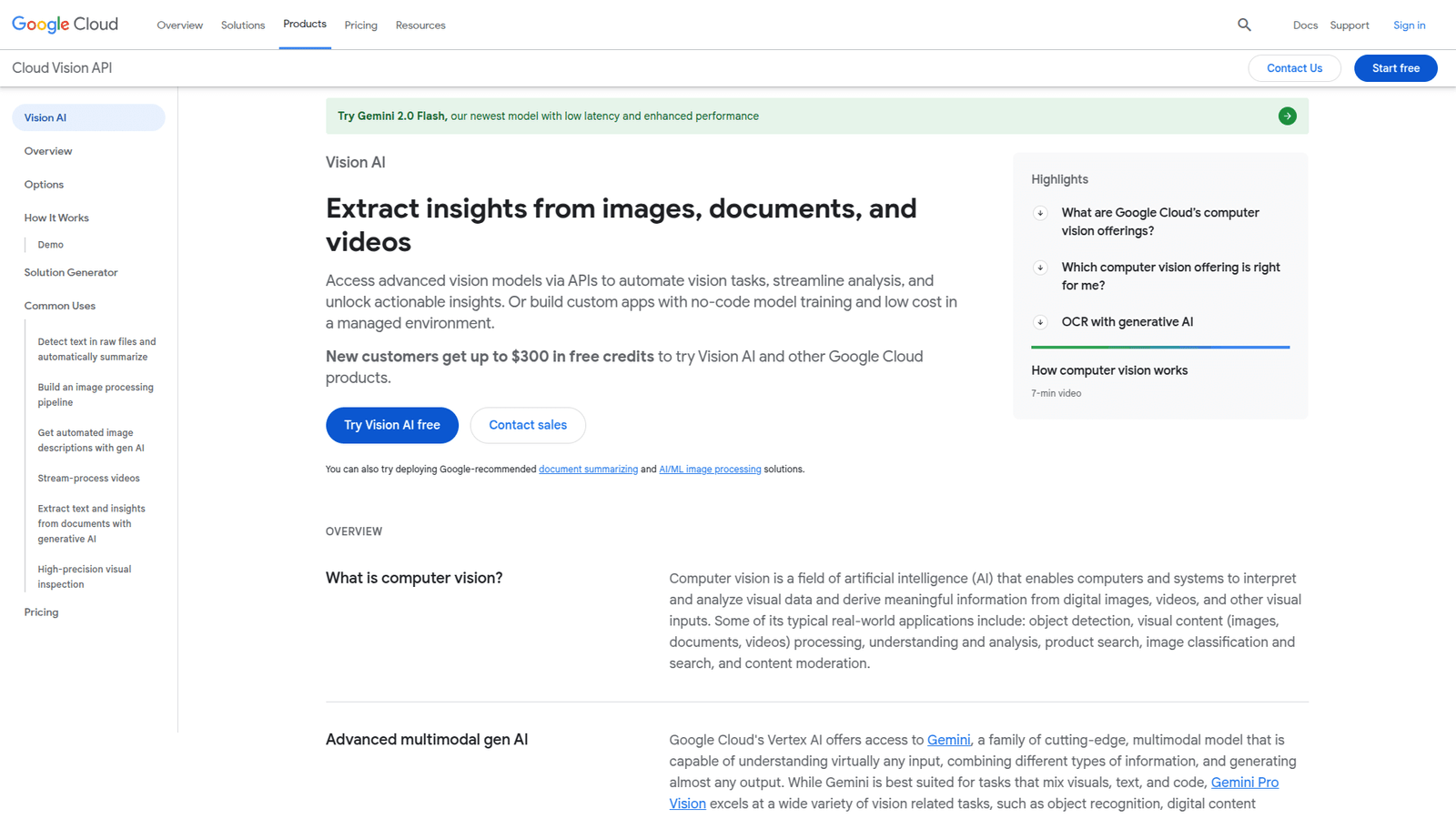

What is Google Cloud Vision Safe Search?

Okay, straight talk. What exactly is Google Cloud Vision Safe Search?

Think of it as an AI assistant that looks at pictures and rates how likely they are to contain content that isn’t safe for general viewing. Deciding what to do with those ratings is up to you.

It’s part of the Google Cloud Vision API, Google’s broader tool for image analysis.

But Safe Search has a specific job.

Its job is to detect various categories of potentially harmful or inappropriate content within images.

For each category it returns a likelihood rating rather than a simple yes or no.

The ratings are “VERY_UNLIKELY”, “UNLIKELY”, “POSSIBLE”, “LIKELY”, and “VERY_LIKELY” (the API can also return “UNKNOWN” when it couldn’t make a call).

These ratings tell you how probable it is that a certain type of inappropriate content is present in the image.
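Because the ratings are ordered, you can treat them like numbers when setting thresholds. The API’s `Likelihood` enum numbers them from 0 (`UNKNOWN`) to 5 (`VERY_LIKELY`). A minimal Python sketch, assuming you already have the rating names as strings (as in the REST API’s JSON); the `Likelihood` class below is our own stand-in that mirrors that ordering, not the client library’s type:

```python
from enum import IntEnum


class Likelihood(IntEnum):
    """Local stand-in mirroring the ordering of the Vision API's Likelihood enum."""
    UNKNOWN = 0
    VERY_UNLIKELY = 1
    UNLIKELY = 2
    POSSIBLE = 3
    LIKELY = 4
    VERY_LIKELY = 5


def parse_likelihood(name: str) -> Likelihood:
    """Turn a rating string such as "POSSIBLE" into a comparable enum member."""
    return Likelihood[name]


def at_least(rating: str, threshold: str) -> bool:
    """True if `rating` is at or above `threshold` on the likelihood scale."""
    return parse_likelihood(rating) >= parse_likelihood(threshold)


print(at_least("LIKELY", "POSSIBLE"))    # True
print(at_least("UNLIKELY", "POSSIBLE"))  # False
```

One design choice to revisit: `UNKNOWN` sorts lowest here, so a plain threshold check treats it as safe. Many teams would rather send `UNKNOWN` to human review.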

Who is this for?

Anyone dealing with user-generated images online. (Video takes extra work, as covered under Limitations.)

Social media platforms. E-commerce sites with product images. Gaming communities. Educational platforms. Forums.

If users can upload pictures, you need this.

It helps automate the first line of defence.

Sorting through millions of images manually? Impossible.

Using Safe Search? Suddenly possible.

It significantly reduces the volume of content human moderators have to review.

They can focus on the edge cases, the trickier stuff the AI rates as “POSSIBLE” or “LIKELY”.

Less burnout for your team. Faster removal of harmful content for your users.

It’s infrastructure for a safer online space.

And for Visual Content Filtering, it’s a core component.

Key Features of Google Cloud Vision Safe Search for Visual Content Filtering

So, what can this thing actually do? How does it help with Visual Content Filtering?

It breaks down potential problems into categories. Gives you granular control.

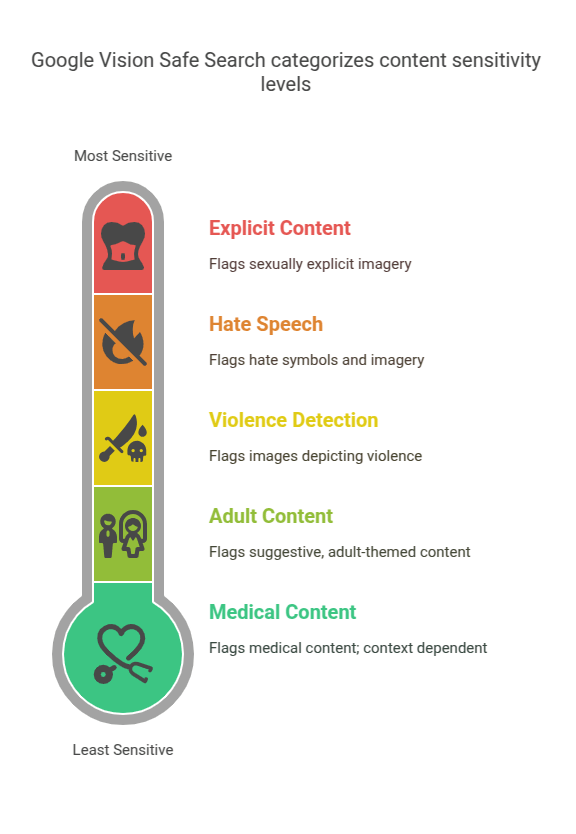

- Adult Content Detection: This is the big one. It checks for sexually explicit imagery: nudity, pornographic images or cartoons, and sexual activity. You get a likelihood rating for it. This is crucial for maintaining a clean platform, especially if you have strict content guidelines. It’s the baseline filter for many platforms. Stops the worst stuff getting through immediately.

Think about dating apps or community forums. This is non-negotiable.

- Violence Detection: It looks for images depicting violence. This could be gore, fighting, weapons used violently, etc. Again, you get a likelihood score. Different platforms have different thresholds for violence, so these scores let you set your own rules. Keeps graphic content off your site, which is vital for user well-being and brand image. Prevents triggering content from reaching users.

Gaming platforms, news sites, forums discussing sensitive topics – they need this.

- Spoof Detection: This category rates the likelihood that an image has been modified from its original version to make it appear funny or offensive. It’s useful for catching doctored photos and mocking edits of real people or events. Note that Safe Search has no dedicated hate-speech or hate-symbol category; hateful imagery has to be caught through other signals (such as label or text detection) and human review.

Any platform with user interaction needs to be vigilant about doctored images and hateful content.

- Medical Content Detection: It flags images that might contain medical procedures, injuries, or anatomical content that some users might find sensitive or disturbing. This is particularly useful for platforms where such imagery is only appropriate in specific contexts (e.g., medical forums, but not general social feeds). Gives you a heads-up on potentially sensitive visuals. Allows for content gating or specific channel moderation.

Health forums or educational sites might allow this, others won’t. You decide based on the score.

- Racy Content Detection: A separate, softer category than Adult. It covers suggestive content that isn’t necessarily explicit, such as skimpy or sheer clothing, strategically covered nudity, or provocative poses. Helps capture a wider range of potentially inappropriate content. Allows for a layered approach to content filtering. Useful for platforms with users of varying ages.

Maybe you allow some suggestive content but draw a line at explicit. This helps you differentiate.

- Control and Thresholds: You don’t just get a “yes” or “no”. You get those likelihood ratings. This means you can define your own tolerance levels. For a children’s platform, you might block anything above “UNLIKELY” for most categories. For a news site, you might only block violence rated “VERY_LIKELY” and let lower ratings such as “POSSIBLE” through. This flexibility is key. It’s not one-size-fits-all filtering. You set the rules based on your community and guidelines.

Your platform, your rules. The tool just gives you the data points.
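Those two example policies can be expressed as plain data: for each category, the lowest rating that triggers a block. A sketch, assuming ratings arrive as strings keyed by the API’s category names (`adult`, `spoof`, `medical`, `violence`, `racy`); the policy names and cut-offs are illustrative only:

```python
LEVELS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]

# Hypothetical policies: the lowest rating at which each category is blocked.
# Categories missing from a policy are never blocked by it.
POLICIES = {
    # "Block anything above UNLIKELY" for every category.
    "kids_platform": {c: "POSSIBLE" for c in ("adult", "spoof", "medical", "violence", "racy")},
    # Only block violence rated VERY_LIKELY; the adult cut-off is illustrative.
    "news_site": {"violence": "VERY_LIKELY", "adult": "LIKELY"},
}


def blocked_categories(annotation: dict[str, str], policy: dict[str, str]) -> list[str]:
    """Return the categories whose rating meets or exceeds the policy's block threshold."""
    return [
        category
        for category, threshold in policy.items()
        if LEVELS.index(annotation.get(category, "UNKNOWN")) >= LEVELS.index(threshold)
    ]


ratings = {"adult": "VERY_UNLIKELY", "spoof": "UNLIKELY", "medical": "UNLIKELY",
           "violence": "POSSIBLE", "racy": "UNLIKELY"}
print(blocked_categories(ratings, POLICIES["kids_platform"]))  # ['violence']
print(blocked_categories(ratings, POLICIES["news_site"]))      # []
```

The same image passes on one platform and is blocked on the other, which is the point: the API supplies ratings, and the policy table encodes your rules.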

These features combined give you a powerful toolkit.

It’s not just about finding *a* problem.

It’s about identifying *specific types* of problems with a confidence score.

This data lets you automate rejection, send items for human review, or apply different moderation actions based on the severity and type of content detected.
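One way to act on both the type and the severity of a finding is an ordered rule table, where the first matching rule decides the action. The rules, action names (`age_gate`, `blur`, and so on) and cut-offs below are hypothetical examples, not anything the API prescribes:

```python
LEVELS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]

# Hypothetical rule table, checked top to bottom; the first matching rule wins,
# so the most severe actions are listed first.
# Each rule: (category, minimum rating, action).
RULES = [
    ("adult", "LIKELY", "reject"),
    ("violence", "VERY_LIKELY", "reject"),
    ("medical", "LIKELY", "age_gate"),
    ("racy", "LIKELY", "blur"),
    ("adult", "POSSIBLE", "human_review"),
    ("violence", "POSSIBLE", "human_review"),
]


def choose_action(annotation: dict[str, str], rules=RULES, default: str = "allow") -> str:
    """Return the action of the first rule whose category rating meets its minimum."""
    for category, minimum, action in rules:
        rating = annotation.get(category, "UNKNOWN")
        if LEVELS.index(rating) >= LEVELS.index(minimum):
            return action
    return default


print(choose_action({"adult": "UNLIKELY", "medical": "VERY_LIKELY"}))  # age_gate
```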

It’s the intelligence layer your moderation workflow needs.

Benefits of Using Google Cloud Vision Safe Search for Security and Moderation

Alright, so you know what it does. But what’s the payoff? Why should you even bother?

Simple. It makes your Security and Moderation efforts way, way better.

Speed: AI is fast. Really fast. It can process thousands, even millions, of images in the time it takes a human to look at a few dozen. This means harmful content is flagged and removed much quicker. Less exposure time for your users. Less time for bad actors to cause trouble.

Think about a viral image spreading. Manual review? Too slow. AI can catch it early.

Scale: Your user base grows? The amount of uploaded content explodes? Manual moderation doesn’t scale easily or cheaply. You need more people, more training, more desk space. Google Cloud Vision Safe Search runs on Google’s infrastructure, so you just send more images through the API (within your project’s quotas) and it handles the load. This is crucial for growing platforms. Avoids bottlenecking your growth with moderation limits.

Imagine hitting a user growth spurt without scaling your moderation. Disaster. AI solves that.

Consistency: Humans are great, but they’re also… human. They get tired. They have bad days. Their judgment can vary. AI is consistent. It applies the same rules, the same models, every single time. This leads to more uniform moderation decisions. Reduces errors introduced by fatigue. Ensures a fair and predictable user experience regarding content rules.

Consistent rules build trust in your platform. Inconsistent ones breed frustration.

Cost Savings: Building and maintaining a large human moderation team is expensive. Salaries, benefits, training, management overhead. Automating the initial filtering with AI significantly reduces the workload on your human team. They can be smaller, more specialised, and focused on the tricky cases AI can’t confidently decide on. Lowers operational costs significantly over time. Frees up budget for other critical areas.

It’s not about replacing people entirely, but making the people you have much more effective.

Improved User Safety: This is the ultimate goal, right? By catching harmful content faster and more reliably, you create a safer environment for your users. This protects vulnerable populations, enhances user trust, and encourages positive interactions. A safe platform is a successful platform. Reduces exposure to disturbing or illegal content. Builds a positive community reputation.

Users won’t stick around if they’re constantly seeing stuff that makes them uncomfortable or unsafe.

Brand Protection: Harmful user-generated content leaking onto your platform can seriously damage your brand reputation. News headlines about disturbing content, advertiser boycotts, user backlash. AI filtering acts as a shield. It helps prevent those incidents from happening in the first place. Protects your image and your relationships with partners and advertisers. Maintains a clean and professional online presence.

Your brand is your most valuable asset. Don’t let user content accidentally trash it.

These aren’t small wins. These are fundamental improvements to how you manage your online space.

It’s moving from reactive firefighting to proactive prevention.

Pricing & Plans

Alright, the money question. How much does this cost?

Google Cloud services, including Vision API and its Safe Search feature, typically operate on a pay-as-you-go model.

This means you pay based on your usage.

Specifically, you’re charged per unit, where a unit is one feature (such as Safe Search detection) applied to one image.

The pricing is tiered. The more images you process, the lower the cost per image gets.

There is usually a free tier.

The free tier allows you to process a certain number of images per month for free.

This is great for testing the service, running small-scale applications, or for businesses with low volume content uploads.

For example, the free tier often includes the first 1,000 units per month for Safe Search detection. (Check Google Cloud’s official pricing page for the absolute latest details, as these numbers can change).

Once you exceed the free tier limit, you start paying based on the per-image rate.

The rates are typically fractions of a cent per image.

For high-volume users, the cost per image drops further at higher tiers.

This model is really flexible.

You’re not locked into a huge monthly subscription if your usage is low or fluctuates.

You only pay for what you use.
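Tiered pricing turns a monthly estimate into a piecewise sum: each slice of usage is charged at its own tier’s rate. A sketch, assuming the 1,000-unit free allowance mentioned above; the tier boundaries and per-1,000 rates in `EXAMPLE_TIERS` are placeholders, not Google’s prices, so swap in the figures from the official pricing page:

```python
# Each tier: (upper bound of the tier in units per month, price per 1,000 units in USD).
# PLACEHOLDER numbers for illustration only; they are NOT Google's actual rates.
EXAMPLE_TIERS = [
    (1_000, 0.00),         # free allowance
    (5_000_000, 1.00),     # hypothetical rate
    (float("inf"), 0.50),  # hypothetical lower rate at higher volume
]


def monthly_cost(units: int, tiers=EXAMPLE_TIERS) -> float:
    """Estimate a monthly bill by charging each slice of usage at its tier's rate."""
    cost = 0.0
    lower = 0
    for upper, price_per_1000 in tiers:
        if units <= lower:
            break
        in_tier = min(units, upper) - lower  # units that fall inside this tier
        cost += in_tier / 1000 * price_per_1000
        lower = upper
    return round(cost, 2)


print(monthly_cost(500))        # 0.0 (within the free allowance)
print(monthly_cost(101_000))    # 100.0
print(monthly_cost(6_001_000))  # 5499.5
```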

How does this compare to alternatives?

Alternatives could include building your own image analysis system (hugely expensive and complex), using other cloud providers’ APIs (like AWS Rekognition or Azure Computer Vision), or relying purely on human moderation (as discussed, doesn’t scale well and is costly).

Compared to building your own, Google Cloud Vision is dramatically cheaper and faster to implement. You leverage Google’s massive R&D investment and infrastructure.

Compared to other cloud APIs, pricing can be competitive, and the specific model and features might suit your needs better. Google’s models are trained on large datasets, but accuracy varies by content type, so benchmark each provider on a sample of your own images before committing.

Compared to human moderation for initial filtering, it’s significantly more cost-effective at scale. The AI does the bulk work, humans handle the exceptions.

So, while it’s not “free” for significant use, the pay-as-you-go structure makes it accessible and cost-efficient compared to the problem it solves and the alternatives available.

Always check the official Google Cloud pricing page for the most current and detailed information before implementing. It’s a variable cost tied directly to your platform’s activity.

Hands-On Experience / Use Cases

Okay, enough theory. What’s it like to actually *use* Google Cloud Vision Safe Search?

And where can you apply it?

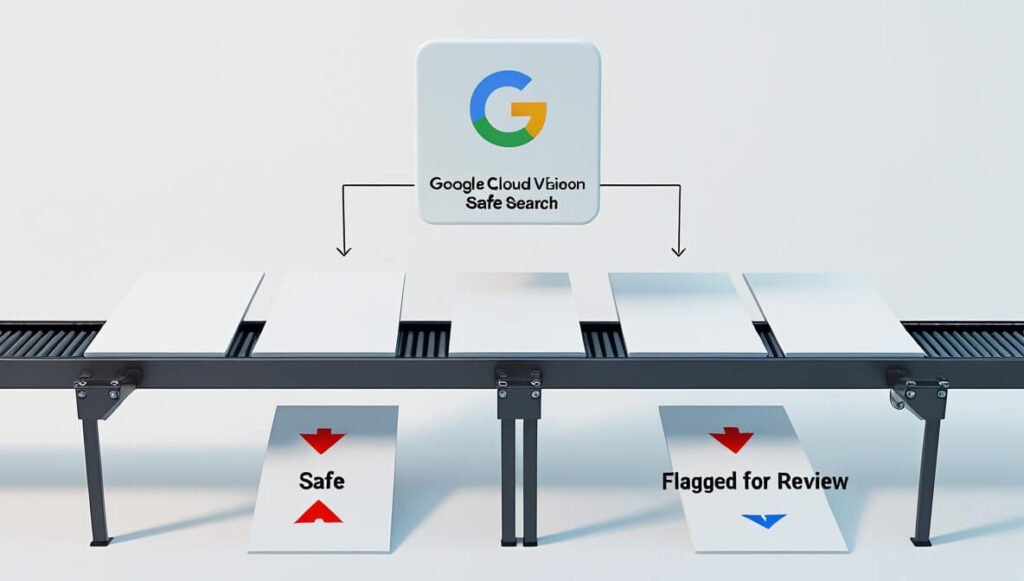

Using it is typically done via an API (Application Programming Interface).

This means your developers integrate it directly into your application or platform.

When a user uploads an image, your system sends that image to the Google Cloud Vision API, either as base64-encoded bytes or as a link (a Cloud Storage URI or a publicly accessible URL).

You specifically ask for the Safe Search detection feature.
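Under the hood, asking for Safe Search means adding the `SAFE_SEARCH_DETECTION` feature to a request sent to the REST endpoint `https://vision.googleapis.com/v1/images:annotate` (the official client libraries wrap the same call; in Python, `vision.ImageAnnotatorClient().safe_search_detection(image=...)`). A sketch that only builds the JSON body, assuming authentication and the HTTP call are handled elsewhere; the helper name and the `gs://` bucket in the example are hypothetical:

```python
import base64
import json
from typing import Optional


def build_safe_search_request(image_bytes: Optional[bytes] = None,
                              image_uri: Optional[str] = None) -> str:
    """Return the JSON body for one Safe Search request to images:annotate.

    Pass either the raw image bytes (sent base64-encoded) or a URI such as a
    gs:// Cloud Storage path or a public https:// URL.
    """
    if (image_bytes is None) == (image_uri is None):
        raise ValueError("pass exactly one of image_bytes or image_uri")
    if image_bytes is not None:
        image = {"content": base64.b64encode(image_bytes).decode("ascii")}
    else:
        image = {"source": {"imageUri": image_uri}}
    body = {"requests": [{"image": image,
                          "features": [{"type": "SAFE_SEARCH_DETECTION"}]}]}
    return json.dumps(body)


print(build_safe_search_request(image_uri="gs://example-bucket/upload.jpg"))
```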

The API crunches the numbers using its AI models.

It then returns a response (JSON, if you call the REST endpoint directly) giving a likelihood rating for each Safe Search category (Adult, Spoof, Medical, Violence, Racy).
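Reading the result is a matter of pulling `safeSearchAnnotation` out of each entry in the `responses` array. A sketch of that parsing step, assuming the REST JSON shape; the sample response is hand-written for illustration, not captured API output, and the function name is hypothetical:

```python
import json

CATEGORIES = ("adult", "spoof", "medical", "violence", "racy")


def parse_safe_search(response_text: str) -> list[dict[str, str]]:
    """Extract per-category ratings from an images:annotate JSON response.

    Returns one dict per image, in request order; missing categories become "UNKNOWN".
    Raises RuntimeError if the API reported an error for an image.
    """
    results = []
    for item in json.loads(response_text).get("responses", []):
        if "error" in item:
            raise RuntimeError(item["error"].get("message", "unknown Vision API error"))
        annotation = item.get("safeSearchAnnotation", {})
        results.append({c: annotation.get(c, "UNKNOWN") for c in CATEGORIES})
    return results


sample = """{"responses": [{"safeSearchAnnotation": {"adult": "VERY_UNLIKELY",
  "spoof": "UNLIKELY", "medical": "VERY_UNLIKELY", "violence": "POSSIBLE",
  "racy": "UNLIKELY"}}]}"""
print(parse_safe_search(sample)[0]["violence"])  # POSSIBLE
```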

Your system then reads those scores.

Based on the thresholds you’ve set, it takes action.

If the Adult rating is “VERY_LIKELY” and your policy rejects anything above “UNLIKELY”, the image is automatically rejected or quarantined.

If the Violence score is “POSSIBLE” and your threshold for automatic rejection is “LIKELY”, the image might be sent to your human moderation queue for a manual look.
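The two decisions described above boil down to a pair of thresholds: one where human review starts and one where automatic rejection starts. A minimal sketch with hypothetical function names and illustrative defaults; for a whole image, the strictest outcome across categories wins:

```python
LEVELS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]
SEVERITY = {"accept": 0, "review": 1, "reject": 2}


def route(rating: str, review_from: str = "POSSIBLE", reject_from: str = "LIKELY") -> str:
    """Map a single category's rating to 'reject', 'review' or 'accept'."""
    level = LEVELS.index(rating)
    if level >= LEVELS.index(reject_from):
        return "reject"
    if level >= LEVELS.index(review_from):
        return "review"
    return "accept"


def route_image(annotation: dict[str, str], review_from: str = "POSSIBLE",
                reject_from: str = "LIKELY") -> str:
    """Apply route() to every category and keep the strictest outcome."""
    outcomes = [route(r, review_from, reject_from) for r in annotation.values()]
    return max(outcomes, key=SEVERITY.__getitem__, default="accept")


# The two scenarios from the text:
print(route("VERY_LIKELY", reject_from="POSSIBLE"))  # reject (policy: anything above UNLIKELY)
print(route("POSSIBLE"))                              # review (auto-reject starts at LIKELY)
```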

This workflow is incredibly efficient.

Let’s run through some use cases:

Social Media Platforms: Users post millions of images daily. Automating the detection of explicit, racy, and violent content is critical for safety and compliance. Safe Search can filter out a large share of problematic content before it’s even seen by users. Reduces the workload on human moderators significantly. Helps enforce community standards at scale.

Example: A large social network uses Safe Search to scan every profile picture upload. Images flagged as “VERY_LIKELY” Adult are automatically rejected. Those flagged as “LIKELY” or “POSSIBLE” are sent for human review.

E-commerce Marketplaces: Sellers upload product images. You need to ensure these images are safe and appropriate for all shoppers. No explicit content, no violent imagery in product listings. Safe Search provides a fast check on millions of images. Protects the platform’s reputation and advertiser relationships. Ensures a family-friendly shopping environment.

Example: An online marketplace for handmade goods uses Safe Search to scan all listing photos. If an image scores high on Adult or Violence, the listing is automatically flagged for review before going live.

Online Gaming Communities: Gamers share screenshots and avatars. This can sometimes include inappropriate or offensive imagery. Google Cloud Vision Safe Search helps moderate these visual uploads. Maintains a positive and inclusive gaming environment. Reduces toxicity associated with harmful visual content.

Example: A popular online game allows users to upload custom avatars. Safe Search scans these uploads, blocking avatars flagged as inappropriate based on the Safe Search categories.

Educational Platforms: Protecting students from inappropriate content is paramount. If students or educators can upload images (e.g., for projects, discussions), Safe Search provides a layer of safety. Supports compliance with child safety regulations. Creates a more secure learning environment.

Example: A virtual classroom platform uses Google Cloud Vision Safe Search to scan images uploaded to shared project boards. This helps prevent accidental or intentional sharing of harmful visuals among students.

The experience for developers is standard API integration. For the business, it’s about setting policies based on the AI’s confidence scores. The results are faster filtering, less manual work, and a significantly safer platform.

Who Should Use Google Cloud Vision Safe Search?

Who is this tool actually built for?

If you manage any online platform, app, or service where users can upload images or videos, you should be looking at Google Cloud Vision Safe Search.

Seriously.

Let’s break down the ideal user profiles:

Social Media Platforms: From global giants to niche communities. If user feeds, profile pictures, or shared images are a core part of your service, filtering is non-negotiable.

Online Marketplaces and E-commerce: Any site where third-party sellers or users post images of products or items. Maintaining brand safety and appropriate content is crucial for sales and trust.

Gaming Platforms and Communities: User-generated content like avatars, screenshots, or shared artwork is common. Keeping this content clean improves the player experience and community health.

Forums and Discussion Boards: Image sharing is frequent. Preventing spam, offensive images, or illegal content is essential for maintaining a healthy discussion environment.

Dating Apps: Profile pictures and in-app image sharing require strict moderation to prevent explicit or inappropriate content.

Content Sharing Platforms (e.g., image hosting, video sharing): Any service built around users uploading visual media needs robust filtering to comply with laws and maintain a safe user base.

Cloud Storage Services with Sharing Features: If users can share image files publicly or semi-publicly, you might want to scan for harmful content to prevent abuse of your service.

Educational & Child-Focused Platforms: Protecting children from exposure to inappropriate content is a legal and ethical requirement. Strict filtering is necessary.

Businesses with User-Generated Content in Marketing: Running contests or campaigns where users submit photos? You need to moderate submissions before displaying them publicly.

Basically, if you are responsible for the content that appears on your platform, and a significant portion of that content is visual and comes from users, Google Cloud Vision Safe Search is built for you.

It’s for the developers building these platforms, the product managers defining content policies, and the Security and Moderation teams tasked with keeping the platform safe.

Google Cloud Vision Safe Search allows small teams to handle large volumes and large teams to work more efficiently and focus on complex moderation challenges.

It’s not just for tech giants. Any business with significant user-generated imagery can benefit from this automation and intelligence.

How to Make Money Using Google Cloud Vision Safe Search

Okay, can you actually make money with this tool?

Yes. Not directly by selling the tool itself, obviously.

But by using its capabilities to build services or improve your existing business.

Think efficiency. Think new service offerings.

Here’s how you can potentially leverage Google Cloud Vision Safe Search for profit:

- Offer Visual Moderation as a Service: Many smaller platforms, forums, or e-commerce sites don’t have the technical expertise or scale to integrate AI filtering themselves. You can build a service layer on top of Google Cloud Vision. You handle the API integration, the policy setting, and the reporting. They send you images, you return moderation results. This is a direct service business.

Target small to medium businesses (SMBs) who need content moderation but can’t build their own system. Charge a per-image fee or a monthly subscription based on volume.

- Build and Sell a Plugin or Extension: If you focus on a specific platform (like WordPress, Shopify, a specific forum software), you can build a plugin that integrates Google Cloud Vision Safe Search into user uploads for that platform. Sell the plugin or offer it as a subscription.

Many website owners need image moderation but don’t know how to code APIs. Your plugin provides a simple solution they can install and configure. Charge a licence fee or a recurring subscription for updates and support.

- Improve Efficiency of Your Own Platform: If you run a platform with user-generated content, using Google Cloud Vision Safe Search reduces the cost and time spent on manual moderation. This directly impacts your bottom line by lowering operational expenses. More efficient moderation means fewer human hours needed. Those saved labour costs are profit.

Reallocate your human moderators to higher-value tasks, like community engagement or policy refinement, instead of sifting through millions of obviously bad images. This makes your team more productive.

- Enhance Brand Safety for Advertisers: If your platform relies on advertising revenue, ensuring a brand-safe environment is critical. Advertisers won’t place ads next to harmful content. By implementing robust filtering with Google Cloud Vision Safe Search, you can confidently tell advertisers your platform is safe. This can help attract premium advertisers and increase your ad revenue.

Position your platform as a safe space for brands. Use your automated moderation capability as a selling point to advertising partners. Higher brand safety = higher ad rates.

- Develop Niche Filtering Solutions: The Safe Search categories are general. You could use the broader Google Cloud Vision capabilities (object detection, label detection, landmark detection) in combination with Google Cloud Vision Safe Search to create niche filtering services. For example, an AI service that specifically finds images of illegal products or copyrighted material, using Safe Search as a base layer for general inappropriateness.

Identify a specific problem area in visual content that isn’t fully addressed by standard tools and build a specialized service around it. Could be specific types of spam imagery, counterfeit goods, etc.
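Combining features is a matter of listing more than one in the same request and reading both parts of the response. A sketch, assuming the REST JSON shape (`safeSearchAnnotation` and `labelAnnotations` on each response item); the watchlist, the 0.7 label score cut-off and the function name are hypothetical, and label descriptions vary, so a real filter would need testing against your own images:

```python
LEVELS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]
CATEGORIES = ("adult", "spoof", "medical", "violence", "racy")

# One request can ask for several features at once.
REQUEST_FEATURES = [
    {"type": "SAFE_SEARCH_DETECTION"},
    {"type": "LABEL_DETECTION", "maxResults": 10},
]

# Hypothetical watchlist for a niche filter, e.g. a marketplace that bans weapon listings.
WATCHLIST = {"firearm", "gun", "knife"}


def niche_flags(item: dict, watchlist=WATCHLIST, min_score: float = 0.7,
                unsafe_from: str = "LIKELY") -> list[str]:
    """Combine Safe Search ratings and label matches from one images:annotate response item."""
    reasons = []
    safe = item.get("safeSearchAnnotation", {})
    for category in CATEGORIES:
        rating = safe.get(category, "UNKNOWN")
        if LEVELS.index(rating) >= LEVELS.index(unsafe_from):
            reasons.append(f"safe_search:{category}")
    for label in item.get("labelAnnotations", []):
        if (label.get("description", "").lower() in watchlist
                and label.get("score", 0.0) >= min_score):
            reasons.append(f"label:{label['description']}")
    return reasons
```

Safe Search acts as the general base layer here, and the label check adds the niche-specific rule on top.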

It’s not just about stopping bad stuff. It’s about building a safer, more efficient system that either saves you money, attracts more business, or becomes a business in itself.

The key is to use the automation and accuracy of the AI to solve a real-world business problem related to visual content.

Limitations and Considerations

Okay, let’s be real. No tool is perfect.

Google Cloud Vision Safe Search is powerful, but it has limitations you need to be aware of.

Not 100% Accurate: AI models are statistical. They provide likelihoods, not certainties. They can make mistakes. False positives (flagging safe content as unsafe) and false negatives (missing unsafe content) happen. You can’t rely solely on the AI; a human review process for flagged or borderline content is almost always necessary. It’s a filter, not a replacement for human judgment in complex cases.

An image flagged as “LIKELY” violence might just be a piece of art or a historical photo that needs human context.
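The practical consequence is that thresholds should be chosen by measurement rather than guesswork. One way, sketched here, is to have moderators label a sample of your own images and count how often a given threshold flags safe images (false positives) or misses unsafe ones (false negatives). The function name is hypothetical and the sample data is invented purely for illustration:

```python
LEVELS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]


def error_rates(samples: list[tuple[str, bool]], threshold: str) -> tuple[float, float]:
    """Return (false_positive_rate, false_negative_rate) when flagging at `threshold`.

    `samples` pairs the API's rating for one category with a human verdict
    (True = the image really is unsafe for that category).
    """
    false_pos = false_neg = safe_total = unsafe_total = 0
    for rating, really_unsafe in samples:
        flagged = LEVELS.index(rating) >= LEVELS.index(threshold)
        if really_unsafe:
            unsafe_total += 1
            false_neg += not flagged  # unsafe image the threshold let through
        else:
            safe_total += 1
            false_pos += flagged      # safe image the threshold caught
    fpr = false_pos / safe_total if safe_total else 0.0
    fnr = false_neg / unsafe_total if unsafe_total else 0.0
    return fpr, fnr


# Invented sample for illustration only.
labelled = [("VERY_LIKELY", True), ("LIKELY", True), ("POSSIBLE", True), ("UNLIKELY", True),
            ("LIKELY", False), ("POSSIBLE", False), ("UNLIKELY", False), ("VERY_UNLIKELY", False)]
for threshold in ("LIKELY", "POSSIBLE"):
    print(threshold, error_rates(labelled, threshold))
# LIKELY (0.25, 0.5)
# POSSIBLE (0.5, 0.25)
```

Lowering the threshold catches more unsafe images at the price of more false positives; where to sit on that trade-off is a policy decision.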

Context is Key: The AI sees pixels. It doesn’t understand context like satire, art, educational content, or cultural nuances. A medical image is appropriate on a medical forum but not on a social feed. Google Cloud Vision Safe Search can flag it as “Medical,” but *you* need to decide if that’s okay in *your* specific context based on your policies. You need to build the logic *around* the API results.

A picture of a statue might be flagged as Adult by mistake because of anatomical shapes. Humans understand it’s art.

API Integration Required: Google Cloud Vision Safe Search isn’t a drag-and-drop software. You need developers to integrate the API into your existing systems. This requires technical resources and development time. It’s not an off-the-shelf solution for non-technical users.

If you’re not a developer or don’t have one, you’ll need to hire someone or look for third-party solutions built on top of the API.

Cost Can Add Up at High Volume: While the pay-as-you-go model is flexible, processing millions of images per month will incur significant costs. You need to factor Safe Search into your budget and potentially optimize which images you send to the API (e.g., only scan user uploads, not every image on the site). Scale can be expensive if not managed well.

Estimate your usage and check the pricing tiers carefully to understand the potential costs at scale.
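Another way to trim cost is to avoid paying twice for the same file, since re-uploads and reposts are common. A sketch, assuming an in-memory cache keyed by the SHA-256 of the image bytes and a `scan` callable (hypothetical) that performs the real API call; a production version would use a shared, persistent store:

```python
import hashlib
from typing import Callable


class SafeSearchCache:
    """Remember results by SHA-256 of the image bytes so repeat uploads are not re-billed.

    `scan` is a hypothetical function that sends one image to the API and returns its ratings.
    """

    def __init__(self, scan: Callable[[bytes], dict]):
        self._scan = scan
        self._results: dict[str, dict] = {}
        self.api_calls = 0  # how many times the (billed) API was actually called

    def check(self, image_bytes: bytes) -> dict:
        key = hashlib.sha256(image_bytes).hexdigest()
        if key not in self._results:
            self.api_calls += 1
            self._results[key] = self._scan(image_bytes)
        return self._results[key]
```

Exact hashing only catches byte-identical files; a resized or re-encoded copy produces a new hash and gets scanned again.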

Doesn’t Analyze Video Directly: The Safe Search feature of Vision API is designed for still images. Moderating video content is more complex and usually requires different tools or processing techniques (like extracting frames and analysing them individually, which adds cost and complexity).

If video moderation is a major need, you’ll need to combine Vision API with other tools or look at video-specific services such as Google Cloud’s Video Intelligence API, which offers explicit content detection.
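The frame-by-frame approach needs two pieces besides the API call: choosing which frames to grab, and merging per-frame ratings into one verdict for the video. A sketch of both, assuming frames are extracted with a separate tool (such as ffmpeg, not shown) and that keeping the worst rating per category is an acceptable aggregation; function names are hypothetical:

```python
import math

LEVELS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]


def sample_timestamps(duration_s: float, every_s: float) -> list[float]:
    """Timestamps (in seconds) at which to grab one frame, every `every_s` seconds."""
    return [i * every_s for i in range(math.ceil(duration_s / every_s))]


def worst_per_category(frame_annotations: list[dict[str, str]]) -> dict[str, str]:
    """Aggregate per-frame ratings by keeping the most severe rating seen for each category."""
    worst: dict[str, str] = {}
    for annotation in frame_annotations:
        for category, rating in annotation.items():
            if category not in worst or LEVELS.index(rating) > LEVELS.index(worst[category]):
                worst[category] = rating
    return worst


print(len(sample_timestamps(60, 2)))  # 30 frames, i.e. 30 billed image units
```

Sampling every 2 seconds, a 60-second clip costs 30 image units, and anything shorter than the sampling interval can slip between frames.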

Limited Categories: The Safe Search categories are specific (Adult, Spoof, Medical, Violence, Racy). Google Cloud Vision Safe Search won’t detect other types of problematic content like hate symbols, spam text overlaid on images, copyright infringement, or misinformation presented visually. Other Vision API features can help with parts of this (text detection extracts overlaid text, logo detection spots well-known logos), but you might need other tools or methods too.

Safe Search is great for explicit and harmful imagery, but it won’t catch everything bad in an image.

Understanding these limitations is key to using the tool effectively. It’s a powerful component of a moderation system, but it needs to be part of a broader strategy that includes human review, other detection methods, and clear content policies.

Final Thoughts

So, bottom line?

If you’re running a platform with user-generated visual content, Security and Moderation is not optional.

It’s foundational.

And doing Visual Content Filtering manually at any sort of scale is a losing battle.

Google Cloud Vision Safe Search offers a powerful, scalable, and cost-effective way to automate the first, highest-volume part of that process.

It doesn’t replace human moderators entirely.

But it makes them vastly more efficient by handling the bulk of the obvious cases.

This frees up your team to focus on the nuance, the context, and the edge cases that AI can’t handle yet.

The benefits are clear: faster detection, better scale, consistent application of rules, lower operational costs, enhanced user safety, and critical brand protection.

The pay-as-you-go model makes it accessible, whether you’re a startup or a large enterprise.

Yes, Google Cloud Vision Safe Search requires technical integration, and you need to understand its limitations regarding accuracy and context.

But as a core component of a modern content moderation strategy, especially for visual content, it’s a game-changer.

It moves you from reactive clean-up to proactive prevention.

In the digital age, where a single harmful image can cause significant damage, having this kind of automated defence is essential.

It’s a smart investment in the health, safety, and future of your online community.

Ready to see how it fits into your workflow?

Visit the official Google Cloud Vision Safe Search website

Frequently Asked Questions

1. What is Google Cloud Vision Safe Search used for?

Google Cloud Vision Safe Search is used to detect different categories of inappropriate content in images: adult, racy, violent, medical, and spoofed (doctored) imagery.

Its main purpose is to help platforms automatically filter and moderate user-uploaded visual content.

2. Is Google Cloud Vision Safe Search free?

It offers a free tier that allows you to process a limited number of images per month without charge.

Beyond the free tier, Google Cloud Vision Safe Search operates on a pay-as-you-go pricing model based on the volume of images processed.

3. How does Google Cloud Vision Safe Search compare to other AI tools?

It’s a leading AI tool for image analysis from Google.

Google Cloud Vision Safe Search competes with similar services like AWS Rekognition and Azure Computer Vision.

Its strengths lie in Google’s extensive data and AI research, offering robust detection categories specifically for safety and moderation.

4. Can beginners use Google Cloud Vision Safe Search?

Using the core API requires technical knowledge or developers to integrate it into a platform.

Beginners would typically use tools or platforms that have already integrated Google Cloud Vision Safe Search behind the scenes.

5. Does the content created by Google Cloud Vision Safe Search meet quality and optimization standards?

Google Cloud Vision Safe Search doesn’t *create* content. It *analyzes* existing images and provides data (scores) about their safety.

This data helps platforms enforce their own quality and content standards for user submissions.

6. Can I make money with Google Cloud Vision Safe Search?

Yes, you can make money by building services on top of the API, such as offering visual moderation as a service to others.

You can also save money and increase profitability on your own platform by using Google Cloud Vision Safe Search to automate and increase the efficiency of your content moderation workflow.