Perspective API makes Inappropriate Content Flagging easier. Stop sifting manually. Get consistent results fast. See how it works!

The Untold Benefits of Perspective API

Alright, let’s talk brass tacks.

The online world? It’s noisy.

Full of comments, posts, videos, you name it.

And not all of it is sunshine and rainbows.

Think about it.

You’re building a community, a brand, a platform.

You want people to feel safe. To engage without fear.

But then comes the tide of negativity. Harassment. Toxic talk.

Someone’s gotta handle that, right?

Historically, that meant armies of moderators.

Reading every single comment. Judging every single piece of content.

Brutal work. Time-consuming. Emotionally draining.

And frankly, not always consistent.

Now, AI is stepping into the ring.

You’re seeing it pop up everywhere in fields like Security and Moderation.

One tool making waves? Perspective API.

Specifically, for tackling that nasty job: Inappropriate Content Flagging.

This isn’t about replacing humans entirely.

It’s about giving them a superpower.

A way to sift through the noise at scale.

To flag the stuff that needs attention. Fast.

Let’s break down what this thing is and why it matters.

Especially if you’re in the trenches of keeping online spaces clean.

Ready?

Let’s get after it.

Table of Contents:

- What is Perspective API?

- Key Features of Perspective API for Inappropriate Content Flagging

- Benefits of Using Perspective API for Security and Moderation

- Pricing & Plans

- Hands-On Experience / Use Cases

- Who Should Use Perspective API?

- How to Make Money Using Perspective API

- Limitations and Considerations

- Final Thoughts

- Frequently Asked Questions

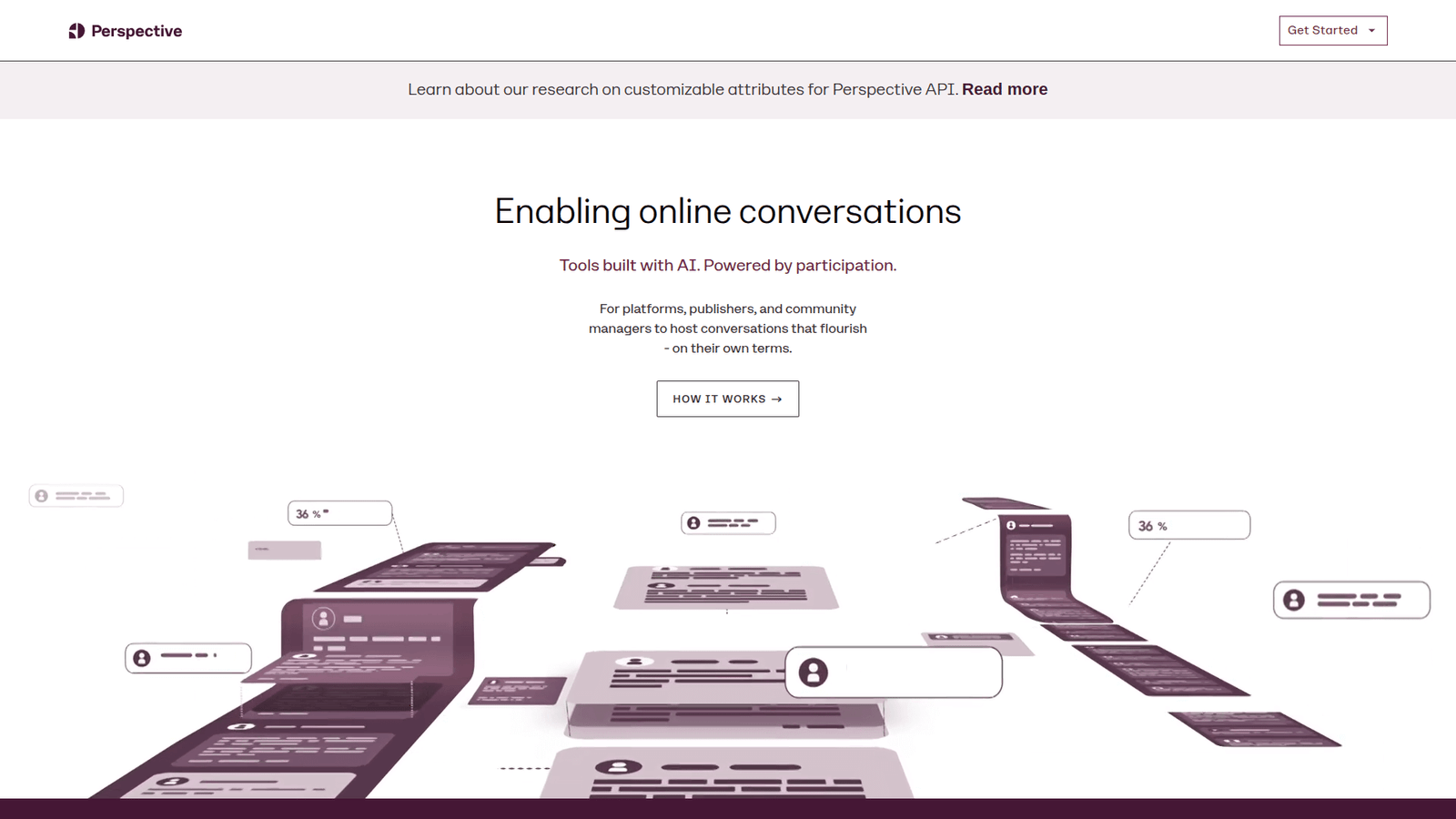

What is Perspective API?

Okay, so what exactly are we talking about here?

Perspective API is an AI tool.

Built by Jigsaw, an incubator within Google.

Its core job?

To analyze text. Specifically, online comments and conversations.

It uses machine learning to score how likely a comment is to be perceived as “toxic” or otherwise harmful.

Think of it as a toxicity detector.

It doesn’t tell you if something is *definitely* bad.

It gives you a score. A probability.

Based on patterns it learned from massive datasets of human-labelled comments.

It’s designed for developers and platforms.

People running websites, forums, social media platforms, game chats, anywhere people talk online.

Their goal? To understand and manage conversation quality.

To identify potentially abusive or inappropriate content.

And to make moderating that content more efficient.

It’s not just about deleting stuff.

It’s about understanding the nature of the conversation.

Identifying trends. Seeing where toxicity is spiking.

And giving moderators tools to intervene effectively.

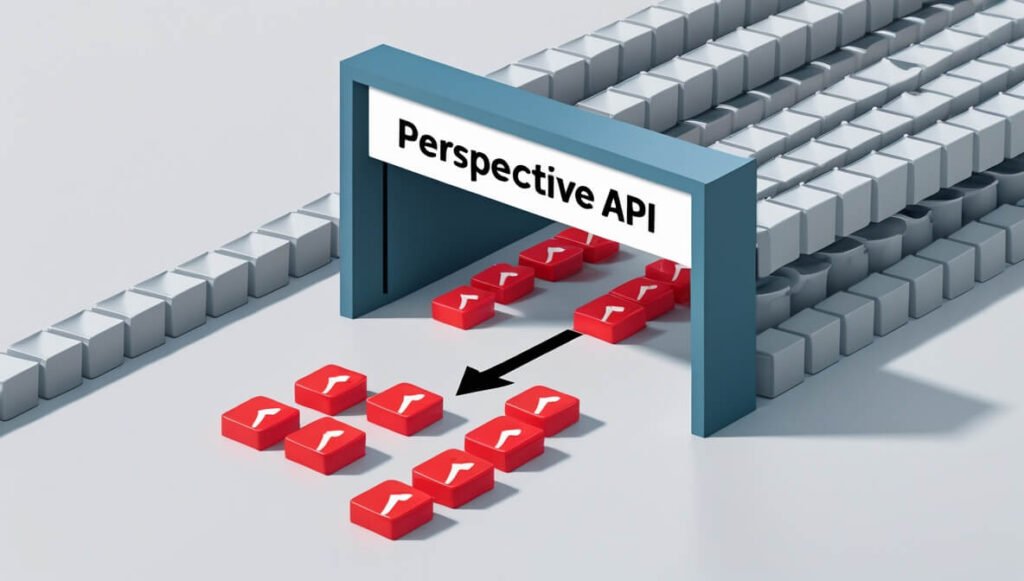

This tool is aimed squarely at the problem of scale.

The internet is huge. Manual moderation can’t keep up.

Perspective API provides an automated layer.

A first pass. A way to highlight the needle in the haystack.

Leaving human moderators to focus on the nuanced cases.

The ones that require human judgment.

It helps with identifying various types of harmful speech.

Which is key for solid Security and Moderation.

And it’s a critical piece of the puzzle for serious Inappropriate Content Flagging.

It’s a technical tool, yes.

You need some dev chops to integrate it.

But the concept is simple: use AI to score conversational risk.

And use those scores to power your moderation decisions.

That’s the gist of it.

A powerful engine for understanding online text.

Focused on making online spaces safer and more productive.

By giving you data-driven insights into toxicity.
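To make "send text, get a score" concrete, here’s a minimal sketch in Python. The endpoint and JSON field names follow the public Perspective API reference as I understand it, so check the current docs before relying on them. The function names (`build_request`, `extract_scores`, `analyze`) and the sample response are made up for illustration.

```python
import json
import urllib.request

# Endpoint as published in the Perspective API docs; verify before use.
ANALYZE_URL = "https://commentanalyzer.googleapis.com/v1alpha1/comments:analyze"


def build_request(text, attributes=("TOXICITY",), languages=None):
    """Build the JSON body for one comments:analyze call."""
    body = {
        "comment": {"text": text},
        "requestedAttributes": {name: {} for name in attributes},
    }
    if languages:
        body["languages"] = list(languages)
    return body


def extract_scores(response):
    """Map each returned attribute to its summary score (0.0 to 1.0)."""
    return {
        name: data["summaryScore"]["value"]
        for name, data in response.get("attributeScores", {}).items()
    }


def analyze(text, api_key, attributes=("TOXICITY",)):
    """Score one comment. This makes a network call and needs a valid API key."""
    payload = json.dumps(build_request(text, attributes)).encode("utf-8")
    request = urllib.request.Request(
        f"{ANALYZE_URL}?key={api_key}",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=10) as reply:
        return extract_scores(json.load(reply))


# Offline illustration: a made-up response in the documented shape.
sample_response = {
    "attributeScores": {
        "TOXICITY": {"summaryScore": {"value": 0.91, "type": "PROBABILITY"}}
    },
    "languages": ["en"],
}
print(extract_scores(sample_response))
```

Only `analyze` touches the network. The other two functions are pure, which makes them easy to test without an API key.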

Let’s dive into how it actually helps with the nitty-gritty.

Key Features of Perspective API for Inappropriate Content Flagging

Alright, let’s break down the features that make this tool sing.

Specifically, how they help you with the heavy lifting of Inappropriate Content Flagging.

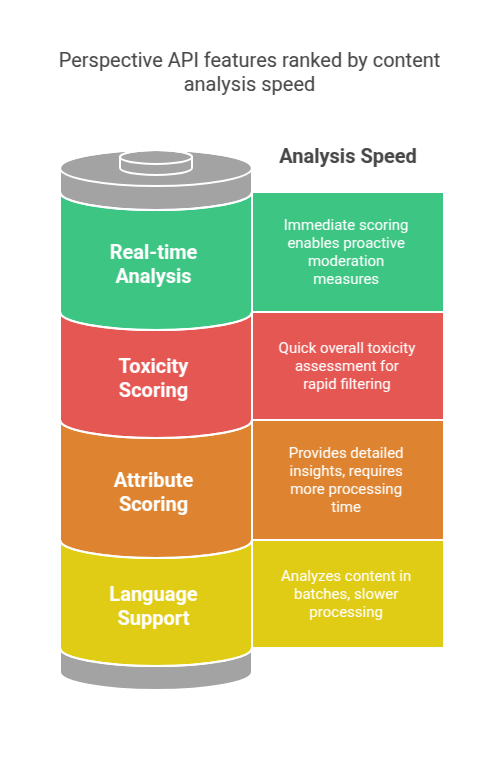

- Toxicity Scoring:

This is the bread and butter.

Perspective API takes a piece of text – a comment, a message – and gives it a score.

A number between 0 and 1.

This number represents the probability that the comment will be perceived as toxic by humans.

Higher number, higher probability of toxicity. Simple.

This score is your primary filter.

You set thresholds.

Maybe anything over 0.7 gets flagged for immediate review.

Maybe anything over 0.9 gets removed automatically (use with caution!).

This automates the first pass.

Instead of reading everything, your system reads everything, scores it, and only the potential problems get highlighted.

Massive time saver.

It’s the engine driving the efficiency in your Security and Moderation workflow.
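Here’s a small sketch of that threshold logic. The 0.7 and 0.9 cut-offs come straight from the example above. The names `Thresholds` and `route` are illustrative, and the right numbers for your platform are something you’d have to calibrate yourself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    review: float = 0.7  # above this, a human takes a look
    remove: float = 0.9  # above this, remove automatically (use with caution)


def route(score, thresholds=Thresholds()):
    """Turn a 0-to-1 toxicity score into a moderation outcome."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be between 0 and 1, got {score}")
    if score > thresholds.remove:
        return "remove"
    if score > thresholds.review:
        return "review"
    return "publish"


for example in (0.12, 0.75, 0.95):
    print(example, route(example))
```

Keeping the thresholds in one small object means you can tune them per community without touching the routing code.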

- Attribute Scoring:

Toxicity isn’t just one thing, right?

It can be insults, threats, identity attacks, sexually explicit language, and more.

Perspective API offers different “attributes” you can ask it to score.

These include SEVERE_TOXICITY, IDENTITY_ATTACK, INSULT, PROFANITY, and THREAT, plus experimental attributes such as SEXUALLY_EXPLICIT and FLIRTATION.

This is powerful because it gives you granularity.

You don’t just know a comment is “toxic.”

You know *why* it’s likely toxic.

Is it an insult? A threat? Profanity?

This helps you tailor your moderation response.

A different policy for insults versus threats, for example.

It allows for more sophisticated filtering and flagging.

You can prioritize review queues based on the type of potential harm.
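One way to use that granularity, sketched in Python. It assumes you already have a dictionary of attribute scores for a comment. The review order, the 0.7 threshold, and the function names are assumptions for illustration, not anything the API prescribes.

```python
# Assumed review order: the kinds of harm a team might want to see first.
REVIEW_ORDER = ("THREAT", "IDENTITY_ATTACK", "SEVERE_TOXICITY", "INSULT", "PROFANITY")


def likely_reasons(scores, threshold=0.7):
    """List the specific attributes at or above the threshold, highest score first."""
    hits = [
        (name, value)
        for name, value in scores.items()
        if name != "TOXICITY" and value >= threshold
    ]
    return [name for name, _ in sorted(hits, key=lambda item: item[1], reverse=True)]


def review_queue(scores, threshold=0.7):
    """Pick a review queue from the most serious attribute that crossed the threshold."""
    for name in REVIEW_ORDER:
        if scores.get(name, 0.0) >= threshold:
            return name.lower()
    if scores.get("TOXICITY", 0.0) >= threshold:
        return "general"
    return None  # nothing crossed the threshold, so no queue


# Made-up scores for one comment.
example = {"TOXICITY": 0.88, "INSULT": 0.81, "THREAT": 0.12, "PROFANITY": 0.93}
print(likely_reasons(example), review_queue(example))
```

Note the two functions answer different questions: `likely_reasons` ranks by score, while `review_queue` ranks by how serious you consider each type of harm.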

- Language Support:

The internet isn’t just in English.

Perspective API supports multiple languages.

This is crucial for platforms with international users.

You can’t just moderate in one language and ignore the rest.

Accuracy, and which attributes are available, can vary between languages.

But having capabilities in languages beyond English significantly expands its usefulness.

It means you can apply a consistent level of automated review across your platform.

Regardless of where your users are located or what language they’re typing in.

This isn’t just a nice-to-have.

For global platforms, it’s a necessity for effective Inappropriate Content Flagging.

And it keeps your Security and Moderation strategy robust globally.

- Real-time Analysis:

Speed matters in moderation.

Harmful content spreads fast.

Perspective API is built for real-time analysis.

You can send text to the API as soon as it’s posted.

Get a score back almost instantly.

This allows for immediate action.

You can queue high-scoring comments for review immediately.

Or even hold comments back until they’ve been scored.

Preventing harmful content from being visible at all, if your policy dictates.

This proactive approach is far more effective than reactive cleanup.

Waiting hours or days to find and remove harmful content means the damage is already done.

Real-time flagging lets you potentially catch things before they escalate.

It’s a critical feature for minimizing the impact of toxicity.
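The "hold comments back until they’ve been scored" idea can be sketched like this. The scorer is passed in as a plain function so the example runs without network access. Holding a comment when scoring fails is an assumed policy choice, and the 0.8 threshold and all names are illustrative.

```python
def decide_visibility(text, scorer, hold_threshold=0.8):
    """Return 'visible' or 'held' for a new comment, scoring it before it is shown.

    `scorer` is any callable that takes text and returns a 0-to-1 score,
    for example a wrapper around the real API call.
    """
    try:
        score = scorer(text)
    except Exception:
        # Assumed policy: if scoring fails, hold the comment for a human
        # instead of publishing it unscored.
        return "held"
    return "held" if score >= hold_threshold else "visible"


# Stand-in scorers for illustration; a real one would call the API.
def calm_scorer(text):
    return 0.05


def broken_scorer(text):
    raise TimeoutError("scoring service did not answer")


print(decide_visibility("Nice article!", calm_scorer))
print(decide_visibility("Nice article!", broken_scorer))
```

Whether to hold or publish on a scoring failure is a product decision. A busy chat might prefer to publish and score afterwards, while a comment section on sensitive topics might prefer to wait.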

Benefits of Using Perspective API for Security and Moderation

Okay, you know the features.

But what’s the actual payoff?

Why bother integrating this thing?

It boils down to a few key wins for your Security and Moderation game.

Saves Serious Time:

This is the big one.

Manual review is slow.

Imagine a busy forum or comments section.

Thousands, maybe millions of posts a day.

No human team can read and evaluate every single one.

Perspective API automates the initial scan.

It flags the likely problems.

Your human moderators now only need to focus on that smaller, flagged subset.

They aren’t sifting through mountains of harmless chatter.

They’re looking at the stuff that actually requires their judgment.

This dramatically reduces the volume of content humans need to touch.

Freeing them up for more complex tasks.

Like policy enforcement, trend analysis, or community engagement.

Less time wasted on the obvious stuff.

More time spent where human intelligence and empathy are needed.

Improves Consistency and Scale:

Humans are, well, human.

Judgments can vary. Fatigue sets in. Bias can creep in.

What one moderator flags, another might let slide.

Perspective API provides a consistent scoring mechanism.

The same model scores every comment the same way, no matter the time of day or the size of the queue.

This creates a baseline of consistency in your flagging process.

It also scales effortlessly.

Whether you have 100 comments or 100 million, the integration stays the same; only the quota you need changes.

Scaling a human moderation team is expensive and difficult.

Scaling an API call? Much simpler.

This consistency and scalability are huge advantages for Inappropriate Content Flagging on growing platforms.

Reduces Moderator Burnout:

Moderation is tough work.

Reading toxic content day in and day out is emotionally draining.

It leads to stress, anxiety, and high turnover.

By using Perspective API to filter out the vast majority of non-problematic content, you reduce the exposure of your human moderators to toxicity.

They only see the content that the AI has flagged as potentially harmful.

This doesn’t eliminate exposure, of course.

They still have to review the bad stuff.

But the sheer volume they have to process is much lower.

This can lead to a healthier, more sustainable moderation team.

Reducing burnout, improving morale, and retaining experienced staff.

Enables Proactive Measures:

With real-time scoring, you can be proactive.

Implement pre-moderation for high-risk content.

Hide comments with high toxicity scores pending review.

Send automated warnings to users whose comments consistently score high.

This shifts your moderation strategy from being purely reactive (“Someone reported this comment, let’s check it”) to proactive (“This comment looks risky, let’s address it before it causes harm”).

It’s a powerful way to maintain a healthier community atmosphere.

By identifying and addressing potential issues before they blow up.
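Here’s a rough sketch of the "users whose comments consistently score high" idea, using a rolling average per user. The window size, the threshold, the minimum comment count, and the class name are all assumptions you’d tune for your own community.

```python
from collections import defaultdict, deque


class RepeatOffenderTracker:
    """Track each user's most recent scores and signal when a warning is due."""

    def __init__(self, window=5, warn_threshold=0.7, min_comments=3):
        self.warn_threshold = warn_threshold
        self.min_comments = min_comments
        # Each user gets a fixed-size history; old scores fall off automatically.
        self._recent = defaultdict(lambda: deque(maxlen=window))

    def record(self, user_id, score):
        """Store a score and return True if the user's rolling average is too high."""
        history = self._recent[user_id]
        history.append(score)
        if len(history) < self.min_comments:
            return False  # not enough evidence yet
        return sum(history) / len(history) >= self.warn_threshold


tracker = RepeatOffenderTracker(window=3)
for score in (0.9, 0.8, 0.9, 0.1):
    print(score, tracker.record("user-42", score))
```

Requiring a minimum number of comments keeps a single heated reply from triggering a warning on its own.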

Provides Data Insights:

The API doesn’t just give scores.

It generates data.

You can track toxicity scores over time.

See which topics or events lead to spikes in toxic conversation.

Identify patterns in harmful behaviour.

This data is invaluable for refining your moderation policies.

Understanding your community dynamics.

And making strategic decisions about platform design or interventions.

It turns your Inappropriate Content Flagging from a reactive chore into a data-driven strategy.

Allowing you to get smarter about Security and Moderation over time.
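Tracking scores over time can start very simply. This sketch groups stored scores by day and reports the average plus the share of comments over a flagging threshold. The record format, the 0.7 threshold, and the sample numbers are invented for illustration.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def daily_toxicity(records, flag_threshold=0.7):
    """Summarize (day, score) pairs into per-day mean score and flagged share."""
    by_day = defaultdict(list)
    for day, score in records:
        by_day[day].append(score)
    return {
        day: {
            "mean": mean(scores),
            "flagged_share": sum(s >= flag_threshold for s in scores) / len(scores),
        }
        for day, scores in sorted(by_day.items())
    }


# Made-up sample: a quiet day followed by a heated one.
records = [
    (date(2024, 1, 1), 0.1),
    (date(2024, 1, 1), 0.3),
    (date(2024, 1, 2), 0.9),
    (date(2024, 1, 2), 0.8),
    (date(2024, 1, 2), 0.2),
]
for day, summary in daily_toxicity(records).items():
    print(day, summary)
```

The same grouping works with a topic or thread ID instead of a date if you want to see where conversations heat up rather than when.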

Pricing & Plans

Okay, let’s talk about the money part.

Is this free? What does it cost?

According to Jigsaw’s documentation, Perspective API is free to use.

There’s no per-call bill.

What you get instead is a quota.

New projects start with a default limit of about one query per second.

Usually enough to get started, test things out, or run it on a smaller project.

For anything more significant, you request a quota increase and explain your use case.

A single request can ask for several attributes at once (Toxicity, Severe Toxicity, Identity Attack, etc.).

So the real constraint is requests per second, not dollars per comment.

Terms can change, so confirm the current limits in the official documentation before you build around them.

Compared to alternatives?

Cloud providers (like AWS, Microsoft Azure, and Google Cloud) offer natural language processing services.

Some have specific toxicity or content moderation models.

Their pricing models vary: some charge per character, others per document or per API call.

Perspective API’s main focus is on conversational toxicity, which is its strength.

And it’s much cheaper than hiring human moderators to do the initial screening work.

You still need to do the math.

Calculate your expected volume of content.

Estimate how many API calls you’ll make each month, and how many per second at peak.

Then compare that with the quota your project has been granted.

The real costs are operational: developer time for integration, and human review of whatever gets flagged.

Costs that can pay for themselves many times over in saved labor and improved community health.

So the API itself is free, but the capacity you get depends on your quota.

And it’s designed to be cost-effective compared to the manual alternative for serious Inappropriate Content Flagging.
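The volume estimate mentioned above is simple arithmetic, sketched here in Python. It assumes one API call per comment. The peak factor and the `quota_qps` value are placeholders: take the real rate limit from your own project settings.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def estimate_load(comments_per_day, peak_factor=3.0, days_per_month=30, quota_qps=1.0):
    """Estimate monthly call volume and request rate, assuming one call per comment."""
    average_qps = comments_per_day / SECONDS_PER_DAY
    peak_qps = average_qps * peak_factor  # busy hours run hotter than the average
    return {
        "calls_per_month": comments_per_day * days_per_month,
        "average_qps": average_qps,
        "peak_qps": peak_qps,
        "fits_quota": peak_qps <= quota_qps,
    }


print(estimate_load(8_640))
print(estimate_load(86_400))
```

At 86,400 comments a day the average works out to exactly one request per second, so any busy period would exceed a one-per-second limit. That’s the kind of result that tells you to ask for more capacity before launch.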

Hands-On Experience / Use Cases

Reading about features is one thing.

Seeing it in action is another.

How does Perspective API actually play out in the real world?

Let’s look at some scenarios where it’s a game-changer for Security and Moderation.

Large News Websites:

Comment sections on news sites can be brutal.

They attract high volume and often heated debates.

Manual moderation is impossible at scale.

Publishers use Perspective API to pre-screen comments.

Comments below a certain toxicity threshold might be automatically approved.

Comments above that threshold get sent to a human moderation queue.

The highest-scoring comments might be held back entirely pending human review.

This allows millions of comments to be processed daily.

Ensuring that civil discussion is facilitated.

While potentially harmful or abusive comments are caught before they derail the conversation.

It improves the quality of the comment section significantly.

Gaming Platforms:

In-game chat and forums are notorious for toxicity.

Slurs, harassment, cheating discussions.

Real-time moderation is key because games move fast.

Gaming companies integrate Perspective API into their chat systems.

Messages are scored as they are sent.

High-scoring messages can trigger automated actions: muting a player, issuing a warning, or flagging the player’s account for review.

They can use attribute scoring to differentiate between types of harm (e.g., profanity vs. targeted harassment).

This creates a safer environment for players.

Reducing player-to-player abuse.

And making the gaming community more welcoming.

It’s a direct application of Inappropriate Content Flagging for better player retention.

Online Communities and Forums:

Any platform where users interact.

Discussion forums, online courses with discussion boards, membership sites.

Maintaining a positive community culture is vital.

Community managers use Perspective API to help monitor posts and comments.

Flagging discussions that are getting out of hand due to toxicity.

Identifying users who are consistently posting harmful content.

This helps moderators intervene early.

De-escalate situations.

And enforce community guidelines consistently.

It allows community managers to spend more time fostering positive interactions.

And less time cleaning up messes.

Social Media Platforms:

The biggest challenge.

Billions of posts and comments daily.

Perspective API, or similar internal tools built on the same principles, is essential here.

They use it for detecting harassment, hate speech, bullying, and other harmful content at massive scale.

Powering features like filtering replies, sorting comment feeds, and flagging content for human review teams.

While no system is perfect for social media’s complexity, AI like Perspective API is a foundational technology for any large platform trying to combat online abuse.

In all these cases, the pattern is the same:

High volume of user-generated content.

Need to identify potentially harmful parts of that content.

Need to do it fast and at scale.

Perspective API provides the automated layer to make this possible.

Allowing human moderators to focus on the trickier cases.

That’s the real-world impact.

Who Should Use Perspective API?

So, who is this tool actually for?

If you’re running a personal blog with five comments a week, probably overkill.

But if you’re dealing with user-generated content on a larger scale, pay attention.

Here’s who benefits most:

Platform Owners and Developers:

Anyone building or managing a platform where users can post text.

Forums, community sites, app comments, in-game chat, review sections.

You’re the ones who need to integrate the API into your system.

You’re responsible for setting up the calls, handling the responses, and building the moderation workflow around the API’s scores.

Moderation Team Leads and Managers:

If you manage a team of human moderators, Perspective API can be your best friend.

It allows you to triage your queues effectively.

Ensure consistency in initial flagging.

And potentially reduce the size or cost of your manual review operations as volume grows.

Gaming Companies:

As mentioned, gaming is a huge use case.

Toxicity in games drives players away.

Perspective API helps identify harassment and abuse in real-time chat.

Keeping communities safer and more welcoming.

News and Media Publishers:

Managing comment sections is a major challenge.

They use Perspective API to filter and manage comments on articles.

Promoting civil discussion while filtering out harmful noise.

Anyone Building Large Online Communities:

From large-scale forums to educational platforms or marketplace review sections.

If user comments and contributions are a core part of your platform.

And you struggle to keep up with manual moderation.

Perspective API offers a scalable solution for Inappropriate Content Flagging.

Essentially, if you have user-generated text content.

If that content volume is significant.

And if dealing with toxicity and harmful content is a problem for you.

Then Perspective API is a tool you should seriously look into.

It’s built for scale and consistency.

Solving a real problem in Security and Moderation.

And it can save you a ton of headaches (and money).

How to Make Money Using Perspective API

Okay, let’s flip the script a bit.

It’s a tool for platforms.

But can you use it to make money yourself?

Yes, indirectly.

Perspective API isn’t a tool you use to “create content” to sell.

It’s an infrastructure tool.

So, making money involves offering services *based* on its capabilities.

Here’s how you might do it:

- Offer Content Moderation Services:

Okay, this might sound counter-intuitive. Isn’t the tool supposed to reduce the need for humans?

Yes, for the initial pass.

But human review is still necessary for nuanced cases.

You can offer a moderation service to smaller platforms or businesses.

Use Perspective API to handle the first layer of Inappropriate Content Flagging and filtering.

Then have your team review only the flagged content.

This allows you to offer a more efficient and cost-effective moderation service than one based purely on manual review.

You charge your clients for moderation.

Your cost for the automated first pass is far lower than the labor cost of reviewing everything manually.

That’s your margin.

It leverages the API’s efficiency to make your service profitable.

- Develop Moderation Plugins/Extensions:

Many smaller platforms (like certain forum software, e-commerce sites with reviews, etc.) might not have in-house developers or the expertise to integrate an API like Perspective.

You could develop plugins or extensions for these platforms that integrate Perspective API.

Charge a fee for the plugin itself or a subscription for the service built on top of it.

You’d handle the API integration and potentially some light-touch moderation or reporting features.

This productizes the API’s functionality for a less technical audience.

It’s a way to bring sophisticated Security and Moderation capabilities to platforms that wouldn’t otherwise access them.

- Provide Consultancy on Content Moderation Strategy:

Platforms often struggle with *how* to moderate. What policies to set? How to handle false positives/negatives? How to integrate automation?

If you become an expert in using Perspective API and other moderation tools, you can offer consultancy services.

Advise companies on building their moderation stack.

Help them integrate Perspective API effectively.

Train their teams on using the data provided by the API.

Your expertise in leveraging this tool for efficient Inappropriate Content Flagging is the valuable asset here.

Charge for your time and knowledge.

Case Study Idea (Hypothetical):

Imagine a small online course provider. They have a thriving student community forum. Comments started getting a bit wild. Moderation was ad-hoc.

Enter “Sarah,” a freelance moderation specialist.

Sarah integrates Perspective API into their forum software via a custom script she wrote.

She sets up thresholds: low toxicity gets posted immediately, medium goes into a ‘likely fine’ queue Sarah checks daily, high goes into a ‘needs urgent review’ queue.

Sarah charges the course provider a monthly retainer.

Her time spent moderating is significantly reduced because the API does the initial sort.

She focuses only on the flagged content.

The course provider gets a cleaner, safer community forum.

Sarah makes a profit because the API makes her work efficient.

She might be spending a few hours a day on review, powered by the API doing the heavy lifting 24/7.

She leverages the API’s efficiency into a profitable service offering.

So, you’re not selling the API itself.

You’re selling services or products that are made better, faster, or cheaper by using the API.

That’s how you turn an infrastructure tool into a revenue stream.

Limitations and Considerations

No tool is perfect.

Perspective API is powerful, but it has its limits.

Understanding these is crucial before you rely on it completely for your Inappropriate Content Flagging.

Accuracy Isn’t 100%:

It’s a machine learning model.

It predicts based on patterns.

It will make mistakes.

It will have “false positives” (flagging harmless content as toxic).

It will have “false negatives” (missing truly toxic content).

Context is hard for AI. Sarcasm, inside jokes, nuanced discussions.

Things that humans understand easily can trip up the model.

This is why human review is still essential, especially for complex or ambiguous cases.
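You can put numbers on false positives and false negatives by scoring a sample of comments your moderators have already labeled. This sketch computes precision and recall at a given threshold. The sample data is made up, and the function name is illustrative.

```python
def precision_recall(labeled, threshold):
    """Compute precision and recall for flagging at `threshold`.

    `labeled` is a list of (score, human_says_toxic) pairs.
    Returns None for a metric when it is undefined (nothing flagged, or nothing toxic).
    """
    true_pos = sum(1 for score, toxic in labeled if score >= threshold and toxic)
    false_pos = sum(1 for score, toxic in labeled if score >= threshold and not toxic)
    false_neg = sum(1 for score, toxic in labeled if score < threshold and toxic)
    flagged = true_pos + false_pos
    actually_toxic = true_pos + false_neg
    precision = true_pos / flagged if flagged else None
    recall = true_pos / actually_toxic if actually_toxic else None
    return precision, recall


# Made-up review sample: (API score, moderator's verdict).
sample = [(0.95, True), (0.85, False), (0.75, True), (0.40, True), (0.10, False)]
for threshold in (0.7, 0.9):
    print(threshold, precision_recall(sample, threshold))
```

Raising the threshold usually trades recall for precision: fewer harmless comments get flagged, but more toxic ones slip through. Running this on your own labeled data shows where that trade-off sits for your community.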

Bias:

AI models learn from data.

If the data used to train the model contains biases, the model can perpetuate them.

Perspective API’s models have shown bias in the past.

For example, flagging comments mentioning certain identity terms (like LGBTQ+ related words) as more toxic, even when they weren’t.

Jigsaw is actively working to mitigate these biases.

They release models designed to reduce this.

But it’s a known challenge in AI.

You need to be aware of potential bias and monitor for it in how the API performs on your specific content.
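One simple way to monitor for this is to compare false positive rates across groups of comments, for example harmless comments that mention identity terms versus harmless comments that don’t. This is a sketch under that assumption. The group labels and audit data are invented, and a real audit needs a much larger, carefully labeled sample.

```python
from collections import defaultdict


def false_positive_rates(samples, threshold=0.7):
    """Per group, the share of human-judged harmless comments the threshold would flag.

    `samples` is an iterable of (group, score, human_says_toxic) tuples.
    """
    flagged = defaultdict(int)
    harmless = defaultdict(int)
    for group, score, is_toxic in samples:
        if is_toxic:
            continue  # only harmless comments can be false positives
        harmless[group] += 1
        if score >= threshold:
            flagged[group] += 1
    return {group: flagged[group] / total for group, total in harmless.items()}


# Made-up audit sample for illustration only.
audit = [
    ("identity_term", 0.82, False),
    ("identity_term", 0.30, False),
    ("identity_term", 0.95, True),
    ("no_identity_term", 0.10, False),
    ("no_identity_term", 0.20, False),
    ("no_identity_term", 0.91, True),
]
print(false_positive_rates(audit))
```

A large gap between groups is a signal to adjust thresholds, route those comments to human review, or both.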

Integration Requires Technical Skills:

This isn’t a plug-and-play tool for end-users.

It’s an API.

You need developers to integrate it into your platform’s backend.

You need to write code to send text to the API, receive the scores, and then build logic based on those scores (flagging, hiding, sending for review, etc.).

This isn’t a barrier if you have development resources.

But it means smaller operations without technical staff can’t just sign up and start using it without help.

Defining “Toxicity” and Policies:

The API scores based on a general definition of toxicity.

But your platform’s specific policies might differ.

What’s acceptable in one community might be banned in another.

You need to clearly define your own moderation policies.

And then map the API scores and attributes to those policies.

What score triggers a warning? What score triggers a ban?

This mapping requires careful thought and calibration.

The API gives you data, but you have to decide how to act on it.
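Mapping scores to policy can be as plain as an ordered table of rules. In this sketch the first matching rule wins. Every attribute, threshold, and action name is a hypothetical example, since the actual values are a policy decision only you can make.

```python
# Hypothetical policy: (attribute, minimum score, action), checked top to bottom.
POLICY = [
    ("THREAT", 0.8, "suspend_and_escalate"),
    ("IDENTITY_ATTACK", 0.8, "hide_and_review"),
    ("TOXICITY", 0.9, "hide_and_review"),
    ("TOXICITY", 0.7, "warn_user"),
]


def decide(scores, policy=POLICY):
    """Return the action of the first rule whose threshold the comment meets."""
    for attribute, minimum, action in policy:
        if scores.get(attribute, 0.0) >= minimum:
            return action
    return "allow"


print(decide({"TOXICITY": 0.95, "THREAT": 0.85}))
print(decide({"TOXICITY": 0.75}))
print(decide({"TOXICITY": 0.20}))
```

Because the policy is data rather than code, moderators can review and adjust it without a developer rewriting the logic.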

Usage Limits Can Bite:

Access is governed by a requests-per-second quota.

If your content volume explodes, you can hit that limit.

You need to monitor your usage and request more capacity before you need it.

And plan for what happens when a comment can’t be scored in time.

Perspective API is a powerful tool for Security and Moderation, specifically for Inappropriate Content Flagging.

But it’s a tool to augment, not replace, human judgment and careful policy design.

Be aware of its limitations, plan for integration, and use it wisely.

Final Thoughts

Alright, let’s wrap this up.

Dealing with inappropriate content online is tough.

It’s necessary work if you’re building any kind of online community or platform.

Manual methods don’t scale.

They’re expensive, slow, and burn out your team.

AI tools like Perspective API are not the silver bullet.

But they are a significant step forward.

They automate the tedious, high-volume task of sifting through mountains of content.

They provide a consistent, data-driven first layer of analysis.

Allowing human moderators to focus on the difficult, nuanced decisions only they can make.

Perspective API’s core strength lies in scoring conversational toxicity and specific harmful attributes.

Its real-time capability and multi-language support make it suitable for global, high-volume platforms.

The benefits are clear: time savings, improved consistency, reduced moderator stress, and data for better policy.

Yes, there are limitations.

It’s not perfect, requires technical integration, and comes with usage quotas.

You need to understand its biases and plan your policies carefully.

But if you’re serious about Security and Moderation on your platform.

And you’re dealing with a volume of user content that’s becoming unmanageable manually.

Perspective API is absolutely worth exploring.

It’s a tool built for this specific problem.

Developed by a team dedicated to combating online harassment.

It’s not just about removing bad stuff.

It’s about creating better online spaces.

More welcoming, more productive, less hateful.

Perspective API can help you get there.

It’s an investment in your community health and the sustainability of your platform.

If you’re nodding your head, thinking “Yep, that’s my problem,” then the next step is simple.

Check out the official documentation.

Look at the quota limits.

Talk to your development team about integration.

See if this tool is the right fit to take your Inappropriate Content Flagging to the next level.

Visit the official Perspective API website

Frequently Asked Questions

1. What is Perspective API used for?

Perspective API is used to analyze text, like online comments and messages, to predict how likely they are to be perceived as toxic or harmful by humans. It helps platforms identify and manage inappropriate content at scale.

2. Is Perspective API free?

According to Jigsaw’s documentation, Perspective API is free to use. Usage is capped by a quota, about one query per second by default, and higher volumes require requesting a quota increase.

3. How does Perspective API compare to other AI tools?

While other AI tools offer general natural language processing, Perspective API is specifically focused and trained on identifying conversational toxicity and various attributes of harmful speech. This specialization is its key differentiator for content moderation tasks.

4. Can beginners use Perspective API?

Perspective API is an API, meaning it requires technical integration into a platform’s backend. It’s not a simple web application for everyday users. Developers are needed to implement and configure it.

5. Does the content created by Perspective API meet quality and optimization standards?

Perspective API does not “create” content. It analyzes existing text to provide toxicity scores. The quality and optimization of the original content are independent of the API’s function. It’s a tool for moderation and filtering, not content generation.

6. Can I make money with Perspective API?

Yes, you can make money indirectly by using Perspective API to power services like efficient content moderation for clients, developing moderation plugins for platforms, or offering consultancy on content moderation strategies. You leverage the API’s efficiency to provide a valuable service.