
Is Hive Moderation Right for Your Spam Detection and Content Moderation Needs?

Alright, let’s talk Security and Moderation.

Specifically, the headaches that come with Spam Detection and Content Moderation.

It’s a massive time sink, right?

Hours spent sifting through junk, dealing with inappropriate stuff, just trying to keep your platform clean.

Manual review?

It’s a treadmill to nowhere.

You clear the queue, take a breath, and bam – more spam, more questionable content.

It never stops.

And let’s be real, humans make mistakes.

Stuff gets missed.

Good content gets flagged.

It’s inconsistent.

The rise of AI tools is changing things.

They’re popping up everywhere, promising solutions to these exact problems.

One name you hear a lot in this space?

Hive Moderation.

People in Security and Moderation are looking at it.

Asking the big questions.

Can this thing actually handle the sheer volume of spam and content that needs checking?

Will it save time?

Will it be accurate?

Is it just another piece of software that adds complexity instead of simplifying things?

This article is going to cut through the noise.

We’re looking at Hive Moderation, specifically for Spam Detection and Content Moderation.

Is it the real deal?

Is it worth your time and money?

Let’s find out.

Table of Contents

- What is Hive Moderation?

- Key Features of Hive Moderation for Spam Detection and Content Moderation

- Benefits of Using Hive Moderation for Security and Moderation

- Pricing & Plans

- Hands-On Experience / Use Cases

- Who Should Use Hive Moderation?

- How to Make Money Using Hive Moderation

- Limitations and Considerations

- Final Thoughts

- Frequently Asked Questions

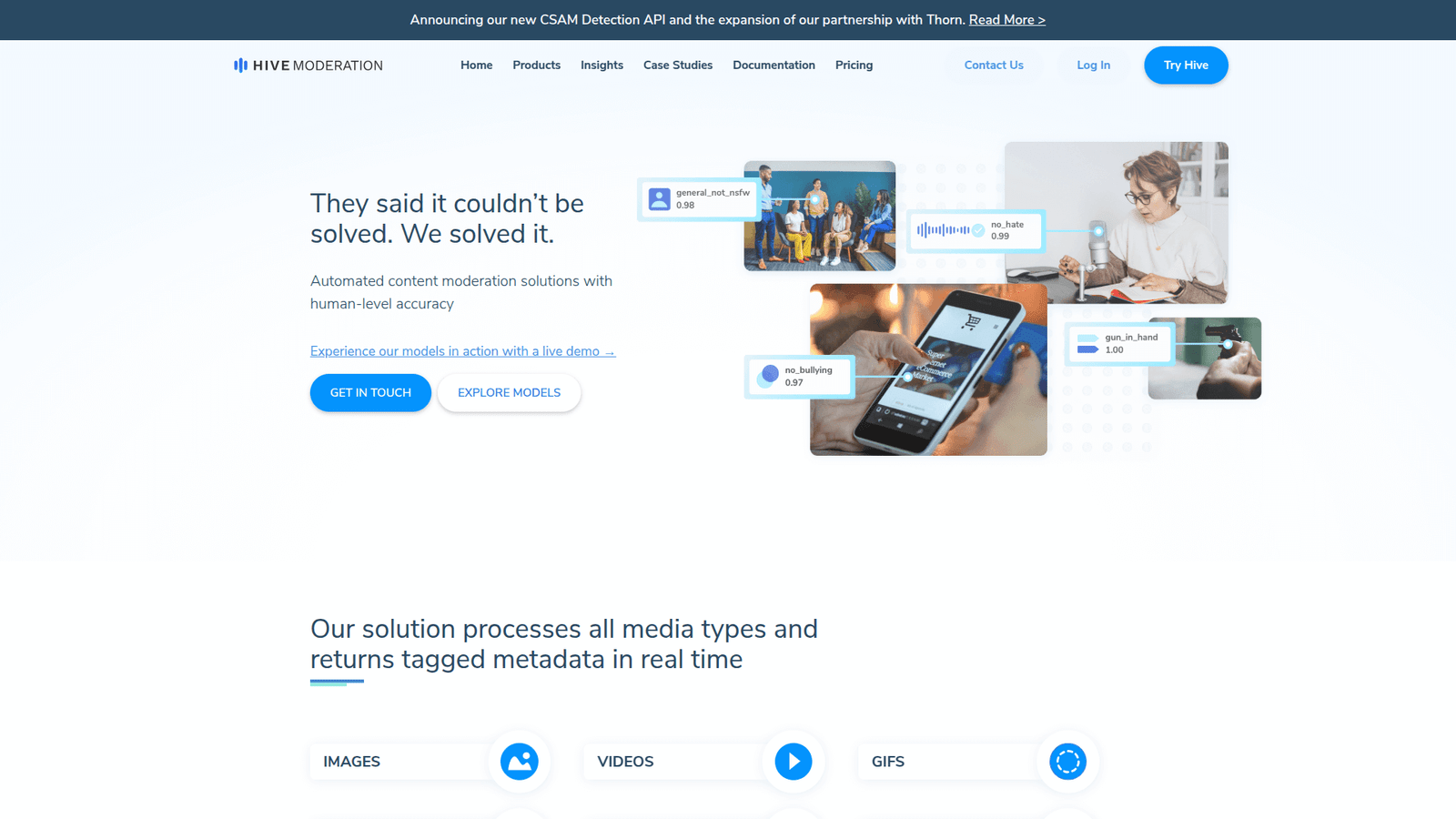

What is Hive Moderation?

So, what exactly is Hive Moderation?

Think of it as an AI powerhouse built for checking content.

Its main job?

To help platforms, businesses, and communities deal with the massive amount of user-generated content they receive.

This includes text comments, images, videos, and audio files.

The goal is simple: automate the process of finding and flagging content that violates rules or policies.

This is huge for anyone managing online platforms.

Whether it’s a social media site, a marketplace, a forum, or even just the comments section on a blog.

The volume can be insane.

And letting bad stuff slip through is a huge risk.

It can damage your brand, alienate users, and even lead to legal problems.

Hive Moderation uses advanced machine learning models.

These models are trained on massive datasets to recognise patterns associated with different types of undesirable content.

This isn’t just about finding swear words.

It’s about identifying complex issues like hate speech, harassment, graphic violence, fraudulent activity, and yes, lots and lots of spam.

The tool provides APIs, which means developers can integrate its capabilities directly into their own applications and platforms.

This makes it a backend solution for managing content at scale.

It’s designed for businesses that need robust, automated Spam Detection and Content Moderation capabilities.

It’s not really a tool for individuals writing blog posts or social media updates, unless you’re building the platform itself.

It’s aimed at developers, product managers, and operations teams responsible for platform health and safety.

It offers various APIs tailored to different content types and moderation needs.

You can use separate models to check images, videos, text, or audio for specific types of policy violations.

The platform processes the content, analyses it using its AI models, and returns a structured response.

This response typically includes a probability score for different categories of harmful content.

Based on these scores, you can set up automated rules.

For example, if a piece of content scores high for spam, you can automatically delete it or flag it for human review.

If it scores high for graphic violence, you might remove it instantly.

This automates the first pass of moderation, freeing up human moderators to focus on borderline cases that require nuanced judgment.
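To make the score-to-action idea concrete, here is a minimal sketch. The response shape (a plain dict of category name to probability), the category names, and the threshold values are all assumptions for illustration, not Hive’s actual response schema, so check the official API documentation for the real format.

```python
# Hypothetical sketch: map per-category scores to a moderation action.
# The score dict shape, category names, and thresholds are assumptions.

# Rules are checked in order; the first rule whose threshold is met wins.
RULES = [
    ("graphic_violence", 0.90, "remove"),
    ("spam", 0.90, "delete"),
    ("spam", 0.60, "human_review"),
]


def decide(scores: dict[str, float]) -> str:
    """Return the action for the first matching rule, or "allow"."""
    for category, threshold, action in RULES:
        if scores.get(category, 0.0) >= threshold:
            return action
    return "allow"


print(decide({"spam": 0.97, "graphic_violence": 0.01}))  # delete
print(decide({"spam": 0.72}))                            # human_review
print(decide({"spam": 0.05}))                            # allow
```

Keeping the rules in a data table rather than hard-coding them makes it easier to adjust thresholds later without touching the decision logic.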

It significantly speeds up the moderation process.

Instead of a human having to look at every single piece of content, they only see the stuff the AI isn’t 100% sure about.

This means you can handle a much larger volume of content with the same team size.

It brings consistency to moderation decisions.

AI models apply the same rules to every piece of content, reducing the variability that can occur with human reviewers.

While it requires technical integration, the promise is a more efficient, scalable, and consistent approach to keeping online platforms safe and clean.

Key Features of Hive Moderation for Spam Detection and Content Moderation

Alright, let’s break down what Hive Moderation actually does, specifically for sniffing out spam and bad content.

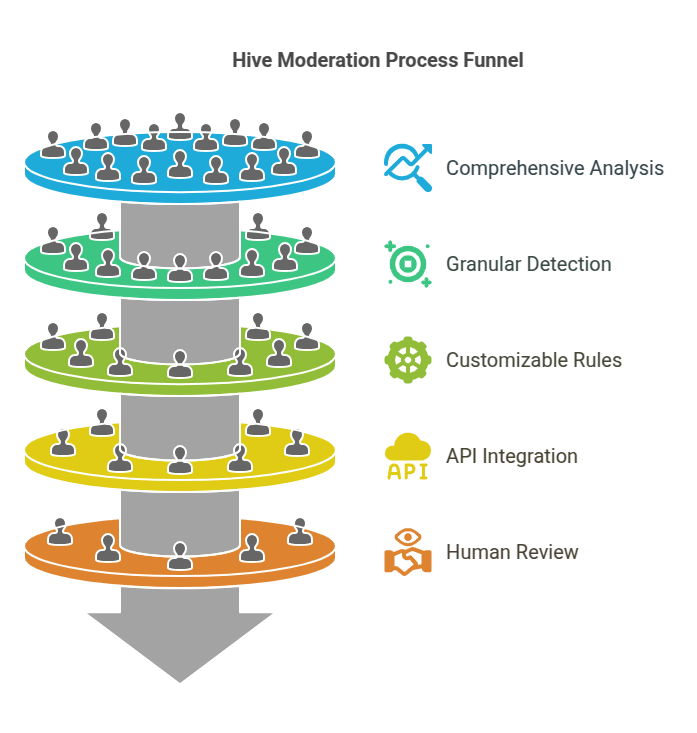

- Comprehensive Content Analysis: This isn’t just about checking text. Hive Moderation handles images, videos, audio, and even livestreams. Why does this matter for spam and moderation? Spammers and bad actors use every medium. They embed links in images, hide messages in video descriptions, or use audio clips for malicious purposes. A tool that only checks text misses a massive amount of harmful content. Hive’s ability to scan multiple types of content simultaneously means a much more robust defense against spam and policy violations. It ensures you’re not just playing whack-a-mole with one content type while ignoring others. It gives you a unified system to catch issues across your entire platform.

- Granular Policy Detection: Hive offers models trained to detect specific types of harmful content beyond generic spam. We’re talking hate speech, harassment, graphic content, child exploitation material, illegal drugs, weapons, and more. For Spam Detection and Content Moderation, this granular approach is key. You can set different thresholds and actions for different violation types. Spam might get auto-deleted, while hate speech might trigger an immediate ban. This level of detail allows platforms to enforce specific community guidelines accurately and consistently. It goes beyond simple keyword matching, understanding context and visual cues.

- Customizable Rule Engine: You can configure Hive Moderation’s responses based on the scores it returns. This is crucial because not all platforms have the exact same rules. You can define what percentage threshold for a specific category (like spam or hate speech) triggers a certain action (like auto-flagging, soft-blocking, or immediate removal). This flexibility means you can tailor the AI’s behavior to match your specific community standards and risk tolerance. It’s not a one-size-fits-all solution; you can fine-tune it. This allows for a more nuanced approach than simple blocklists, which are easily bypassed.

- API-First Design: Hive Moderation is designed to be integrated directly into existing workflows and platforms via APIs. This isn’t a standalone application you log into every morning to check. It works in the background, analyzing content as it’s uploaded or posted. For large platforms dealing with millions of pieces of content daily, this automation is non-negotiable. It enables real-time analysis and action, preventing harmful content from even becoming visible to users in many cases. This saves immense amounts of time and resources compared to manual review queues.

- Human Review Integration: While AI does the heavy lifting, Hive also supports integrating human review into the workflow. Content that the AI flags as potentially problematic but that falls below a certain confidence threshold can be routed to a human moderation team. This creates a hybrid system that leverages the speed and scalability of AI for clear-cut cases and the judgment and nuance of humans for complex or ambiguous situations. This helps reduce false positives and makes it less likely that genuinely problematic content slips through the cracks. It’s the best of both worlds for effective moderation.

- Training Data and Continuous Improvement: Hive maintains large datasets of labeled content used to train and improve its AI models. The quality and breadth of the training data are critical for accuracy. As new types of spam or harmful content emerge, the models can be updated to recognise them. This means the system gets smarter over time and adapts to the ever-evolving tactics of spammers and bad actors. It’s not a static tool; it’s continuously learning and improving its detection capabilities, staying relevant in the face of new threats.

- Scalability: The platform is built to handle massive volumes of content. This is essential for rapidly growing platforms or established ones with millions of users. You don’t want your moderation system to become a bottleneck. Hive’s infrastructure is designed to process content requests at scale, ensuring that moderation keeps pace with user activity. This prevents backlogs and maintains a clean user experience even during peak periods. Scalability is a non-negotiable requirement for modern online platforms.
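The rule engine and human review features described above boil down to two thresholds per category: one for "send to a human" and a higher one for "act automatically". The sketch below shows one way to wire that up on your side. The `Policy` class, the category names, and every number in it are hypothetical and not part of any Hive SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    review_at: float   # scores at or above this go to a human queue
    auto_at: float     # scores at or above this are actioned automatically
    auto_action: str   # what the automatic action is


# Hypothetical per-category policies; tune these to your own risk tolerance.
POLICIES = {
    "spam": Policy(review_at=0.60, auto_at=0.90, auto_action="delete"),
    "hate_speech": Policy(review_at=0.40, auto_at=0.95, auto_action="remove_and_ban"),
}


def route(scores: dict[str, float]) -> tuple[str, list[str]]:
    """Return (decision, categories that triggered it).

    An automatic action in any category outranks human review, which
    outranks "allow". If several categories qualify for automatic action,
    the first one in POLICIES order decides which action is returned.
    """
    auto, review = [], []
    for category, policy in POLICIES.items():
        score = scores.get(category, 0.0)
        if score >= policy.auto_at:
            auto.append(category)
        elif score >= policy.review_at:
            review.append(category)
    if auto:
        return POLICIES[auto[0]].auto_action, auto
    if review:
        return "human_review", review
    return "allow", []


print(route({"spam": 0.95}))                       # ('delete', ['spam'])
print(route({"spam": 0.10, "hate_speech": 0.50}))  # ('human_review', ['hate_speech'])
```

Note the lower review threshold for hate speech in this example: for higher-risk categories you would typically rather over-send to humans than miss something.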

These features combine to create a powerful system for tackling the challenge of Spam Detection and Content Moderation.

It’s not just about finding obvious junk.

It’s about catching sophisticated spam, identifying harmful content across different formats, and doing it all at scale.

This frees up human moderators to focus on the tough calls.

And keeps your platform safer and cleaner for everyone.

Which is the whole point, right?

Benefits of Using Hive Moderation for Security and Moderation

Okay, so you know what it is and what it does.

But what’s in it for you?

Why should someone responsible for Security and Moderation even look at Hive Moderation?

Simple.

It solves real problems.

Here’s the breakdown of the core benefits:

Massive Time Savings: This is probably the biggest one. Manual moderation is a black hole for time. Your team spends hours every day sifting through content, much of which is clearly spam or violates policy. Hive automates the initial review. It flags the vast majority of clear-cut violations automatically. This means your human moderators only deal with the complex cases. Imagine giving your team back hours every single day. They can focus on edge cases, policy refinement, or proactive safety initiatives instead of being buried under a mountain of junk. Time is money, and Hive saves a ton of it.

Improved Consistency and Accuracy: Human judgment is subjective. What one moderator flags, another might miss. This inconsistency leads to user frustration and creates loopholes for spammers. AI models, once trained, apply the same rules every single time. This leads to far greater consistency in moderation decisions. While not 100% perfect, AI can catch patterns and volume that humans simply can’t. For high-volume platforms, AI accuracy, especially when combined with human review, often surpasses manual methods alone. This builds user trust because they know the rules are being applied fairly.

Enhanced Scalability: As your platform grows, so does the volume of content. Hiring enough human moderators to keep up is incredibly expensive and logistically challenging. Hive Moderation scales automatically with your content volume. Whether you have a thousand users or a million, the AI can process the content. This allows you to grow your platform without the moderation bottleneck. It’s a scalable solution for a scalable problem. This is critical for businesses aiming for rapid growth.

Faster Response Times: Harmful content can spread incredibly quickly online. Manual review queues mean a delay between content being posted and it being moderated. This delay can cause significant damage. Hive processes content in near real-time. Clear violations can be flagged and removed within seconds of being posted. This drastically reduces the exposure time for harmful content, protecting your users and your brand reputation immediately. Speed matters in moderation.

Reduced Burnout for Moderation Teams: Moderating harmful content is emotionally draining work. Dealing with graphic images, hate speech, and harassment takes a toll. By automating the review of the most egregious and high-volume content (like spam), Hive reduces the exposure of your human moderators to the worst material. They can focus on more challenging, less repetitive tasks, leading to less burnout and higher job satisfaction. A healthier team is a more effective team.

Better User Experience: A platform overrun with spam and harmful content is a bad experience for everyone. Users leave. By using Hive Moderation for effective Spam Detection and Content Moderation, you create a cleaner, safer environment. This encourages positive user interaction and retention. A clean platform attracts more users and keeps them coming back.

Cost Efficiency: While there’s an investment in integrating and using Hive, the cost is often significantly lower than hiring and managing a large team of human moderators, especially as volume increases. You save on salaries, benefits, training, and management overhead. Over time, the operational savings can be substantial, making it a smart financial decision for scalable moderation.

These benefits aren’t theoretical.

They translate directly into a healthier, more efficient, and more scalable operation for anyone in charge of keeping an online platform clean.

It’s moving from reactive damage control to a proactive, automated defense system.

And that’s a game-changer.

Pricing & Plans

Alright, let’s talk money.

Does Hive Moderation cost a fortune?

How do they charge?

Hive Moderation uses a usage-based pricing model.

This means you pay based on the amount of content you process through their APIs.

It’s not a flat monthly fee based on user numbers or a subscription with rigid tiers that don’t fit your needs.

This model is common for API-based services and can be cost-effective if your usage fluctuates.

They typically price based on ‘units’ of content processed.

A unit might be one image analysis, one minute of video analyzed, one text submission, or one minute of audio.

The exact definition of a unit and the cost per unit vary depending on the specific API you are using (e.g., image moderation vs. text moderation vs. video moderation).

Different detection models might also have different costs.

For example, detecting basic spam in text might be cheaper than detecting complex hate speech in a video.

Hive offers volume discounts.

The more content you process, the lower the cost per unit becomes.

This makes it more economical for larger platforms with high volume.
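If you want to ballpark a monthly bill before talking to sales, a few lines of arithmetic go a long way. The sketch below assumes graduated tiers, where each rate applies only to the units inside that tier. Both the tier boundaries and the per-unit rates are invented for illustration and are not Hive’s actual prices.

```python
# Hypothetical graduated volume pricing. Each tier's rate applies only to
# the units that fall inside that tier. All numbers are made up.
TIERS = [
    (100_000, 0.0030),       # first 100k units
    (1_000_000, 0.0020),     # units 100,001 to 1,000,000
    (float("inf"), 0.0010),  # everything above 1M
]


def monthly_cost(units: int) -> float:
    """Estimate the monthly cost for a given number of processed units."""
    cost = 0.0
    lower = 0
    for upper, rate in TIERS:
        if units <= lower:
            break
        in_tier = min(units, upper) - lower
        cost += in_tier * rate
        lower = upper
    return round(cost, 2)


print(monthly_cost(50_000))     # 150.0
print(monthly_cost(250_000))    # 600.0
print(monthly_cost(2_000_000))  # 3100.0
```

In this made-up example the effective rate drops from 0.0030 per unit at 50k units to about 0.0016 per unit at 2M units, which is the volume-discount effect in action.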

You’ll need to check their official pricing page for the exact current rates, as these can change.

They usually have a pricing calculator or provide custom quotes for very high-volume users.

Is there a free plan?

Hive typically offers a free tier or free credits for testing and development.

This allows you to integrate the APIs and test them with your content before committing to significant spending.

The free tier usually has limits on the number of units you can process per month.

For production use, you will move to a paid model based on your actual usage.

How does it compare to alternatives?

Comparing AI moderation pricing is tricky because different services offer different models and charge in different ways.

Some might have subscription tiers, others usage-based like Hive.

The key is to compare the cost per relevant unit of work for the specific types of moderation you need.

Factors like accuracy, speed, and the breadth of content types supported also play a huge role in the value proposition, not just the sticker price per unit.

A cheaper service that misses a lot of harmful content isn’t actually cheaper in the long run due to the damage it causes.

Hive is generally considered competitive in the AI moderation space, particularly for platforms needing comprehensive coverage across different content types.

For small projects or very low volume, the usage-based model might feel slightly less predictable than a fixed monthly fee, but for anything with scaling content, it aligns costs directly with value delivered.

The investment is in integrating the API and then the ongoing cost based on how much content you need to check.

It’s a cost that grows with your platform’s activity.

If you have significant content volume that requires moderation, Hive’s model is built for that scale.

You’re paying for powerful AI that works constantly, freeing up expensive human time.

This is where the cost savings become clear compared to expanding a manual team.

Get those free credits, test it out with your specific content types, and see what your estimated usage costs would look like.

That’s the smart way to evaluate if the pricing fits your budget and projected needs.

Hands-On Experience / Use Cases

Okay, enough with the theory.

How does Hive Moderation actually work in the real world?

Let’s look at some practical examples or simulated use cases.

Imagine you run a busy online community platform.

Users are posting text comments, uploading profile pictures, and sharing videos.

Spam is a constant issue – phishing links, unsolicited ads, duplicate posts.

You also get users posting inappropriate images, sharing videos with copyrighted music, or leaving hateful comments.

Before Hive, your team manually reviewed flags reported by users.

This was slow, reactive, and missed a lot.

You decide to integrate Hive Moderation.

When a user posts a comment, the text is sent to the Hive Text Moderation API.

It returns scores for categories like spam, hate speech, harassment, and profanity.

You’ve configured your system:

- Spam score > 90%: Delete comment automatically.

- Hate speech score > 80%: Flag for immediate human review and temporarily hide the comment.

- Profanity score > 70%: Censor specific words.
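Here is roughly what those three comment rules could look like in code. This is a sketch under assumptions: the scores arrive as a dict of probabilities between 0 and 1, the category names match the ones used in this walkthrough, and the censored word list is a stand-in you would replace with your own.

```python
import re

# Hypothetical word list; a real system would use a maintained dictionary.
CENSORED_WORDS = {"darn", "heck"}


def censor(text: str) -> str:
    """Replace each listed word with asterisks of the same length."""
    def mask(match):
        word = match.group(0)
        return "*" * len(word) if word.lower() in CENSORED_WORDS else word
    return re.sub(r"[A-Za-z']+", mask, text)


def handle_comment(text: str, scores: dict[str, float]) -> dict:
    """Apply the three comment rules, most severe first."""
    if scores.get("spam", 0.0) > 0.90:
        return {"action": "delete", "visible": False, "text": None}
    if scores.get("hate_speech", 0.0) > 0.80:
        # Hidden while it waits in the human review queue.
        return {"action": "human_review", "visible": False, "text": text}
    if scores.get("profanity", 0.0) > 0.70:
        return {"action": "censor", "visible": True, "text": censor(text)}
    return {"action": "publish", "visible": True, "text": text}


print(handle_comment("Well heck, that is great", {"profanity": 0.8})["text"])
# Well ****, that is great
```

The order of the checks matters: a comment that is both spam and profane gets deleted, not censored.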

When a user uploads a profile picture, the image is sent to the Hive Image Moderation API.

It checks for things like nudity, graphic content, and hate symbols.

- Nudity score > 95%: Reject image and notify user.

- Hate symbol score > 85%: Reject image and flag user account for review.

When a user uploads a video, it goes to the Hive Video Moderation API.

This API checks frames and audio over time.

It can detect visual content violations and copyrighted music in the audio track, and can even identify logos of terrorist organizations.

- Graphic violence detected: Auto-remove video.

- Copyrighted music detected: Mute audio or flag for review.

- Spam text overlays detected: Flag video.
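Video is different from text and images because the results are spread over time. A simple way to handle that is to collect the timestamps where any category crosses a threshold, then pick an action from what was found. The input shape below (one timestamp and score dict per sampled frame or audio window) and the category names are assumptions for the sake of the example, not Hive’s actual output format.

```python
# Hypothetical input: one (timestamp_seconds, scores) pair per sampled frame
# or audio window. The shape and category names are assumptions.
Frame = tuple[float, dict[str, float]]


def flagged_timestamps(frames: list[Frame], threshold: float = 0.9) -> dict[str, list[float]]:
    """Return, per category, the timestamps whose score meets the threshold."""
    hits: dict[str, list[float]] = {}
    for timestamp, scores in frames:
        for category, score in scores.items():
            if score >= threshold:
                hits.setdefault(category, []).append(timestamp)
    return hits


def video_action(frames: list[Frame]) -> str:
    """Pick one action for the whole video, most severe category first."""
    hits = flagged_timestamps(frames)
    if "graphic_violence" in hits:
        return "remove"
    if "copyrighted_music" in hits:
        return "mute_audio"
    if "spam_overlay" in hits:
        return "flag"
    return "allow"


sample = [
    (0.0, {"graphic_violence": 0.02}),
    (1.0, {"copyrighted_music": 0.97}),
    (2.0, {"copyrighted_music": 0.95, "spam_overlay": 0.93}),
]
print(flagged_timestamps(sample))
# {'copyrighted_music': [1.0, 2.0], 'spam_overlay': [2.0]}
print(video_action(sample))  # mute_audio
```

Keeping the timestamps around is handy for human reviewers too, since they can jump straight to the flagged moments instead of watching the whole clip.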

What’s the result of this integration?

Spam is caught and removed almost instantly, often before any user sees it.

This drastically reduces the amount of junk on the platform.

The volume of content requiring human review drops significantly.

Your moderators spend less time on obvious stuff and more time on the tricky edge cases.

Consistency in applying rules improves because the AI handles the clear-cut cases uniformly.

The platform feels cleaner and safer for users.

Another use case: a large online marketplace.

Sellers post product listings with images and descriptions.

Spam listings (selling prohibited items, pyramid schemes) and misleading product images are rampant.

Hive Moderation can scan listing titles and descriptions for spammy keywords or phrases, and check product images for restricted items (like weapons or counterfeit goods).

Listings that score high for spam or prohibited items are automatically rejected or sent to a priority human review queue.

This protects buyers and maintains the integrity of the marketplace.
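The "priority human review queue" part is worth a quick sketch, because it is something you build on your side rather than something the API hands you. The version below combines a listing’s text and image scores by taking the worst one, then either rejects, queues, or publishes. The thresholds, category names, and the `ReviewQueue` class are all hypothetical.

```python
import heapq
import itertools


class ReviewQueue:
    """Max-priority queue: the highest-scoring listing is reviewed first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps insertion order

    def push(self, listing_id: str, score: float) -> None:
        # heapq is a min-heap, so store the negated score.
        heapq.heappush(self._heap, (-score, next(self._counter), listing_id))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


def triage(listing_id: str, text_scores: dict[str, float],
           image_scores: dict[str, float], queue: ReviewQueue,
           reject_at: float = 0.95, review_at: float = 0.60) -> str:
    """Reject, queue for review, or publish based on the worst score."""
    worst = max([*text_scores.values(), *image_scores.values()], default=0.0)
    if worst >= reject_at:
        return "reject"
    if worst >= review_at:
        queue.push(listing_id, worst)
        return "review"
    return "publish"


queue = ReviewQueue()
print(triage("listing-1", {"spam": 0.70}, {}, queue))                 # review
print(triage("listing-2", {"spam": 0.20}, {"weapons": 0.90}, queue))  # review
print(triage("listing-3", {"spam": 0.99}, {}, queue))                 # reject
print(queue.pop())  # listing-2
```

Sorting the queue by score means moderators see the riskiest listings first, which matters when the queue is longer than the team can clear in a day.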

Think about online gaming platforms.

Toxic behaviour in voice chat and text chat is a huge problem.

Hive Audio and Text Moderation APIs can analyse communications in real time, detecting hate speech, harassment, or threats so the platform can act instantly by muting users, issuing warnings, or banning repeat offenders.

This creates a better gaming experience for everyone.
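Handling repeat offenders is platform logic you write yourself on top of the scores. A minimal sketch of an escalation ladder might look like this. The ladder steps, the single shared threshold, and the in-memory counter are simplifying assumptions; a production system would persist strikes and probably let them expire.

```python
from collections import defaultdict
from typing import Optional

# Hypothetical escalation ladder: 1st violation warns, 2nd mutes,
# 3rd and later ban.
LADDER = ["warn", "mute", "ban"]


class StrikeTracker:
    def __init__(self, threshold: float = 0.85):
        self.threshold = threshold
        self.strikes = defaultdict(int)  # user_id -> number of violations

    def observe(self, user_id: str, scores: dict[str, float]) -> Optional[str]:
        """Record one message's scores; return an action if it is a violation."""
        if max(scores.values(), default=0.0) < self.threshold:
            return None
        self.strikes[user_id] += 1
        step = min(self.strikes[user_id], len(LADDER)) - 1
        return LADDER[step]


tracker = StrikeTracker()
print(tracker.observe("player-1", {"harassment": 0.91}))  # warn
print(tracker.observe("player-1", {"harassment": 0.20}))  # None
print(tracker.observe("player-1", {"threat": 0.88}))      # mute
print(tracker.observe("player-1", {"hate_speech": 0.97})) # ban
```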

In all these cases, the usability isn’t in a user interface for end-users.

It’s in the ease of integrating the APIs for developers.

The results are seen in the reduced volume of spam and harmful content making it onto the platform.

It’s working behind the scenes to keep things clean.

And that’s its true power in Security and Moderation.

Who Should Use Hive Moderation?

So, who exactly is Hive Moderation built for?

It’s not a tool for everyone.

As we’ve discussed, it’s an API-first platform.

This means it’s primarily for businesses and developers who are building or managing platforms where users can create and share content.

Here are the ideal profiles:

Social Media Platforms: Any platform where users post updates, images, videos, and comments faces massive moderation challenges. Hive is perfect for automating the first pass of content review.

Online Marketplaces: E-commerce sites where users list products need to detect fraudulent listings, prohibited items, and spam. Hive’s image, text, and video analysis is highly relevant here.

Gaming Platforms: Managing toxic chat (text and voice) and inappropriate user-generated content (like custom avatars or level designs) requires robust AI. Hive’s text, audio, and image analysis fits this need.

Forums and Community Sites: Websites with user forums or comment sections need to combat spam, hate speech, and harassment in text.

EdTech Platforms: Platforms where students or teachers share content need to ensure the environment is safe and free from inappropriate material. This is critical for protecting minors.

Dating Apps: Filtering inappropriate profile pictures and text messages is essential for user safety and experience.

Hosting Providers (User-Generated Content): Any service that hosts user-uploaded files (images, videos, documents) and needs to scan them for illegal or harmful content.

Corporate Communication Platforms: Internal tools where employees can share content might still need moderation to prevent harassment or the sharing of inappropriate material.

Businesses with Large User-Generated Review Sections: Websites featuring customer reviews need to filter out spam, fake reviews, and inappropriate language.

Essentially, if your business operates an online platform where users contribute content, and that content needs to be checked against policies (for safety, legality, or community standards), Hive Moderation is a strong candidate.

It’s particularly valuable for platforms dealing with high volume.

If you only get a handful of comments a day, manual review is probably fine.

If you get thousands or millions of pieces of content daily, you need automation.

It requires technical resources to integrate the APIs.

So, it’s for businesses with development teams capable of implementing the integration.

It’s not a plug-and-play solution for non-technical users.

If you’re a blogger writing articles or a marketer creating social media posts, this isn’t the tool for your daily tasks.

But if you are building the next social network, marketplace, or community platform, and Spam Detection and Content Moderation are critical functions, then Hive Moderation is definitely on your radar.

It’s for those who need to build scalable, efficient, and consistent moderation systems into the core of their product.

How to Make Money Using Hive Moderation

Okay, can you actually make money *using* Hive Moderation?

Not directly in the way you might make money writing articles with a text AI or creating art with an image AI.

Hive Moderation is a B2B service, primarily used by platforms themselves.

So, the money-making potential isn’t about using the tool to *create* something sellable, but about using it to *enable* a business that makes money.

Here’s how businesses using Hive Moderation can indirectly boost revenue or create business opportunities:

- Build and Offer Moderation-as-a-Service: If you are a development agency or a tech company, you could build custom platform solutions for clients (e.g., a small social network, a community forum, a marketplace). You integrate Hive Moderation into these platforms as part of your service offering. You charge your clients for building and maintaining their platform, including the integrated, automated moderation capabilities powered by Hive. Your clients get a clean, safe platform without needing in-house AI expertise, and you generate revenue from the development and service fees. This is a significant value-add you can offer.

- Improve User Retention on Your Platform: A platform overrun by spam, scams, and harassment loses users quickly. Users leave because they feel unsafe or annoyed. By implementing robust Spam Detection and Content Moderation with Hive, you create a much better user experience. Users stay longer, are more engaged, and are more likely to spend money on your platform (through subscriptions, purchases, ads, etc.). This directly impacts your platform’s profitability by increasing user lifetime value and attracting new users through positive word-of-mouth. A clean platform is a profitable platform.

- Attract and Retain High-Value Users: Some types of content are critical for specific niches but also attract spam or inappropriate behaviour. For example, a platform for artists sharing their work might need strong moderation against non-art spam or explicit content. By ensuring a clean environment using Hive, you make your platform attractive to the specific, often higher-value, users you want to target, who appreciate a professional and safe space. This can lead to premium subscriptions or other revenue streams.

- Enable New Business Models Safely: Want to add user-generated content features to an existing service? Maybe allow comments on product pages, enable user profiles with images, or even launch a mini-community within your app. The fear of moderation headaches often prevents businesses from adding these features. With Hive, you can confidently enable user-generated content, knowing you have a system to manage the risks. These new features can drive engagement and open up new revenue opportunities (like user-generated marketplaces or premium community access).

- Reduce Operational Costs (which boosts profit): While not direct revenue generation, the significant cost savings from automating moderation with Hive (compared to hiring a large manual team) directly impacts your bottom line. Lower expenses mean higher profits, even if top-line revenue stays the same. This is a crucial financial benefit for any platform business.

Consider a case study (simulated):

“How ‘CommunityConnect’ Increased Revenue by 15% After Integrating Hive Moderation”

CommunityConnect is a niche social platform.

They struggled with overwhelming spam and harassment in comments and private messages.

Their small human moderation team couldn’t keep up.

Users were complaining, and engagement was dropping.

They integrated Hive’s Text Moderation API.

Hive automatically deleted 95% of detected spam and flagged suspicious messages for priority human review.

The platform became noticeably cleaner.

User complaints about spam plummeted.

Engagement metrics (time spent on platform, interactions) increased by 20%.

Existing users became more active.

Word spread that CommunityConnect was a safe place.

New user acquisition accelerated.

With more active users and a growing user base, their ad revenue (their primary income source) increased by 15% within six months.

They didn’t make money *from* Hive, but Hive enabled them to fix core problems that were holding back user engagement and growth, directly leading to higher revenue.

So, making money with Hive Moderation is about leveraging its power to build, scale, and maintain successful online platforms where user safety and content quality are paramount.

It’s a foundational tool for businesses in the user-generated content space.

Limitations and Considerations

Nothing is perfect, right?

Hive Moderation is powerful, but it’s important to know its limits and what to consider before jumping in.

Here are some key points:

Accuracy Isn’t 100%: AI moderation models are highly accurate, but they aren’t flawless. They can produce false positives (flagging innocent content as bad) and false negatives (missing harmful content). Complex language, sarcasm, rapidly evolving slang, or highly contextual content can sometimes trip up the AI. This is why a hybrid approach, combining AI with human review for borderline cases, is essential for most platforms. Relying solely on AI for moderation decisions is risky.

Context Can Be Tricky: AI struggles with nuance and complex context that a human reviewer would readily understand. What might be acceptable language in one community (e.g., a gaming community using specific jargon) could be offensive in another. Configuring the AI thresholds, and potentially training custom models or dictionaries, is often necessary to align with your specific community standards. A generic model might not fit perfectly.

Integration Requires Technical Expertise: Hive Moderation is an API-based tool. You need developers to integrate it into your platform, handle the data flow, process the API responses, and build the moderation workflows based on the scores. This isn’t a no-code solution. If you don’t have technical resources, implementing Hive will be challenging.

Cost Scales with Usage: While potentially cheaper than massive manual teams, the usage-based pricing means costs increase directly with content volume. For platforms with unpredictable traffic spikes, managing and forecasting these costs might require careful monitoring. You need to understand your content volume and how the pricing model applies to your specific use case.

Policy Updates and Training: Your community guidelines and policies will evolve. As they do, you may need to adjust your Hive configurations and potentially work with Hive to train models on new types of content or policy violations. This isn’t a set-it-and-forget-it tool; it requires ongoing management and tuning to stay effective.

New Threats Emerge: Spammers and bad actors are constantly finding new ways to bypass moderation systems. AI models need continuous updating and training to keep up with these evolving tactics. While Hive invests in this, no AI system can instantly detect brand new forms of harmful content the moment they appear. There will always be a reactive element to fighting abuse.

Regulatory Compliance: Depending on the type of content and your user base (especially involving minors), there might be legal or regulatory requirements for content moderation that mandate certain processes or human oversight. Ensure your use of Hive complies with all relevant laws. AI is a tool to assist compliance, not necessarily guarantee it on its own.

Potential for Bias: AI models are trained on data. If the training data contains biases, the AI can perpetuate those biases in its moderation decisions. Working with a provider like Hive that is transparent about their data and actively works to mitigate bias is important. Monitoring the AI’s decisions for unintended bias is also crucial.
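Since false positives and false negatives are the first limitation on that list, it helps to measure them on your own content rather than take anyone’s word for it. One common approach is to hand-label a sample, run it through the model, and compute precision (how many flags were correct) and recall (how much bad content was caught). The sketch below uses four made-up scores and labels purely for illustration.

```python
def precision_recall(predicted: list[bool], actual: list[bool]) -> tuple[float, float]:
    """Compute precision and recall for boolean flags against true labels."""
    tp = sum(p and a for p, a in zip(predicted, actual))      # correctly flagged
    fp = sum(p and not a for p, a in zip(predicted, actual))  # false positives
    fn = sum(a and not p for p, a in zip(predicted, actual))  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up sample: model scores and human labels for four comments.
scores = [0.95, 0.85, 0.40, 0.10]
labels = [True, False, True, False]  # True means "actually spam"

predicted = [s >= 0.8 for s in scores]
print(precision_recall(predicted, labels))  # (0.5, 0.5)
```

Rerunning this at different thresholds shows the trade-off directly: lowering the threshold usually raises recall and lowers precision, which tells you how much extra load you would be pushing onto human reviewers.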

These aren’t necessarily dealbreakers, but they are realities of using AI for Spam Detection and Content Moderation.

It requires planning, ongoing effort, and a realistic understanding of what the technology can and cannot do on its own.

It’s a powerful tool, but it needs to be part of a larger, well-thought-out moderation strategy that likely includes human oversight.

Manage expectations, and you can build a really effective system.

Final Thoughts

So, where do we land on Hive Moderation for Security and Moderation, particularly Spam Detection and Content Moderation?

It’s clear that manual moderation for any platform with significant user-generated content is unsustainable.

It’s slow, inconsistent, expensive, and brutal on the human moderators.

AI-assisted moderation is the practical path forward.

Hive Moderation is one of the leading platforms offering sophisticated AI models for this exact purpose.

Its ability to handle diverse content types (text, image, video, audio) and detect granular policy violations sets it apart.

The benefits are compelling: major time savings, improved consistency, scalability, faster response times, reduced team burnout, and ultimately a better user experience, which fuels growth and revenue.

It’s not a magic bullet, though.

It requires technical integration.

It’s not 100% accurate and needs human oversight for complex cases.

Costs scale with usage.

But for platforms dealing with volume, these are manageable considerations compared to the alternative of being overwhelmed by harmful content.

If you are building or managing a platform that hosts user-generated content, and Spam Detection and Content Moderation are significant challenges, Hive Moderation is absolutely worth evaluating.

Don’t let the complexity of content moderation hold back your platform’s growth.

Automate the heavy lifting.

It’s a smart investment in the health and safety of your online community.

Think of it as building a strong foundation for scalability and positive user interaction.

If you fit the profile of a potential user, the next step is simple.

Get those free credits they offer.

Test their APIs with your specific content types.

See the accuracy for yourself.
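A quick way to check accuracy during a trial is to score a small hand-labeled sample and compute precision and recall. The sketch below assumes you have already collected the model's flag decisions and your own ground-truth labels as two parallel lists. The function name and the sample data are hypothetical.

```python
def precision_recall(predictions, labels):
    """Precision and recall for boolean flag decisions.

    predictions: what the model flagged (True = flagged).
    labels: your own ground truth (True = real violation).
    """
    pairs = list(zip(predictions, labels))
    tp = sum(1 for p, y in pairs if p and y)        # correctly flagged
    fp = sum(1 for p, y in pairs if p and not y)    # good content flagged
    fn = sum(1 for p, y in pairs if not p and y)    # violations missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical trial sample of five items.
model_flags = [True, True, False, True, False]
truth = [True, False, False, True, True]

precision, recall = precision_recall(model_flags, truth)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Precision tells you how much good content gets wrongly flagged. Recall tells you how much bad content slips through. Which one matters more depends on your platform.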

Explore the documentation and understand the integration effort.

Compare the potential cost savings and benefits against your current methods.

See if Hive Moderation is the key to unlocking a cleaner, safer, and more scalable platform for you.


Frequently Asked Questions

1. What is Hive Moderation used for?

Hive Moderation is primarily used by online platforms and businesses to automatically detect and filter harmful or unwanted user-generated content.

This includes spam, hate speech, graphic images, violence, and other policy-violating content across text, image, video, and audio formats.

2. Is Hive Moderation free?

Hive Moderation operates on a usage-based pricing model.

Hive typically offers a free tier or credits for testing and development purposes.

For production use with significant content volume, it is a paid service based on the amount of content processed.

3. How does Hive Moderation compare to other AI tools?

Hive is known for its comprehensive coverage of different content types (image, video, audio, text) and granular detection capabilities for various policy violations.

How it compares with other tools depends on your specific needs, but Hive is considered one of the leading providers of AI-powered content moderation.

4. Can beginners use Hive Moderation?

Hive Moderation is designed for developers and businesses with technical resources.

It is an API-based service that needs to be integrated into an existing platform.

It is not a simple plug-and-play tool for non-technical individuals.

5. Does the content created by Hive Moderation meet quality and optimization standards?

Hive Moderation does not create content.

It analyzes existing user-generated content to determine if it meets safety and policy standards.

Its ‘quality’ is measured by its accuracy in identifying harmful content, not by generating polished or optimized material.

6. Can I make money with Hive Moderation?

You don’t directly make money from the tool itself.

Businesses use Hive Moderation to build and maintain clean, safe online platforms.

A safe and clean platform leads to better user retention, increased engagement, and the ability to scale user-generated content features, which in turn can drive revenue through various business models like advertising, subscriptions, or transaction fees.